I have been working on FCN32 for semantic segmentation of single-channel images for almost two months now. I have tried different learning rates and even added a BatchNormalization layer, but I have not even managed to get any output from the network. I am out of options, so I am asking for help here. I really do not know what I am doing wrong.

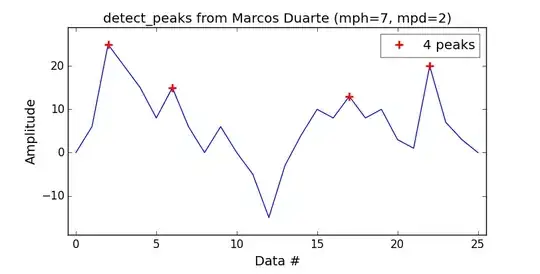

I am feeding one image per batch to the network. This is the training-loss curve with a learning rate of 1e-9 and lr_policy="fixed":

I then increased the learning rate to 1e-4 (the following figure). The loss appears to decrease, but the learning curve does not behave normally.

I reduced the layers of the original FCN as follows:

(1) Conv64 – ReLU – Conv64 – ReLU – MaxPool

(2) Conv128 – ReLU – Conv128 – ReLU – MaxPool

(3) Conv256 – ReLU – Conv256 – ReLU – MaxPool

(4) Conv4096 – ReLU – Dropout0.5

(5) Conv4096 – ReLU – Dropout0.5

(6) Conv2

(7) Deconv32x – Crop

(8) SoftmaxWithLoss
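
As a reference point for reading the loss curves: a softmax that assigns equal probability to every class has a per-pixel cross-entropy of ln(C), where C is the number of classes. The sketch below computes that baseline for a few values of C. The function name `chance_level_loss` is illustrative and not part of Caffe, and the sketch assumes the loss is averaged over the valid pixels, as `normalize: true` in the loss layer does.

```python
import math


def chance_level_loss(num_classes):
    """Cross-entropy of a uniform prediction over num_classes classes."""
    return -math.log(1.0 / num_classes)


# 2 classes as in the summary item "Conv2", 5 as in num_output of score_fr,
# 21 as in the commented-out value from the original FCN.
for c in (2, 5, 21):
    print(c, round(chance_level_loss(c), 4))
```

A training loss that hovers around this value suggests the network is still predicting close to uniformly for every pixel.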

This is the network definition (train_val.prototxt):

    layer {
      name: "data"
      type: "Data"
      top: "data"
      include {
        phase: TRAIN
      }
      transform_param {
        mean_file: "/jjj/FCN32_mean.binaryproto"
      }
      data_param {
        source: "/jjj/train_lmdb/"
        batch_size: 1
        backend: LMDB
      }
    }
    layer {
      name: "label"
      type: "Data"
      top: "label"
      include {
        phase: TRAIN
      }
      data_param {
        source: "/jjj/train_label_lmdb/"
        batch_size: 1
        backend: LMDB
      }
    }
    layer {
      name: "data"
      type: "Data"
      top: "data"
      include {
        phase: TEST
      }
      transform_param {
        mean_file: "/jjj/FCN32_mean.binaryproto"
      }
      data_param {
        source: "/jjj/val_lmdb/"
        batch_size: 1
        backend: LMDB
      }
    }
    layer {
      name: "label"
      type: "Data"
      top: "label"
      include {
        phase: TEST
      }
      data_param {
        source: "/jjj/val_label_lmdb/"
        batch_size: 1
        backend: LMDB
      }
    }

    layer {
      name: "conv1_1"
      type: "Convolution"
      bottom: "data"
      top: "conv1_1"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 64
        pad: 100
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu1_1"
      type: "ReLU"
      bottom: "conv1_1"
      top: "conv1_1"
    }
    layer {
      name: "conv1_2"
      type: "Convolution"
      bottom: "conv1_1"
      top: "conv1_2"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 64
        pad: 1
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu1_2"
      type: "ReLU"
      bottom: "conv1_2"
      top: "conv1_2"
    }
    layer {
      name: "pool1"
      type: "Pooling"
      bottom: "conv1_2"
      top: "pool1"
      pooling_param {
        pool: MAX
        kernel_size: 2
        stride: 2
      }
    }

    layer {
      name: "conv2_1"
      type: "Convolution"
      bottom: "pool1"
      top: "conv2_1"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 128
        pad: 1
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu2_1"
      type: "ReLU"
      bottom: "conv2_1"
      top: "conv2_1"
    }
    layer {
      name: "conv2_2"
      type: "Convolution"
      bottom: "conv2_1"
      top: "conv2_2"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 128
        pad: 1
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu2_2"
      type: "ReLU"
      bottom: "conv2_2"
      top: "conv2_2"
    }
    layer {
      name: "pool2"
      type: "Pooling"
      bottom: "conv2_2"
      top: "pool2"
      pooling_param {
        pool: MAX
        kernel_size: 2
        stride: 2
      }
    }

    layer {
      name: "conv3_1"
      type: "Convolution"
      bottom: "pool2"
      top: "conv3_1"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 256
        pad: 1
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu3_1"
      type: "ReLU"
      bottom: "conv3_1"
      top: "conv3_1"
    }
    layer {
      name: "conv3_2"
      type: "Convolution"
      bottom: "conv3_1"
      top: "conv3_2"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 256
        pad: 1
        kernel_size: 3
        stride: 1
      }
    }
    layer {
      name: "relu3_2"
      type: "ReLU"
      bottom: "conv3_2"
      top: "conv3_2"
    }
    layer {
      name: "pool3"
      type: "Pooling"
      bottom: "conv3_2"
      top: "pool3"
      pooling_param {
        pool: MAX
        kernel_size: 2
        stride: 2
      }
    }

    layer {
      name: "fc6"
      type: "Convolution"
      bottom: "pool3"
      top: "fc6"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 4096
        pad: 0
        kernel_size: 7
        stride: 1
      }
    }
    layer {
      name: "relu6"
      type: "ReLU"
      bottom: "fc6"
      top: "fc6"
    }
    layer {
      name: "drop6"
      type: "Dropout"
      bottom: "fc6"
      top: "fc6"
      dropout_param {
        dropout_ratio: 0.5
      }
    }
    layer {
      name: "fc7"
      type: "Convolution"
      bottom: "fc6"
      top: "fc7"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 4096
        pad: 0
        kernel_size: 1
        stride: 1
      }
    }
    layer {
      name: "relu7"
      type: "ReLU"
      bottom: "fc7"
      top: "fc7"
    }
    layer {
      name: "drop7"
      type: "Dropout"
      bottom: "fc7"
      top: "fc7"
      dropout_param {
        dropout_ratio: 0.5
      }
    }

    layer {
      name: "score_fr"
      type: "Convolution"
      bottom: "fc7"
      top: "score_fr"
      param {
        lr_mult: 1
        decay_mult: 1
      }
      param {
        lr_mult: 2
        decay_mult: 0
      }
      convolution_param {
        num_output: 5 #21
        pad: 0
        kernel_size: 1
        weight_filler {
          type: "xavier"
        }
        bias_filler {
          type: "constant"
        }
      }
    }
    layer {
      name: "upscore"
      type: "Deconvolution"
      bottom: "score_fr"
      top: "upscore"
      param {
        lr_mult: 0
      }
      convolution_param {
        num_output: 5 #21
        bias_term: false
        kernel_size: 64
        stride: 32
        group: 5 #2
        weight_filler: {
          type: "bilinear"
        }
      }
    }
    layer {
      name: "score"
      type: "Crop"
      bottom: "upscore"
      bottom: "data"
      top: "score"
      crop_param {
        axis: 2
        offset: 19
      }
    }

    layer {
      name: "accuracy"
      type: "Accuracy"
      bottom: "score"
      bottom: "label"
      top: "accuracy"
      include {
        phase: TRAIN
      }
    }
    layer {
      name: "accuracy"
      type: "Accuracy"
      bottom: "score"
      bottom: "label"
      top: "accuracy"
      include {
        phase: TEST
      }
    }
    layer {
      name: "loss"
      type: "SoftmaxWithLoss"
      bottom: "score"
      bottom: "label"
      top: "loss"
      loss_param {
        ignore_label: 255
        normalize: true
      }
    }
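
To make the spatial sizes in the definition above easier to follow, here is a minimal sketch of the output-size formulas Caffe is generally documented to use (convolution rounds down, pooling rounds up, deconvolution inverts the convolution formula), applied to the kernel, pad and stride values of the layers listed. The helper names and the 500-pixel square input are assumptions for illustration only; the actual image size is not given in this post.

```python
import math


def conv_out(size, kernel, pad=0, stride=1):
    # Convolution: floor((size + 2*pad - kernel) / stride) + 1
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    # Pooling without padding rounds up: ceil((size - kernel) / stride) + 1
    return math.ceil((size - kernel) / stride) + 1


def deconv_out(size, kernel, stride, pad=0):
    # Deconvolution: stride * (size - 1) + kernel - 2*pad
    return stride * (size - 1) + kernel - 2 * pad


def trace_sizes(size):
    """Return (score_fr size, upscore size) for a square input of `size` pixels."""
    size = conv_out(size, kernel=3, pad=100)  # conv1_1
    size = conv_out(size, kernel=3, pad=1)    # conv1_2
    size = pool_out(size)                     # pool1
    for _ in range(2):                        # conv2_1, conv2_2
        size = conv_out(size, kernel=3, pad=1)
    size = pool_out(size)                     # pool2
    for _ in range(2):                        # conv3_1, conv3_2
        size = conv_out(size, kernel=3, pad=1)
    size = pool_out(size)                     # pool3
    size = conv_out(size, kernel=7)           # fc6
    size = conv_out(size, kernel=1)           # fc7
    score_fr = conv_out(size, kernel=1)       # score_fr
    upscore = deconv_out(score_fr, kernel=64, stride=32)
    return score_fr, upscore


# Hypothetical 500x500 input; substitute the real image size.
print(trace_sizes(500))
```

Under the assumed 500-pixel input this gives an 82-pixel score map and a 2656-pixel upsampled map, from which the Crop layer cuts a window the size of `data` starting at offset 19. The three pooling layers downsample by a factor of 8 overall, while `upscore` upsamples with stride 32.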

And this is the solver definition:

    net: "train_val.prototxt"
    #test_net: "val.prototxt"
    test_iter: 736
    # make test net, but don't invoke it from the solver itself
    test_interval: 2000 #1000000
    display: 50
    average_loss: 50
    lr_policy: "step" #"fixed"
    stepsize: 2000 #+
    gamma: 0.1 #+
    # lr for unnormalized softmax
    base_lr: 0.0001
    # high momentum
    momentum: 0.99
    # no gradient accumulation
    iter_size: 1
    max_iter: 10000
    weight_decay: 0.0005
    snapshot: 2000
    snapshot_prefix: "snapshot/NET1"
    test_initialization: false
    solver_mode: GPU
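
For reference, the "step" policy multiplies the learning rate by gamma every stepsize iterations, i.e. lr = base_lr * gamma^floor(iter / stepsize). The sketch below evaluates that formula with the solver values above. It also shows the factor 1 / (1 - momentum) by which plain SGD with momentum scales the step in the idealized case of a constant gradient. Both helper names are illustrative and are not Caffe functions.

```python
def step_lr(iteration, base_lr=1e-4, gamma=0.1, stepsize=2000):
    """Learning rate under lr_policy "step" at a given iteration."""
    return base_lr * gamma ** (iteration // stepsize)


def momentum_gain(momentum=0.99):
    """Asymptotic step multiplier of SGD with momentum for a constant gradient."""
    return 1.0 / (1.0 - momentum)


# Learning rate at each step boundary up to max_iter = 10000.
for it in (0, 2000, 4000, 6000, 8000, 9999):
    print(it, step_lr(it))

print("momentum gain:", momentum_gain())
```

With these settings the rate falls by a factor of ten every 2000 iterations and is about 1e-8 from iteration 8000 onward, while a momentum of 0.99 corresponds to a gain of roughly 100 under the constant-gradient assumption.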

At the beginning the loss starts to fall, but after some iterations it stops showing good learning behavior:

I am a beginner in deep learning and Caffe, and I really do not understand why this happens. I would really appreciate it if someone with more expertise could take a look at the model definition. Thank you in advance for any help.