I am trying to implement something similar to this using OpenCV.

However, I am running into some walls (probably due to my own ignorance in working with OpenCV).

When I try to perform a distance transform on my image, I am not getting the expected result at all.

This is the original image I am working with.

This is the image I get after doing some cleanup with OpenCV:

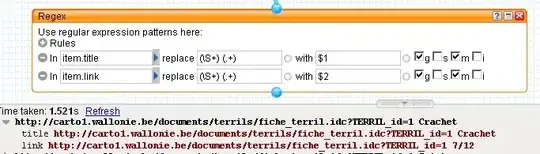

This is the weirdness I get after running a distance transform on the above image. My understanding is that the result should look more like a blurry heat map. If I follow the OpenCV example past this point and apply a threshold to find the distance peaks, I get nothing but a black image.
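To make the expectation concrete, here is a minimal sketch in plain C++ of what a Euclidean distance transform computes: every non-zero pixel gets the distance to the nearest zero pixel, which is why the result is a floating-point map that looks like a smooth heat map once scaled for display. `bruteForceDistance` is a hypothetical helper written for illustration, not an OpenCV function, and it is far slower than `cv::distanceTransform`.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical helper (not part of OpenCV): brute-force Euclidean distance
// transform of a row-major binary mask. Each non-zero pixel receives the
// distance to the nearest zero pixel; zero pixels receive 0.
// If the mask contains no zero pixel, non-zero pixels keep the float maximum.
std::vector<float> bruteForceDistance(const std::vector<std::uint8_t>& mask,
                                      int width, int height) {
    std::vector<float> dist(mask.size(), 0.0f);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (mask[y * width + x] == 0) continue;  // background stays 0
            float best = std::numeric_limits<float>::max();
            for (int v = 0; v < height; ++v) {
                for (int u = 0; u < width; ++u) {
                    if (mask[v * width + u] != 0) continue;
                    float d = std::hypot(static_cast<float>(x - u),
                                         static_cast<float>(y - v));
                    best = std::min(best, d);
                }
            }
            dist[y * width + x] = best;
        }
    }
    return dist;
}
```

The values are real-valued distances rather than 0 to 255 intensities, so they need scaling before they can be viewed as an 8-bit image.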

This is the code I have cobbled together so far from a few different OpenCV examples:

    cv::Mat outerBox = cv::Mat(matImage.size(), CV_8UC1);
    cv::Mat kernel = (cv::Mat_<uchar>(3,3) << 0,1,0,1,1,1,0,1,0);

    for(int x = 0; x < 3; x++) {
        cv::GaussianBlur(matImage, matImage, cv::Size(11,11), 0);
        cv::adaptiveThreshold(matImage, outerBox, 255, cv::ADAPTIVE_THRESH_MEAN_C, cv::THRESH_BINARY, 5, 2);
        cv::bitwise_not(outerBox, outerBox);
        cv::dilate(outerBox, outerBox, kernel);
        cv::dilate(outerBox, outerBox, kernel);
        removeBlobs(outerBox, 1);
        erode(outerBox, outerBox, kernel);
    }

    cv::Mat dist;
    cv::bitwise_not(outerBox, outerBox);
    distanceTransform(outerBox, dist, cv::DIST_L2, 5);

    // Normalize the distance image for range = {0.0, 1.0}
    // so we can visualize and threshold it
    normalize(dist, dist, 0, 1., cv::NORM_MINMAX);

    //using a threshold at this point like the opencv example shows to find peaks just returns a black image right now
    //threshold(dist, dist, .4, 1., CV_THRESH_BINARY);
    //cv::Mat kernel1 = cv::Mat::ones(3, 3, CV_8UC1);
    //dilate(dist, dist, kernel1);

    self.mainImage.image = [UIImage fromCVMat:outerBox];

    void removeBlobs(cv::Mat &outerBox, int iterations) {
        int count=0;
        int max=-1;
        cv::Point maxPt;

        for(int iteration = 0; iteration < iterations; iteration++) {
            for(int y=0;y<outerBox.size().height;y++)
            {
                uchar *row = outerBox.ptr(y);
                for(int x=0;x<outerBox.size().width;x++)
                {
                    if(row[x]>=128)
                    {
                        int area = floodFill(outerBox, cv::Point(x,y), CV_RGB(0,0,64));
                        if(area>max)
                        {
                            maxPt = cv::Point(x,y);
                            max = area;
                        }
                    }
                }
            }

            floodFill(outerBox, maxPt, CV_RGB(255,255,255));

            for(int y=0;y<outerBox.size().height;y++)
            {
                uchar *row = outerBox.ptr(y);
                for(int x=0;x<outerBox.size().width;x++)
                {
                    if(row[x]==64 && x!=maxPt.x && y!=maxPt.y)
                    {
                        int area = floodFill(outerBox, cv::Point(x,y), CV_RGB(0,0,0));
                    }
                }
            }
        }
    }

I've been banging my head on this for a few hours and I am totally stuck in the mud on it, so any help would be greatly appreciated. This is a little bit out of my depth, and I feel like I am probably just making some basic mistake somewhere without realizing it.

EDIT:

Using the same code as above with OpenCV for Mac (not iOS), I get the expected results.

This seems to indicate that the issue is with the Mat -> UIImage bridging that OpenCV suggests using. I am going to push forward using the Mac library to test my code, but it would sure be nice to be able to get consistent results from the UIImage bridging as well.

    + (UIImage*)fromCVMat:(const cv::Mat&)cvMat
    {
        // (1) Construct the correct color space
        CGColorSpaceRef colorSpace;
        if ( cvMat.channels() == 1 ) {
            colorSpace = CGColorSpaceCreateDeviceGray();
        } else {
            colorSpace = CGColorSpaceCreateDeviceRGB();
        }

        // (2) Create image data reference
        CFDataRef data = CFDataCreate(kCFAllocatorDefault, cvMat.data, (cvMat.elemSize() * cvMat.total()));

        // (3) Create CGImage from cv::Mat container
        CGDataProviderRef provider = CGDataProviderCreateWithCFData(data);
        CGImageRef imageRef = CGImageCreate(cvMat.cols,
                                            cvMat.rows,
                                            8,
                                            8 * cvMat.elemSize(),
                                            cvMat.step[0],
                                            colorSpace,
                                            kCGImageAlphaNone | kCGBitmapByteOrderDefault,
                                            provider,
                                            NULL,
                                            false,
                                            kCGRenderingIntentDefault);

        // (4) Create UIImage from CGImage
        UIImage * finalImage = [UIImage imageWithCGImage:imageRef];

        // (5) Release the references
        CGImageRelease(imageRef);
        CGDataProviderRelease(provider);
        CFRelease(data);
        CGColorSpaceRelease(colorSpace);

        // (6) Return the UIImage instance
        return finalImage;
    }
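
One possible explanation for the bridging difference, offered as an assumption rather than a confirmed diagnosis: `distanceTransform` produces a 32-bit float image by default, while the method above always passes 8 bits per component and `8 * cvMat.elemSize()` bits per pixel. For a float `cv::Mat` that works out to 32 bits per pixel, so the raw float bytes would be read as pixel data if `dist` is what gets passed in. The sketch below, in plain C++ with hypothetical helper names (`toEightBit`, `bitsPerPixel`), shows the mismatch and the kind of scaling that would be needed before bridging.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: bits per pixel as the bridging method computes it,
// i.e. 8 times the element size in bytes.
constexpr std::size_t bitsPerPixel(std::size_t elemSizeBytes) {
    return 8 * elemSizeBytes;
}

// Hypothetical helper: map floats normalized to [0, 1] onto bytes 0..255,
// clamping anything outside that range.
std::vector<std::uint8_t> toEightBit(const std::vector<float>& normalized) {
    std::vector<std::uint8_t> out;
    out.reserve(normalized.size());
    for (float v : normalized) {
        float clamped = std::min(1.0f, std::max(0.0f, v));
        out.push_back(static_cast<std::uint8_t>(std::lround(clamped * 255.0f)));
    }
    return out;
}
```

With OpenCV itself, the equivalent step would be something like `dist.convertTo(dist8u, CV_8U, 255.0)` after the normalize call, then handing `dist8u` (a name chosen here for illustration) to `fromCVMat`. An 8-bit single-channel Mat has an element size of 1 byte, which matches the 8 bits per component the method assumes.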