How do I calculate RCU and WCU given a read throughput of 32 GB/s and a write throughput of 16 GB/s?

- How large is each *item* (row) in your table? When reading, are you using a Query on the Partition Key, or a full scan? – John Rotenstein Feb 27 '17 at 23:26

- I just wanted to calculate the number of partitions for a table of 20 GB of data, provisioned for a read throughput of 32 GB/s and a write throughput of 16 GB/s. That was the question, so I was confused about computing RCU and WCU from this data. – pavikirthi Feb 28 '17 at 16:39

2 Answers

DynamoDB Provisioned Throughput is based upon a certain size of units, and the number of items being written:

In DynamoDB, you specify provisioned throughput requirements in terms of capacity units. Use the following guidelines to determine your provisioned throughput:

- One read capacity unit represents one strongly consistent read per second, or two eventually consistent reads per second, for items up to 4 KB in size. If you need to read an item that is larger than 4 KB, DynamoDB will need to consume additional read capacity units. The total number of read capacity units required depends on the item size, and whether you want an eventually consistent or strongly consistent read.

- One write capacity unit represents one write per second for items up to 1 KB in size. If you need to write an item that is larger than 1 KB, DynamoDB will need to consume additional write capacity units. The total number of write capacity units required depends on the item size.

Therefore, when determining your desired capacity, you need to know how many items you wish to read and write per second, and the size of those items.
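The rules quoted above can be sketched as a small calculation. This is only an illustration of the rounding described in the quote, not an official AWS calculator; the function names and the 6 KB example item are assumptions made up for the example.

```python
import math

READ_UNIT_BYTES = 4 * 1024   # one RCU covers a read of an item up to 4 KB
WRITE_UNIT_BYTES = 1 * 1024  # one WCU covers a write of an item up to 1 KB


def read_capacity_units(item_size_bytes, reads_per_second, strongly_consistent=True):
    """Estimate the RCUs needed for a steady rate of single-item reads."""
    # Each read is rounded up to a whole number of 4 KB units.
    units_per_read = math.ceil(item_size_bytes / READ_UNIT_BYTES)
    total = units_per_read * reads_per_second
    if not strongly_consistent:
        # One RCU buys two eventually consistent reads per second.
        total = total / 2
    return math.ceil(total)


def write_capacity_units(item_size_bytes, writes_per_second):
    """Estimate the WCUs needed for a steady rate of single-item writes."""
    # Each write is rounded up to a whole number of 1 KB units.
    units_per_write = math.ceil(item_size_bytes / WRITE_UNIT_BYTES)
    return units_per_write * writes_per_second


# Hypothetical workload: 6 KB items, 100 reads/s and 50 writes/s.
print(read_capacity_units(6 * 1024, 100))                             # 200
print(read_capacity_units(6 * 1024, 100, strongly_consistent=False))  # 100
print(write_capacity_units(6 * 1024, 50))                             # 300
```

Note that the inputs are an item size and an item rate, not a raw byte rate, which is why the item size matters before any GB/s figure can be translated into capacity units.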

Rather than seeking a particular GB/s, you should be seeking a given number of items that you wish to read/write per second. That is the functionality that your application would require to meet operational performance.

There are also some DynamoDB limits that would apply, but these can be changed upon request:

- US East (N. Virginia) Region:
  - Per table – 40,000 read capacity units and 40,000 write capacity units
  - Per account – 80,000 read capacity units and 80,000 write capacity units
- All Other Regions:
  - Per table – 10,000 read capacity units and 10,000 write capacity units
  - Per account – 20,000 read capacity units and 20,000 write capacity units

At the US East per-table limit, the maximum read rate is 40,000 read capacity units × 4 KB × 2 (eventually consistent) = 320 MB/s.

If my calculations are correct, your requirements are 100x this amount, so it would appear that DynamoDB is not an appropriate solution for such high throughputs.
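The arithmetic behind that comparison can be checked with a short script. It assumes decimal units (1 KB = 1,000 bytes) to match the 320 MB/s figure and uses the default US East per-table limits listed above. The write-side comparison is an extension using the same limits and the 1 KB write unit; it is not a figure stated in the answer.

```python
KB = 1_000
MB = 1_000**2
GB = 1_000**3

TABLE_LIMIT_RCU = 40_000  # default per-table limit, US East (from the list above)
TABLE_LIMIT_WCU = 40_000

# Best case for reads: every read is a full 4 KB item, eventually consistent (x2).
max_read_bytes_per_s = TABLE_LIMIT_RCU * 4 * KB * 2
# Best case for writes: every write is a full 1 KB item.
max_write_bytes_per_s = TABLE_LIMIT_WCU * 1 * KB

required_read_bytes_per_s = 32 * GB
required_write_bytes_per_s = 16 * GB

print(max_read_bytes_per_s / MB)                           # 320.0 MB/s
print(required_read_bytes_per_s / max_read_bytes_per_s)    # 100.0 times the limit
print(max_write_bytes_per_s / MB)                          # 40.0 MB/s
print(required_write_bytes_per_s / max_write_bytes_per_s)  # 400.0 times the limit
```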

Are your speeds correct?

Then comes the question of how you are generating so much data per second. A full-duplex 10GFC fiber runs at 2550MB/s, so you would need multiple fiber connections to transmit such data if it is going into/out of the AWS cloud.

Even 10Gb Ethernet only provides 10Gbit/s, so transferring 32GB would require 28 seconds -- and that's to transmit one second of data!
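As a rough check of the transfer time, the sketch below divides the payload by the raw line rate. It ignores protocol overhead, and the helper name is made up for the example. The result depends on whether "32 GB" is read as decimal gigabytes or binary gibibytes; the binary reading gives about 27.5 seconds, consistent with the roughly 28 seconds quoted above.

```python
def transfer_seconds(payload_bytes, link_bits_per_second):
    """Time to push a payload through a link at its raw line rate."""
    return payload_bytes * 8 / link_bits_per_second


TEN_GBIT_ETHERNET = 10 * 10**9  # 10 Gbit/s, raw line rate

decimal_seconds = transfer_seconds(32 * 10**9, TEN_GBIT_ETHERNET)  # 32 GB (decimal)
binary_seconds = transfer_seconds(32 * 2**30, TEN_GBIT_ETHERNET)   # 32 GiB (binary)

print(round(decimal_seconds, 1))  # 25.6
print(round(binary_seconds, 1))   # 27.5
```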

Bottom line: Your data requirements are super high. Are you sure they are realistic?


- That's very intuitive. That was a class question, and I guess it wasn't realistic. – pavikirthi Mar 01 '17 at 15:17

- 1 Read Capacity Unit represents only one read per second for items up to 4KB? For the free tier, it says 25 Units of Read Capacity, and also says "enough to handle 200M requests a month". 25x86400x30≈65 million. Where's the disconnect between 200M and 65M? – Old Geezer Mar 12 '18 at 02:49

- @OldGeezer See [Clarification on DynamoDB Free Tier Usage](https://acloud.guru/forums/aws-certified-developer-associate/discussion/-KvFDbwj7qK8yoPmJaWe/Clarification%20on%20DynamoDB%20Free%20Tier%20Usage). In future, please start a new Question rather than asking via a comment on an old Question. – John Rotenstein Mar 12 '18 at 04:03

- 20,000 writes per second sounds super low. I've seen a benchmark for MySQL where the baseline was ~220,000 INSERT statements per second. – Martin Thoma Aug 15 '19 at 07:08

- Looking at some classical solution architect questions, the correct answer seems to imply the sizing is per second but the limits are on the average. For example, if you need to perform 20 consistent reads every 10 seconds, you need 1 capacity unit. What if you perform all the reads in the same second? – Edmondo Aug 24 '19 at 11:46

- Here's an article from somebody who obviously took a deep dive on calculating RCUs/WCUs depending on the item size: https://medium.com/@zaccharles/calculating-a-dynamodb-items-size-and-consumed-capacity-d1728942eb7c – dwytrykus Apr 27 '20 at 09:50

- So, if I make scan operations on a table of 1000 items of 3K -> 1000 capacity units? If I make a get_item by PK -> 1 capacity unit? – Jorge Tovar Apr 30 '20 at 20:52
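On the scan question in the last comment: as far as the documented rounding goes, a Scan is charged on the cumulative size of the items it evaluates, rounded up to the next 4 KB, rather than on each item separately. The sketch below illustrates the difference under simplifying assumptions: "3K" is taken as exactly 3 KB including attribute names, and the extra rounding that happens per 1 MB page of a paginated scan is ignored. The function names are hypothetical.

```python
import math

READ_UNIT_BYTES = 4 * 1024  # one RCU covers up to 4 KB, strongly consistent


def get_item_rcu(item_size_bytes, strongly_consistent=True):
    """Capacity consumed by a single GetItem: rounded up per item."""
    units = math.ceil(item_size_bytes / READ_UNIT_BYTES)
    return units if strongly_consistent else units / 2


def scan_rcu(item_count, item_size_bytes, strongly_consistent=False):
    """Approximate capacity consumed by scanning items of uniform size.

    The sizes are summed first and only then rounded up to 4 KB units.
    Scans are eventually consistent unless requested otherwise.
    """
    total_bytes = item_count * item_size_bytes
    units = math.ceil(total_bytes / READ_UNIT_BYTES)
    return units if strongly_consistent else units / 2


print(scan_rcu(1000, 3 * 1024, strongly_consistent=True))  # 750
print(scan_rcu(1000, 3 * 1024))                            # 375.0
print(get_item_rcu(3 * 1024))                              # 1
```

Under these assumptions the scan costs roughly 750 units with strong consistency (375 with the default eventual consistency), not 1000, while the single get_item on a 3 KB item does cost 1 unit for a strongly consistent read.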

If you click on the Capacity tab of your DynamoDB table, there is a capacity calculator link next to Estimated cost. You can use it to determine the read and write capacity units along with the estimated cost.

Read capacity units depend on the type of read you need (strongly consistent or eventually consistent), the item size, and the throughput you desire.

Write capacity units are determined by throughput and item size only.

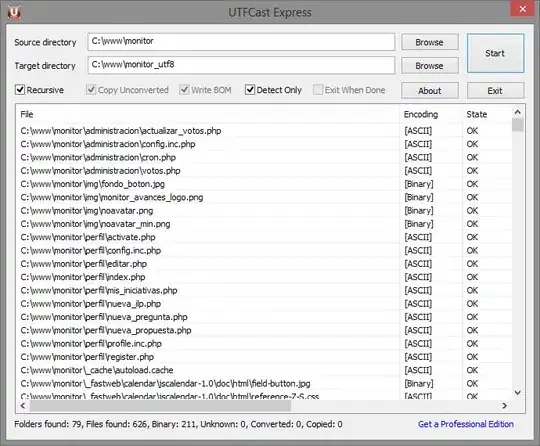

for calculating item size you can refer this and below is a screenshot of the calculator
