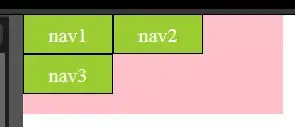

I am trying to do regression in TensorFlow. I'm not sure I am calculating R^2 correctly, because my TensorFlow code gives a different answer than sklearn.metrics.r2_score. Can someone please look at my code below and let me know whether I implemented the pictured equation correctly? Thanks.

    total_error = tf.square(tf.sub(y, tf.reduce_mean(y)))
    unexplained_error = tf.square(tf.sub(y, prediction))
    R_squared = tf.reduce_mean(tf.sub(tf.div(unexplained_error, total_error), 1.0))
    R = tf.mul(tf.sign(R_squared), tf.sqrt(tf.abs(R_squared)))
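
For comparison, here is a minimal plain-Python sketch of the usual definition, R^2 = 1 - SS_res / SS_tot. It assumes that is what the pictured equation shows and that y and prediction are 1-D sequences of the same length. The helper name `r_squared` and the sample values are made up for illustration. It differs from the code above in two ways: both squared-error terms are summed before dividing (rather than dividing element-wise and then averaging), and the ratio is subtracted from 1 (rather than 1 being subtracted from the ratio). In TensorFlow, the sums would presumably correspond to `tf.reduce_sum`.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    # Total sum of squares: spread of the targets around their mean.
    ss_tot = sum((yi - mean_y) ** 2 for yi in y_true)
    # Residual sum of squares: what the predictions fail to explain.
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y_true, y_pred))
    return 1.0 - ss_res / ss_tot


# Hypothetical sample data, only to exercise the function.
y = [3.0, -0.5, 2.0, 7.0]
prediction = [2.5, 0.0, 2.0, 8.0]
print(r_squared(y, prediction))
```

If this sketch and sklearn.metrics.r2_score agree on the same data, the remaining gap is most likely due to the order of the reduction and the division in the TensorFlow version.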