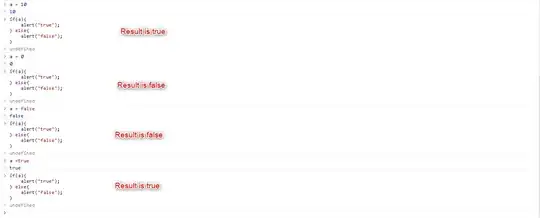

I trained FCN32 for semantic segmentation from scratch on my own data and got the following output:

As can be seen, this is not a good learning curve, which suggests the network is not training properly on the data.

The solver is as follows:

    net: "train_val.prototxt"
    #test_net: "val.prototxt"
    test_iter: 5105 #736
    # make test net, but don't invoke it from the solver itself
    test_interval: 1000000 #20000
    display: 50
    average_loss: 50
    lr_policy: "step" #"fixed"
    stepsize: 50000 #+
    gamma: 0.1 #+
    # lr for unnormalized softmax
    base_lr: 1e-10
    # high momentum
    momentum: 0.99
    # no gradient accumulation
    iter_size: 1
    max_iter: 600000
    weight_decay: 0.0005
    snapshot: 30000
    snapshot_prefix: "snapshot/FCN32s_CNN1"
    test_initialization: false
    solver_mode: GPU
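
For reference, here is a minimal sketch of how the learning rate evolves under this solver, assuming Caffe's documented rule for the "step" policy, lr = base_lr * gamma^floor(iter / stepsize). The function name `step_lr` is just an illustrative helper, not part of Caffe; the default values are copied from the solver above.

```python
def step_lr(iteration, base_lr=1e-10, gamma=0.1, stepsize=50000):
    """Learning rate at a given iteration under a "step" schedule.

    Assumes the rule lr = base_lr * gamma ** floor(iteration / stepsize).
    Defaults mirror base_lr, gamma and stepsize from the solver above.
    """
    return base_lr * gamma ** (iteration // stepsize)


if __name__ == "__main__":
    # Print the rate at a few points between the start and max_iter.
    for it in (0, 49999, 50000, 100000, 550000):
        print(f"iter {it:>6}: lr = {step_lr(it):.3e}")
```

With stepsize 50000 and gamma 0.1, the rate is multiplied by 0.1 eleven times by iteration 550000, so most of the 600000 iterations run at a rate far below base_lr.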

After changing the learning rate to 0.001, it became worse:

What can I do to improve the training? Thanks.
