I have an Azure WebJob with several queue-triggered functions. The SDK documentation at https://learn.microsoft.com/en-us/azure/app-service-web/websites-dotnet-webjobs-sdk-storage-queues-how-to#config defines the MaxDequeueCount property as:

The maximum number of retries before a queue message is sent to a poison queue (default is 5).

but I'm not seeing this behavior. In my WebJob I've got:

    JobHostConfiguration config = new JobHostConfiguration();
    config.Queues.MaxDequeueCount = 1;
    JobHost host = new JobHost(config);
    host.RunAndBlock();

and then I've got a queue-triggered function in which I throw an exception:

    public void ProcessQueueMessage([QueueTrigger("azurewejobtestingqueue")] string item, TextWriter logger)
    {
        if (item == "exception")
        {
            throw new Exception();
        }
    }

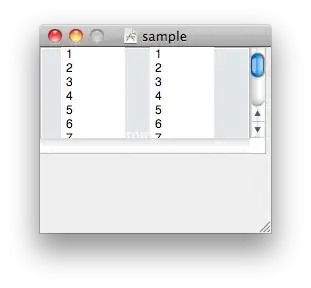

Looking at the webjobs dashboard I see that the SDK makes 5 attempts (5 is the default as stated above):

and after the 5th attempt the message is moved to the poison queue. I expect to see 1 retry (or no retries?) not 5.
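To make the expectation concrete, here is a minimal sketch of the behavior I assumed from the documentation. It is a plain simulation, not the SDK's implementation; `AttemptsBeforePoison` and the in-memory poison list are hypothetical names used only for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class PoisonQueueExpectation
{
    // Simulates the assumed rule: a failed message is retried until its
    // dequeue count reaches maxDequeueCount, then it goes to the poison queue.
    // Returns the number of times the handler was invoked.
    public static int AttemptsBeforePoison(
        int maxDequeueCount,
        Func<string, bool> handler,
        string message,
        List<string> poisonQueue)
    {
        int dequeueCount = 0;
        while (true)
        {
            dequeueCount++;                       // every dequeue bumps the count
            if (handler(message))
            {
                return dequeueCount;              // success: message would be deleted
            }
            if (dequeueCount >= maxDequeueCount)
            {
                poisonQueue.Add(message);         // give up and park the message
                return dequeueCount;
            }
        }
    }

    public static void Main()
    {
        var poison = new List<string>();
        // The handler fails for "exception", mirroring the function above.
        int attempts = AttemptsBeforePoison(1, m => m != "exception", "exception", poison);
        Console.WriteLine($"attempts={attempts}, poisoned={poison.Count}");
    }
}
```

Under that assumption, MaxDequeueCount = 1 would mean a single attempt followed by a move to the poison queue, which is not what the dashboard shows.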

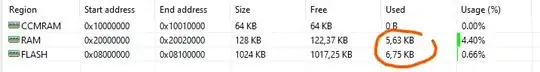

UPDATE: I enabled detailed logging for the web app and opted to save those logs to an Azure blob container. I found some logs relevant to my problem in the azure-jobs-host-archive container. Here's an example showing an item with a dequeue count of 96:

    {
      "Type": "FunctionCompleted",
      "EndTime": "2017-02-22T00:07:40.8133081+00:00",
      "Failure": {
        "ExceptionType": "Microsoft.Azure.WebJobs.Host.FunctionInvocationException",
        "ExceptionDetails": "Microsoft.Azure.WebJobs.Host.FunctionInvocationException: Exception while executing function: ItemProcessor.ProcessQueueMessage ---> MyApp.Exceptions.MySpecialAppExceptionType: Exception of type 'MyApp.Exceptions.MySpecialAppExceptionType' was thrown.
      },
      "ParameterLogs": {},
      "FunctionInstanceId": "1ffac7b0-1290-4343-8ee1-2af0d39ae2c9",
      "Function": {
        "Id": "MyApp.Processors.ItemProcessor.ProcessQueueMessage",
        "FullName": "MyApp.Processors.ItemProcessor.ProcessQueueMessage",
        "ShortName": "ItemProcessor.ProcessQueueMessage",
        "Parameters": [
          {
            "Type": "QueueTrigger",
            "AccountName": "MyStorageAccount",
            "QueueName": "stuff-processor",
            "Name": "sourceFeedItemQueueItem"
          },
          {
            "Type": "BindingData",
            "Name": "dequeueCount"
          },
          {
            "Type": "ParameterDescriptor",
            "Name": "logger"
          }
        ]
      },
      "Arguments": {
        "sourceFeedItemQueueItem": "{\"SourceFeedUpdateID\":437530,\"PodcastFeedID\":\"2d48D2sf2\"}",
        "dequeueCount": "96",
        "logger": null
      },
      "Reason": "AutomaticTrigger",
      "ReasonDetails": "New queue message detected on 'stuff-processor'.",
      "StartTime": "2017-02-22T00:07:40.6017341+00:00",
      "OutputBlob": {
        "ContainerName": "azure-webjobs-hosts",
        "BlobName": "output-logs/1ffd3c7b012c043438ed12af0d39ae2c9.txt"
      },
      "ParameterLogBlob": {
        "ContainerName": "azure-webjobs-hosts",
        "BlobName": "output-logs/1cf2c1b012sa0d3438ee12daf0d39ae2c9.params.txt"
      },
      "LogLevel": "Info",
      "HostInstanceId": "d1825bdb-d92a-4657-81a4-36253e01ea5e",
      "HostDisplayName": "ItemProcessor",
      "SharedQueueName": "azure-webjobs-host-490daea03c70316f8aa2509438afe8ef",
      "InstanceQueueName": "azure-webjobs-host-d18252sdbd92a4657d1a436253e01ea5e",
      "Heartbeat": {
        "SharedContainerName": "azure-webjobs-hosts",
        "SharedDirectoryName": "heartbeats/490baea03cfdfd0416f8aa25aqr438afe8ef",
        "InstanceBlobName": "zd1825bdbdsdgga465781a436q53e01ea5e",
        "ExpirationInSeconds": 45
      },
      "WebJobRunIdentifier": {
        "WebSiteName": "myappengine",
        "JobType": "Continuous",
        "JobName": "ItemProcessor",
        "RunId": ""
      }
    }

What I'm still looking for, though, are logs that show the outcome for a particular queue item: either processing succeeds (and the item is removed from the queue) or it fails with an exception and the item is placed in the poison queue. So far I haven't found any logs with that detail. The log files referenced in the output above do not contain this kind of data.
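In the meantime, one way to get per-message detail would be to write it from the function itself. A minimal sketch, assuming the SDK's `dequeueCount` binding data (the same parameter that appears in the log above); the log wording and the `Process` helper are hypothetical placeholders, not my real code:

```csharp
using System;
using System.IO;
using Microsoft.Azure.WebJobs;

public class ItemProcessor
{
    // dequeueCount is supplied by the SDK from the queue message's metadata.
    public void ProcessQueueMessage(
        [QueueTrigger("stuff-processor")] string sourceFeedItemQueueItem,
        int dequeueCount,
        TextWriter logger)
    {
        logger.WriteLine("Attempt {0} for message: {1}", dequeueCount, sourceFeedItemQueueItem);
        try
        {
            Process(sourceFeedItemQueueItem);
            logger.WriteLine("Succeeded on attempt {0}", dequeueCount);
        }
        catch (Exception ex)
        {
            // Record the failure, then rethrow so the SDK still sees it as a failed invocation.
            logger.WriteLine("Failed on attempt {0}: {1}", dequeueCount, ex.Message);
            throw;
        }
    }

    // Hypothetical stand-in for the real processing logic.
    private static void Process(string item)
    {
    }
}
```

This only records what each invocation saw; it would not by itself show when the SDK moves a message to the poison queue.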

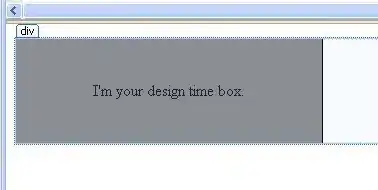

UPDATE 2: Looked at the state of my poison queue and it seems like it could be a smoking gun but I'm too dense to put 2 and 2 together. Looking at the screenshot of the queue below you can see the message with the ID (left column) 431210 in there many times. The fact that it appears multiple times says to me that the message in the original queue is failing improperly.