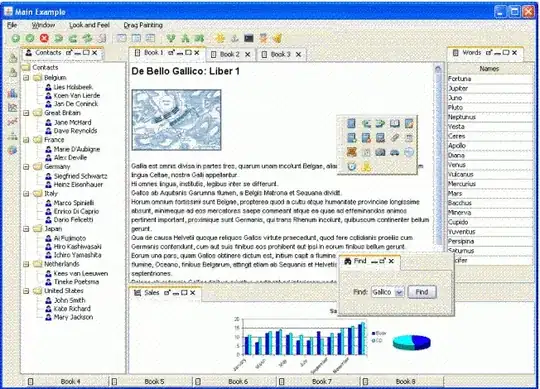

I am using a CNN for a regression task. I use TensorFlow, and the optimizer is Adam. The network seems to converge perfectly fine until a certain point, where the loss suddenly increases along with the validation error. Here are the loss plots for the labels and the weights, shown separately (the optimizer is run on their sum).

I use L2 loss for weight regularization and also for the labels. I apply some randomness to the training data. I am currently trying RMSProp to see if the behavior changes, but it takes at least 8 hours to reproduce the error.
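To make the setup concrete, here is a minimal sketch of how the two plotted terms combine into the objective. It is plain Python rather than the actual TensorFlow graph, and the names `l2_loss`, `total_loss`, and the `weight_decay` coefficient are assumptions for illustration only; the real model may scale the weight term differently or not at all.

```python
def l2_loss(values):
    """Half the sum of squares (the same convention as tf.nn.l2_loss)."""
    return 0.5 * sum(v * v for v in values)


def total_loss(predictions, labels, weights, weight_decay=1e-4):
    """Return (label_loss, weight_loss, total).

    weight_decay is a hypothetical scaling factor for the regularizer;
    it is not taken from the original setup.
    """
    # L2 loss on the regression error (prediction minus label).
    label_loss = l2_loss([p - y for p, y in zip(predictions, labels)])
    # L2 penalty on the network weights.
    weight_loss = weight_decay * l2_loss(weights)
    # The optimizer minimizes the sum of both terms.
    return label_loss, weight_loss, label_loss + weight_loss


label_term, weight_term, objective = total_loss(
    predictions=[1.0, 2.0], labels=[0.0, 0.0], weights=[2.0], weight_decay=0.5
)
```

Tracking the two terms separately, as in the plots, shows which of them moves first when the total starts to rise.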

I would like to understand how this can happen. Hope you can help me.