My professor just taught us, as a rule of thumb, that any operation that halves the length of the input has O(log(n)) complexity. Why is it not O(sqrt(n))? Aren't the two equivalent?

-

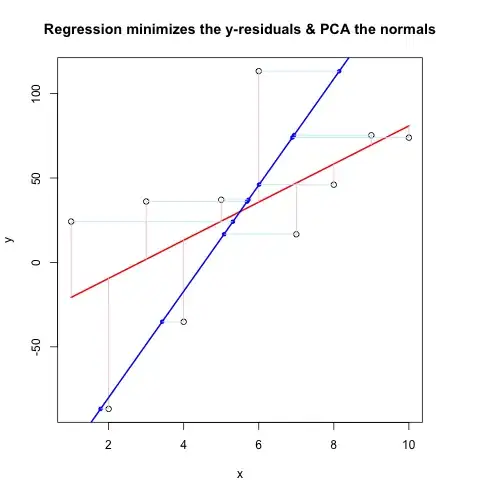

10Plot the graphs of `log(n)` and `sqrt(n)` up to about `n==1000`, see if you still think they are equivalent, whatever you mean by that. – High Performance Mark Feb 04 '17 at 08:53

-

2log(1) = 0 and sqrt(1) = 1 – Sung Feb 04 '17 at 09:02

-

10Sorry I don't know what I was thinking, all answers are extremely informative though. Thank you – white_tree Feb 04 '17 at 13:46

-

1Is `log(n)` equivalent to `sqrt(n)`? differing only by a constant factor? – user207421 Mar 19 '21 at 02:27

8 Answers

They are not equivalent: sqrt(N) increases much more quickly than log2(N). There is no constant C such that sqrt(N) < C * log2(N) for all values of N greater than some minimum value.

An easy way to grasp this is that log2(N) is close to the number of (binary) digits of N, while sqrt(N) is a number that itself has about half as many digits as N. Or, to state that as an equality:

log2(N) = 2log2(sqrt(N))

So you need to take the logarithm(!) of sqrt(N) to bring it down to the same order of complexity as log2(N).

For example, for a binary number with 11 digits, 0b10000000000 (= 2^10), the square root is 0b100000 (= 2^5 = 32), but the logarithm is only 10.
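
The digit-count intuition can be checked with a short Python sketch. The helper name `compare` is made up for this illustration; it relies on the fact that, for a positive integer, `int.bit_length() - 1` equals floor(log2(n)).

```python
import math

def compare(n):
    """Return (bit length of n, floor(log2(n)), floor(sqrt(n))) for n >= 1."""
    bits = n.bit_length()
    # For a positive integer, floor(log2(n)) is one less than its bit length.
    return bits, bits - 1, math.isqrt(n)

for exp in (10, 20, 40):
    n = 2 ** exp
    bits, log2_n, sqrt_n = compare(n)
    # sqrt(n) still has roughly half the digits of n; log2(n) is only
    # about as large as the digit count itself.
    print(f"n=2^{exp}: {bits} bits, log2(n)={log2_n}, "
          f"sqrt(n)={sqrt_n} ({sqrt_n.bit_length()} bits)")
```

The gap widens quickly: the logarithm tracks the number of digits, while the square root is a number with about half as many digits as n.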

Assuming natural logarithms (otherwise just multiply by a constant), we have

lim {n->inf} log(n) / sqrt(n)   (form inf / inf)

= lim {n->inf} (1/n) / (1/(2*sqrt(n))) (by L'Hospital)

= lim {n->inf} 2*sqrt(n)/n

= lim {n->inf} 2/sqrt(n)

= 0 < inf

Refer to https://en.wikipedia.org/wiki/Big_O_notation for the alternative (limit-based) definition of O(.); from the above we can say that log n = O(sqrt(n)).

Also compare the growth of the two functions: with natural logarithms, log n is bounded above by sqrt(n) for all n > 0.
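
As a rough numerical check of that bound (a sketch, not a proof), the snippet below evaluates ln(n) / sqrt(n) at a few sample points. The function name `ratio` and the sample values are chosen for this illustration. Setting the derivative of the ratio to zero gives its maximum at n = e^2, where it equals 2/e, which is below 1.

```python
import math

def ratio(n):
    """Return ln(n) / sqrt(n), using the natural logarithm."""
    return math.log(n) / math.sqrt(n)

peak = math.e ** 2  # the ratio is largest here: ln(e^2) / e = 2 / e
samples = [1.5, 2, 4, peak, 16, 100, 1e4, 1e8]

for n in samples:
    print(f"n={n:>14.3f}  ln(n)/sqrt(n)={ratio(n):.6f}")

# The ratio never reaches 1 on these samples and shrinks for large n.
print("maximum ratio (2/e):", 2 / math.e)
```

With base-2 logarithms the bound does not hold everywhere (log2(n) is at least sqrt(n) between n = 4 and n = 16), but that only changes a constant factor and does not affect the big-O statement.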

Just compare the two functions:

sqrt(n) ---------- log(n)

n^(1/2) ---------- log(n)

Take the logarithm of both sides:

log( n^(1/2) ) --- log( log(n) )

(1/2) log(n) ----- log( log(n) )

It is clear that const * log(n) > log(log(n)) for sufficiently large n, so sqrt(n) grows faster than log(n).

- For better understanding with visualisations, [plot on WolframAlpha](https://www.wolframalpha.com/input/?i=plot+%7Bsqrt%28x%29%2C+x+sqrt%28x%29%2C+x*log%28x%29%2C+log%28x%29%7D) – shripal mehta Mar 20 '21 at 06:28

No, it's not equivalent.

@trincot gave one excellent explanation with example in his answer. I'm adding one more point. Your professor taught you that

any operation that halves the length of the input has an O(log(n)) complexity

It's also true that

any operation that reduces the length of the input by 2/3 has O(log3(n)) complexity

any operation that reduces the length of the input by 3/4 has O(log4(n)) complexity

any operation that reduces the length of the input by 4/5 has O(log5(n)) complexity

and so on.

This holds for any reduction of the input length by a fraction of (B-1)/B: the complexity is then O(logB(n)).

N.B.: logB(n) means the base-B logarithm of n.
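
The pattern above can be sketched in Python by counting how many times an input of size n can be cut down to 1/B of its size. The function `steps_to_one` is a hypothetical helper written for this illustration, and it assumes "reducing by (B-1)/B" means keeping 1/B of the input at each step.

```python
def steps_to_one(n, b):
    """Count integer divisions by b needed to bring n down to 1 or less."""
    steps = 0
    while n > 1:
        n //= b  # keep 1/b of the input, i.e. drop (b-1)/b of it
        steps += 1
    return steps

n = 4 ** 10  # equals 2 ** 20, so both bases divide it exactly
for b in (2, 4):
    print(f"base {b}: {steps_to_one(n, b)} steps")
```

For exact powers of B the count is exactly logB(n). Because logB(n) = log2(n) / log2(B), the counts for different bases differ only by a constant factor (here a factor of 2 between base 2 and base 4), so all of these cases fall in the same O(log n) class, and none of them is O(sqrt(n)).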

One way to approach the problem is to compare the rates of growth of O(sqrt(n)) and O(log(n)), i.e. their derivatives:

d/dn sqrt(n) = 1/(2*sqrt(n))   (1)

d/dn log(n) = 1/n   (2)

As n increases, (2) becomes smaller than (1). When n = 10,000, (1) equals 0.005 while (2) equals 0.0001.
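
The two quoted values match the derivatives of sqrt(n) and of the natural logarithm, so the sketch below reads (1) as 1/(2*sqrt(n)) and (2) as 1/n. That reading is an assumption, and the function names are invented for this example.

```python
import math

def d_sqrt(n):
    """Derivative of sqrt(n) with respect to n: 1 / (2 * sqrt(n))."""
    return 1 / (2 * math.sqrt(n))

def d_log(n):
    """Derivative of the natural log of n with respect to n: 1 / n."""
    return 1 / n

for n in (100, 10_000, 1_000_000):
    print(f"n={n}: d/dn sqrt(n)={d_sqrt(n):.6g}, d/dn log(n)={d_log(n):.6g}")
```

The slope of sqrt(n) exceeds the slope of log(n) whenever sqrt(n) > 2, that is for every n > 4, which is why the square root keeps pulling ahead.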

No, they are not equivalent; you can even prove that

O(n**k) > O(log(n, base))

for any k > 0 and base > 1 (k = 1/2 in case of sqrt).

When talking about O(f(n)) we want to investigate the behaviour for large n, and limits are a good means for that. Suppose that both big O classes are equivalent:

O(n**k) = O(log(n, base))

which means there is some finite constant C such that

n**k <= C * log(n, base)

starting from some large enough n; put in other terms (log(n, base) is not 0 for large n, and both functions are continuously differentiable), the limit

lim(n**k/log(n, base))

n->+inf

would have to be finite (at most C).

To find out the limit's value we can use L'Hospital's Rule, i.e. take the derivatives of the numerator and denominator and divide them:

lim(n**k/log(n, base)) =

lim([k*n**(k-1)]/[1/(n*ln(base))]) =

ln(base) * k * lim(n**k) = +infinity

so we can conclude that there's no constant C such that n**k < C*log(n, base) for all large n, or in other words

O(n**k) > O(log(n, base))
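
To see the "no constant C works" conclusion concretely, here is a small sketch that, for a given C, searches for an n at which n**k already exceeds C * log(n, base). The name `first_n_exceeding` and the doubling search are illustrative choices, not part of the answer. The search starts at n >= e^(1/k), past which the ratio n**k / log(n) only increases, so once the inequality holds it keeps holding.

```python
import math

def first_n_exceeding(c, k=0.5, base=2.0):
    """Find an n (by repeated doubling) with n**k > c * log(n, base)."""
    # Start beyond e**(1/k): from there on n**k / log(n) is increasing,
    # so the inequality stays true for every larger n once it holds.
    n = max(2, math.ceil(math.exp(1 / k)))
    while n ** k <= c * math.log(n, base):
        n *= 2
    return n

for c in (10, 100, 1000):
    n = first_n_exceeding(c)
    print(f"C={c}: sqrt(n) > C*log2(n) from about n={n}")
```

However large C is chosen, the loop terminates, which is the numerical counterpart of the limit being +infinity.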

- So are you positing that if matrix multiplication can be done in O(n^2 log n) time, then omega w=2? As I see it, that's a sort of contradiction in such a case. You could even say w=2.00000000000001, but it's not 2. Interesting when a problem is expressed in terms of n^k when its solution could be n^(k-1) log n – Gregory Morse Aug 17 '22 at 22:27

No, it isn't. When we are dealing with time complexity, we think of the input as very large. So let's take n = 2^18. For sqrt(n) the number of operations will be 2^9 = 512, and for log(n) it will be 18 (we consider log with base 2 here). Clearly 2^9 is much greater than 18. So we can say that O(log n) is smaller than O(sqrt n).

To prove that sqrt(n) grows faster than lg n (base 2), you can take the limit of the second over the first and show that it approaches 0 as n approaches infinity.

lim(n->inf) of (lg n/sqrt(n))

Applying L'Hôpital's Rule:

= lim(n->inf) of (2/(sqrt(n)*ln2))

Since sqrt(n) grows without bound as n increases, while 2 and ln2 are constants, this proves

lim(n->inf) of (2/(sqrt(n)*ln2)) = 0
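
A quick numerical illustration of this limit (a sketch only, with helper names invented here) evaluates both the original ratio and the expression obtained after applying the rule at n = 2^m for growing m.

```python
import math

def ratio_at_power_of_two(m):
    """Return lg(n) / sqrt(n) for n = 2**m, which equals m / 2**(m/2)."""
    n = 2 ** m
    return math.log2(n) / math.sqrt(n)

def after_lhopital(n):
    """Return 2 / (sqrt(n) * ln 2), the expression after L'Hopital's Rule."""
    return 2 / (math.sqrt(n) * math.log(2))

for m in (4, 10, 20, 40, 60):
    n = 2 ** m
    print(f"n=2^{m}: lg(n)/sqrt(n)={ratio_at_power_of_two(m):.3e}, "
          f"2/(sqrt(n)*ln2)={after_lhopital(n):.3e}")
```

Both columns shrink toward 0 as m grows, consistent with the limit computed above.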