Ok, so my current curve fitting code has a step that uses scipy.stats to determine the right distribution based on the data,

distributions = [st.laplace, st.norm, st.expon, st.dweibull, st.invweibull, st.lognorm, st.uniform]

mles = []

for distribution in distributions:

pars = distribution.fit(data)

mle = distribution.nnlf(pars, data)

mles.append(mle)

results = [(distribution.name, mle) for distribution, mle in zip(distributions, mles)]

for dist in sorted(zip(distributions, mles), key=lambda d: d[1]):

print dist

best_fit = sorted(zip(distributions, mles), key=lambda d: d[1])[0]

print 'Best fit reached using {}, MLE value: {}'.format(best_fit[0].name, best_fit[1])

print [mod[0].name for mod in sorted(zip(distributions, mles), key=lambda d: d[1])]

Where data is a list of numeric values. This is working great so far for fitting unimodal distributions, confirmed in a script that randomly generates values from random distributions and uses curve_fit to redetermine the parameters.

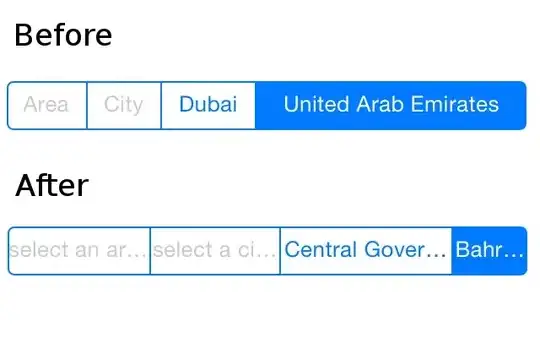

Now I would like to make the code able to handle bimodal distributions, like the example below:

Is it possible to get a MLE for a pair of models from scipy.stats in order to determine if a particular pair of distributions are a good fit for the data?, something like

distributions = [st.laplace, st.norm, st.expon, st.dweibull, st.invweibull, st.lognorm, st.uniform]

distributionPairs = [[modelA.name, modelB.name] for modelA in distributions for modelB in distributions]

and use those pairs to get an MLE value of that pair of distributions fitting the data?