I am following the Apache Map Reduce tutorial and I am at the point of assigning input and output directories. I created both directories here:

~/projects/hadoop/WordCount/input/

~/projects/hadoop/WordCount/output/

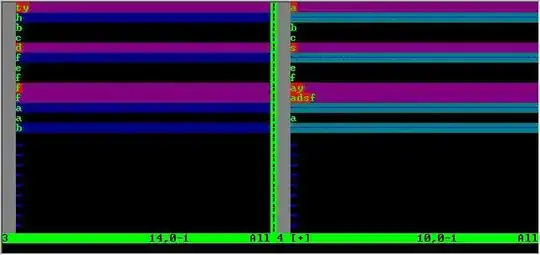

but when I run fs, the file and directory are not found. I am running as ubuntu user and it owns the directories and the input file.

Based on a proposed solution below, I then tried:

Found my hdfs directory hdfs dfs -ls / which is /tmp

I created input/ and output/ inside /tmp with mkdir

Tried to copy local .jar to.hdfs:

hadoop fs -copyFromLocal ~projects/hadoop/WordCount/wc.jar /tmp

Received:

copyFromLocal: `~projects/hadoop/WordCount/wc.jar': No such file or directory

Any troubleshooting ideas? Thanks