I have a question concerning Geoffrey Hinton's proof of convergence of the perceptron algorithm: Lecture Slides.

On slide 23 it says:

> Every time the perceptron makes a mistake, the squared distance to all of these generously feasible weight vectors is always decreased by at least the squared length of the update vector.

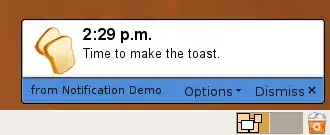

My problem is that I can make the distance reduction arbitrarily small by moving the feasible vector to the right. See here for a depiction:

So how can the distance be guaranteed to shrink by at least the squared length of the update vector (in blue) if I can make the reduction arbitrarily small?
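To make the concern concrete, here is a minimal numerical sketch. It is not taken from the slides: all numbers are made up, and it assumes that "generously feasible" means the feasible vector `w_star` satisfies `w_star . x >= |x|^2` for the label-adjusted input `x`, with the update being `w + x`. It prints both the drop in distance and the drop in squared distance as the feasible vector is moved to the right by `t`.

```python
import math

def sq_dist(a, b):
    """Squared Euclidean distance between two 2-D points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def reductions(w, x, w_star):
    """Return (drop in distance, drop in squared distance) for one update w -> w + x."""
    w_new = (w[0] + x[0], w[1] + x[1])
    before, after = sq_dist(w, w_star), sq_dist(w_new, w_star)
    return math.sqrt(before) - math.sqrt(after), before - after

# Hypothetical numbers: x is the misclassified input with its label folded in.
x = (0.0, 1.0)    # update vector, squared length 1
w = (0.0, -0.5)   # w . x = -0.5 <= 0, so the perceptron makes a mistake

for t in (1.0, 10.0, 100.0, 1000.0):
    w_star = (t, 1.0)  # assumed generously feasible: w_star . x = 1 >= |x|^2 = 1
    d, d2 = reductions(w, x, w_star)
    print(f"t={t:7.1f}  distance drop={d:.6f}  squared-distance drop={d2:.6f}")
```

In this particular setup the squared-distance drop works out to 2 for every `t`, while the plain distance drop keeps shrinking as `t` grows, so it may matter which of the two quantities the slide's statement refers to.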