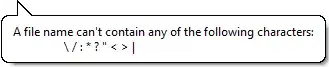

I'm trying to fit a sum of Gaussians using scikit-learn, because its `GaussianMixture` seems much more robust than `curve_fit`.

Problem: It doesn't do a great job of fitting a truncated part of even a single Gaussian peak:

    from sklearn import mixture
    import matplotlib.pyplot as plt
    import numpy as np
    from scipy.stats import norm  # stands in for matplotlib.mlab.normpdf, which newer matplotlib no longer provides

    clf = mixture.GaussianMixture(n_components=1, covariance_type='full')
    data = np.random.randn(10000)
    data = [[x] for x in data]
    clf.fit(data)

    # Flatten back to a 1-D list for plotting
    data = [item for sublist in data for item in sublist]
    rangeMin = int(np.floor(np.min(data)))
    rangeMax = int(np.ceil(np.max(data)))
    h = plt.hist(data, range=(rangeMin, rangeMax), density=True)
    x = np.linspace(rangeMin, rangeMax)
    plt.plot(x, norm.pdf(x, clf.means_, np.sqrt(clf.covariances_[0]))[0])

gives

Now changing `data = [[x] for x in data]` to `data = [[x] for x in data if x < 0]` in order to truncate the distribution returns

Any ideas how to get the truncation fitted properly?

Note: The distribution isn't necessarily truncated in the middle; anywhere between 50% and 100% of the full distribution could be left.
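To make the truncated case concrete without relying on the plots, here is a minimal sketch that fits the negative half of a standard normal and prints the fitted parameters. The fixed seed, the cut at 0, and the variable names `truncated`, `fitted_mean` and `fitted_std` are only assumptions for illustration.

```python
import numpy as np
from sklearn import mixture

rng = np.random.default_rng(0)  # fixed seed: an assumption, only for reproducibility
full = rng.standard_normal(10000)

# Keep only the negative half, i.e. the "if x < 0" truncation from the question.
truncated = full[full < 0].reshape(-1, 1)

clf = mixture.GaussianMixture(n_components=1, covariance_type='full')
clf.fit(truncated)

fitted_mean = float(clf.means_[0, 0])
fitted_std = float(np.sqrt(clf.covariances_[0, 0, 0]))

# The underlying distribution has mean 0 and standard deviation 1;
# the fit describes the truncated sample instead.
print(f"fitted mean: {fitted_mean:.3f}, fitted std: {fitted_std:.3f}")
```

The fitted mean comes out clearly negative and the fitted standard deviation clearly below 1, so the model seems to treat the truncated sample as a complete (narrower, shifted) Gaussian rather than as part of the original one.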

I would also be happy if anyone can point me to alternative packages. I've only tried curve_fit but couldn't get it to do anything useful as soon as more than two peaks are involved.