I need to run my GPU kernel (Alea library) 100 times on the same data, passing a single integer (0-99) as the parameter. I tried to implement this loop inside the kernel, but I got strange results. I had to move the loop out of the kernel and wrap it around the GPULaunch call, like this:

```csharp
var lp = new LaunchParam(GridDim, BlockDim);

for (int i = 0; i < 100; i++)
{
    GPULaunch(TestKernel, lp, Data, i);
}
```

The CPU version of the code is highly optimized and makes full use of 4 cores (100%). After reorganizing the data in memory for coalesced access, I reached 92% occupancy and 96% global load efficiency. Even so, the GPU version is only 50% faster than the CPU version. I doubt whether calling GPULaunch in a loop like this is efficient.

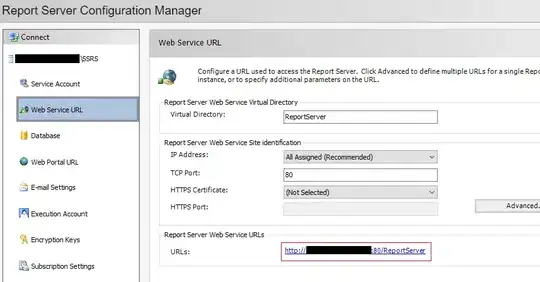

As the graph shows, I don't see repeated memory transfers in NVIDIA Visual Profiler. The data is loaded to the GPU once (not shown in the graph, and not important to me), and then there is one short memory transfer at the right end, which is the output of the 100 iterations. So my questions are:

- Does calling GPULaunch in a loop like this cause a hidden memory transfer of the same data on every call?

- If there is such an overhead, I need to move this loop into the kernel. How can I do that? I tried, but got unstable results, which makes me think this approach doesn't fit the GPU parallel programming model.
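To make the second question concrete, here is a minimal sketch of the kind of in-kernel loop I have in mind. It is plain C#, not my actual kernel and not Alea code: `TestKernelBody`, `tid`, `n`, and the multiplication are placeholders made up for illustration, and the outer `for` in `Main` only stands in for the GPU threads. It assumes each iteration writes to its own slice of the output, so that iterations do not overwrite each other.

```csharp
using System;

static class InKernelLoopSketch
{
    // Hypothetical per-thread body. `tid` stands in for the global thread
    // index that a real kernel would compute from its block and thread IDs.
    static void TestKernelBody(int tid, int n, float[] data, float[] output)
    {
        if (tid >= n) return; // guard threads past the end of the data

        for (int i = 0; i < 100; i++)
        {
            // Placeholder computation. Each (i, tid) pair owns exactly one
            // output slot, so no two iterations or threads share a write.
            output[i * n + tid] = data[tid] * i;
        }
    }

    static void Main()
    {
        const int n = 8;
        var data = new float[n];
        for (int t = 0; t < n; t++) data[t] = t + 1;

        var output = new float[100 * n];

        // Sequential stand-in for the grid of GPU threads.
        for (int tid = 0; tid < n; tid++)
            TestKernelBody(tid, n, data, output);

        // Result for parameter i = 3, thread 2.
        Console.WriteLine(output[3 * n + 2]);
    }
}
```

If the real kernel accumulates into shared locations across iterations instead, I assume that could explain the unstable results, but I have not confirmed this.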

Thanks in advance