I have the following program in Haskell:

```haskell
processDate :: String -> IO ()
processDate date = do
  ...
  let newFlattenedPropertiesWithPrice = filter (notYetInserted date existingProperties) flattenedPropertiesWithPrice
  geocodedProperties <- propertiesWithGeocoding newFlattenedPropertiesWithPrice

propertiesWithGeocoding :: [ParsedProperty] -> IO [(ParsedProperty, Maybe LatLng)]
propertiesWithGeocoding properties = do
  let addresses = fmap location properties
  let batchAddresses = chunksOf 100 addresses
  batchGeocodedLocations <- mapM geocodeAddresses batchAddresses
  let geocodedLocations = fromJust $ concat <$> sequence batchGeocodedLocations
  return (zip properties geocodedLocations)

geocodeAddresses :: [String] -> IO (Maybe [Maybe LatLng])
geocodeAddresses addresses = do
  mapQuestKey <- getEnv "MAP_QUEST_KEY"
  geocodeResponse <- openURL $ mapQuestUrl mapQuestKey addresses
  return $ geocodeResponseToResults geocodeResponse

geocodeResponseToResults :: String -> Maybe [Maybe LatLng]
geocodeResponseToResults inputResponse = latLangs
  where
    decodedResponse :: Maybe GeocodingResponse
    decodedResponse = decodeGeocodingResponse inputResponse

    latLangs = fmap (fmap geocodingResultToLatLng . results) decodedResponse

decodeGeocodingResponse :: String -> Maybe GeocodingResponse
decodeGeocodingResponse inputResponse = Data.Aeson.decode (fromString inputResponse) :: Maybe GeocodingResponse
```
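Separately from the memory question: in the code above, `fromJust $ concat <$> sequence batchGeocodedLocations` aborts the whole run if any single batch fails to decode, and `zip` silently drops properties if a batch comes back with fewer results than addresses. A minimal sketch of a total alternative, assuming each batch's results come back in the same order as its addresses. `combineBatches` is a hypothetical helper, not part of the original program, and it is generic in its element types so it does not depend on the real `LatLng`:

```haskell
-- Hypothetical helper: combine per-batch geocoding results without fromJust.
-- A batch whose response failed to decode (Nothing) becomes one Nothing per
-- address in that batch, so the output stays aligned with the input
-- addresses and can be zipped with them safely.
combineBatches :: [[address]] -> [Maybe [Maybe result]] -> [Maybe result]
combineBatches batches batchResults = concat (zipWith fill batches batchResults)
  where
    fill batch Nothing   = map (const Nothing) batch
    fill batch (Just rs) = padTo (length batch) rs

    -- Assumption: if the API returns a different number of results than
    -- addresses sent, pad with Nothing (or drop the extras) so that each
    -- batch still contributes exactly one entry per address.
    padTo n xs = take n (xs ++ repeat Nothing)
```

For example, `combineBatches [["a", "b"], ["c"]] [Just [Just 1, Nothing], Nothing]` evaluates to `[Just 1, Nothing, Nothing]`: the second batch failed, so its single address gets `Nothing` instead of crashing the run.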

It reads a list of properties (homes and apartments) from HTML files, parses them, geocodes the addresses and saves the results into an SQLite database.

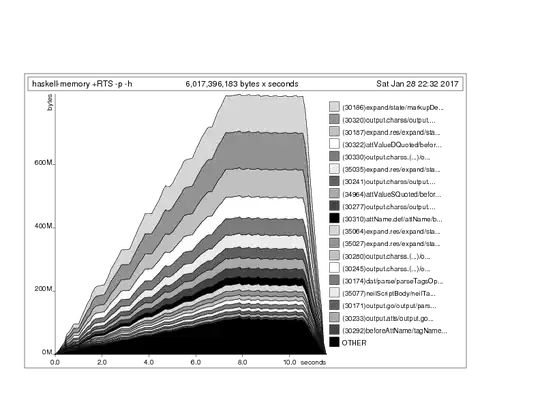

Everything works fine except for very high memory usage (around 800 MB).

By commenting out code, I have narrowed the problem down to the geocoding step.

I send 100 addresses at a time to the MapQuest batch geocoding API (https://developer.mapquest.com/documentation/geocoding-api/batch/get/).
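The batching step (`chunksOf 100 addresses` in `propertiesWithGeocoding`, presumably `chunksOf` from `Data.List.Split` in the `split` package) splits the address list into groups of at most 100, one request per group. A dependency-free sketch of that split; `batchesOf` is a hypothetical stand-in written out only to make the behaviour concrete:

```haskell
-- Hypothetical stand-in for Data.List.Split.chunksOf: split a list into
-- consecutive batches of at most n elements (the last one may be shorter).
batchesOf :: Int -> [a] -> [[a]]
batchesOf n xs
  | n <= 0    = error "batchesOf: batch size must be positive"
  | null xs   = []
  | otherwise = batch : batchesOf n rest
  where
    (batch, rest) = splitAt n xs
```

For example, `map length (batchesOf 100 [1 .. 250 :: Int])` is `[100,100,50]`, i.e. three requests for 250 addresses.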

The response for 100 addresses is quite large, so it might be one of the culprits, but 800 MB? I suspect the program holds on to all of the results until the end, which drives the memory usage so high.
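One way to test that suspicion: `mapM geocodeAddresses batchAddresses` in `IO` performs every request and builds the complete list of results before returning, and because evaluation is lazy, unevaluated results may still point at their raw response strings. A minimal sketch of the alternative, which geocodes, fully evaluates and saves one batch before sending the next request. It assumes saving can be done per batch; `Address`, `LatLng`, `geocodeBatch` and `geocodeAndSave` are hypothetical stand-ins (the real program uses `ParsedProperty`, its own `LatLng`, `geocodeAddresses` and its SQLite code), and `force` comes from the `deepseq` package that ships with GHC:

```haskell
import Control.DeepSeq (force)
import Control.Exception (evaluate)
import Control.Monad (forM_)

-- Hypothetical stand-ins for the real program's types.
type Address = String
type LatLng = (Double, Double)

-- Hypothetical stand-in for geocodeAddresses: every address "geocodes" to
-- (0, 0). The real version would call the MapQuest batch API and decode JSON.
geocodeBatch :: [Address] -> IO [Maybe LatLng]
geocodeBatch batch = return (map (const (Just (0, 0))) batch)

-- Geocode and save one batch at a time. The loop keeps no reference to
-- earlier batches' results, so (as long as `save` does not retain its
-- argument) each batch's data can be garbage-collected once it is saved.
geocodeAndSave :: ([(Address, Maybe LatLng)] -> IO ()) -> [[Address]] -> IO ()
geocodeAndSave save batches =
  forM_ batches $ \batch -> do
    locations <- geocodeBatch batch
    -- Fully evaluate this batch's results before saving. In the real
    -- program this would stop unevaluated thunks from keeping the raw
    -- response String alive.
    results <- evaluate (force (zip batch locations))
    save results
```

The `save` callback is where a per-batch SQLite insert would go, with the batches coming from e.g. `chunksOf 100 addresses`. Whether this actually lowers peak memory depends on where the data is being retained, so it is worth measuring before and after the change.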

With the geocoding part commented out, memory usage is around 30 MB, which is fine.

The full version, which reproduces the issue, is available here: https://github.com/Leonti/haskell-memory-so

I'm quite new to Haskell, so I'm not sure how to optimize it.

Any ideas?

Cheers!