I created a form in Pentaho Server using CDE. The form is a table with some input fields. On button click, an array is generated and sent as a parameter value. In the DB table I have 3 columns: alfa, beta, gamma.

```javascript
//var data = JSON.stringify(array);

var data = [
  {"alfa": "some txt", "beta": "another text", "gamma": 23},
  {"alfa": "stxt", "beta": "anoxt", "gamma": 43}
];
```
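A parameter value coming from a CDE dashboard is a string, so the array has to be serialized before it is sent and decoded again on the Kettle side. Below is a minimal round-trip sketch in plain JavaScript, assuming the parameter carries the JSON text produced by the commented `JSON.stringify` line. `toParam` and `fromParam` are hypothetical helper names, and the sketch uses `JSON.parse` instead of the `eval` used in the transformation.

```javascript
// Hypothetical helpers illustrating the round trip of the `data` parameter.
// Assumption: the dashboard sends the parameter as a JSON string.
function toParam(rows) {
  return JSON.stringify(rows);
}

function fromParam(text) {
  // JSON.parse only accepts JSON, unlike eval, which runs arbitrary code.
  const parsed = JSON.parse(text);
  if (!Array.isArray(parsed)) {
    throw new Error("expected a JSON array");
  }
  return parsed;
}

const data = [
  { alfa: "some txt", beta: "another text", gamma: 23 },
  { alfa: "stxt", beta: "anoxt", gamma: 43 },
];

const param = toParam(data);   // string sent as the parameter value
const decoded = fromParam(param);
console.log(decoded.length);   // 2
```

If the decoded array still has both elements after the round trip, the serialization itself is not what drops the second row.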

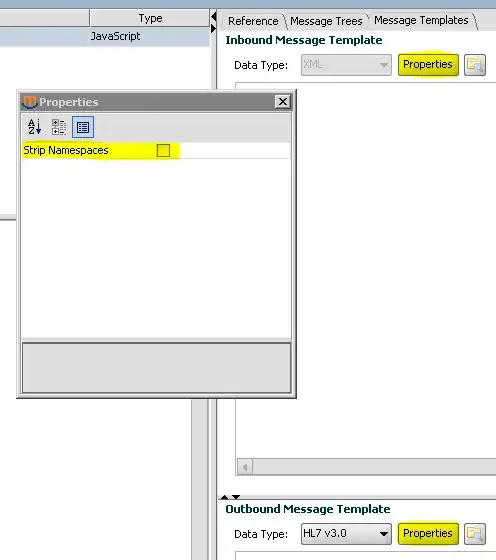

I created a Kettle transformation, and it runs as expected: both rows of the array are inserted into the database. But when I run the same Kettle transformation from Pentaho, using a CDA `kettle over kettleTransFromFile` datasource, only the first row is inserted. This is my transformation:

- Get Variable: `data` (String)
- Modified Java Script Value: `data_decode` contains the JSON array

  ```javascript
  var data_decode = eval(data.toString());
  ```

- JSON Input: alfa - `$..[0].alfa`, beta - `$..[0].beta`, gamma - `$..[0].gamma`
- tableinsert: inserts the rows into the database.
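For comparison, the intended mapping (one database row per array element) can be emulated outside Kettle. This is a plain JavaScript sketch that uses no Kettle API; it assumes the `data` variable holds the JSON text of the array, and `toRows` and `dataVariable` are hypothetical names.

```javascript
// Emulates the intended pipeline outside Kettle:
// Get Variable -> decode JSON -> one output row per array element.
// Assumption: `dataVariable` holds the JSON text passed as the `data` parameter.
function toRows(dataVariable) {
  const decoded = JSON.parse(String(dataVariable));
  // One row per array element, in column order alfa, beta, gamma.
  return decoded.map((item) => [item.alfa, item.beta, item.gamma]);
}

const dataVariable =
  '[{"alfa":"some txt","beta":"another text","gamma":23},' +
  '{"alfa":"stxt","beta":"anoxt","gamma":43}]';

const rows = toRows(dataVariable);
for (const row of rows) {
  console.log(row.join(" | "));
}
// some txt | another text | 23
// stxt | anoxt | 43
```

Because this runs outside Kettle, it only checks the decoding logic, not how CDA passes the parameter to the transformation.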

From Spoon and the Kettle command line everything works, but not from Pentaho. What is wrong?

Thank you! Geo

UPDATE

Maybe it's a misconfiguration, a bug, or a feature, but I no longer use this method. I found a simpler one: I created a `scriptable over scripting` datasource with some simple Java code inside (using BeanShell). Now it works as expected. I'll move this form into a Sparkl plugin. Thank you.

This question still remains open; maybe somebody wants to try this approach.