The function itself is fine, but the sizes of X and theta are incompatible. In general, if size(X) is [N, M], then size(theta) must be [M, 1] so that the product X * theta is defined.

So I would suggest replacing the line

theta = zeros(2, 1);

with

theta = zeros(size(X, 2), 1);

X should have as many columns as theta has elements. So in this example, with theta = zeros(2, 1), size(X) should be [133, 2].
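As a minimal sketch of that size rule, the snippet below builds a made-up 133-by-2 design matrix (an intercept column plus one feature; the data is purely illustrative, not your file) and checks the dimensions before multiplying:

```matlab
% Hypothetical design matrix: intercept column plus one feature
X = [ones(133, 1), linspace(0, 1, 133)'];

% One parameter per column of X
theta = zeros(size(X, 2), 1);

% X * theta is only defined when the inner dimensions agree
assert(size(X, 2) == size(theta, 1), ...
       'X has %d columns but theta has %d rows', size(X, 2), size(theta, 1));

predictions = X * theta;   % one prediction per row of X
disp(size(predictions))
```

Putting a check like this at the top of gradientDescent turns a silent size mismatch into an immediate, readable error.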

Also, you should move that initialization out of the function and place it before the function call.

For example, the following code does not return NaN, provided you remove the initialization of theta from inside the function.

X = rand(133, 1); % or rand(133, 2)

y = rand(133, 1);

theta = zeros(size(X, 2), 1); % initialize fitting parameters

% run gradient descent

theta = gradientDescent(X, y, theta, 0.1, 1500)

EDIT: This is in response to comments below.

Your problem is due to the gradient descent algorithm not converging. To see it yourself, plot J_history, which should never increase if the algorithm is stable. You can compute J_history by inserting the following line inside the for-loop in the function gradientDescent:

J_history(iter) = mean((X * theta - y).^2);
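Since the body of gradientDescent is not shown here, the following is only a sketch of where that line would go. It assumes the standard batch update theta = theta - (alpha / m) * X' * (X * theta - y) and uses made-up, well-scaled data, so the loop is written inline rather than as your actual function:

```matlab
% Hypothetical, well-scaled data: intercept column plus one feature on [0, 1]
m = 133;
X = [ones(m, 1), linspace(0, 1, m)'];
y = 2 + 3 * X(:, 2);

alpha = 0.1;
num_iters = 1500;
theta = zeros(size(X, 2), 1);
J_history = zeros(num_iters, 1);   % preallocate the cost record

for iter = 1:num_iters
    % Assumed batch gradient step (your function may differ)
    theta = theta - (alpha / m) * (X' * (X * theta - y));

    % Record the mean squared error after this step
    J_history(iter) = mean((X * theta - y).^2);
end

% To inspect convergence, plot on a log scale:
% semilogy(J_history); xlabel('iteration'); ylabel('J');
```

With this data and alpha the recorded cost goes down at every iteration, which is the behaviour to look for in your own run.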

In your case (i.e., with the given data file and alpha = 0.01), J_history increases exponentially. This is shown in the plot below. Note that the y-axis is on a logarithmic scale.

This is a clear sign of instability in gradient descent.

There are two ways to eliminate this problem.

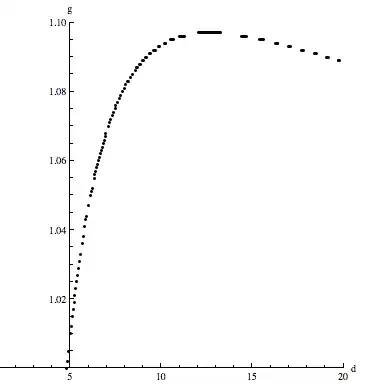

Option 1. Use a smaller alpha. alpha controls the step size of gradient descent. If it is too large, the algorithm is unstable and the cost grows without bound. If it is too small, the algorithm takes a long time to reach the optimal solution. Try something like alpha = 1e-8 and go from there. For example, alpha = 1e-8 results in the following cost history:

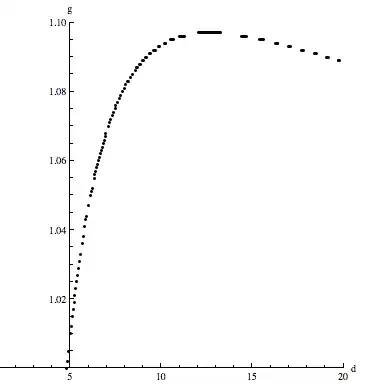
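To get a feel for how small alpha has to be, here is a rough sketch. It assumes the update theta = theta - (alpha / m) * X' * (X * theta - y); for that update on a least-squares cost, the iteration is stable only when alpha is below 2 divided by the largest eigenvalue of X' * X / m. The data is made up (a feature ranging up to 5000), not your file:

```matlab
% Hypothetical data with a large-magnitude feature
m = 133;
X = [ones(m, 1), linspace(0, 5000, m)'];
y = 2 + 3 * X(:, 2);

% Stability limit for the assumed update rule
lambda_max = max(eig((X' * X) / m));
alpha_max = 2 / lambda_max;
fprintf('largest stable alpha is about %.3g\n', alpha_max);

% Compare a too-large alpha with one safely below the limit
alphas = [0.01, alpha_max / 2];
J0 = mean(y.^2);                 % cost at the starting point theta = 0
J_final = zeros(size(alphas));

for k = 1:numel(alphas)
    theta = zeros(size(X, 2), 1);
    for iter = 1:10
        theta = theta - (alphas(k) / m) * (X' * (X * theta - y));
    end
    J_final(k) = mean((X * theta - y).^2);
end

fprintf('alpha = %.3g: cost went from %.3g to %.3g\n', [alphas; J0 * [1, 1]; J_final]);
```

On this data, alpha = 0.01 is far above the limit and the cost blows up within a few iterations, while the smaller alpha reduces it. The same eigenvalue check on your own X gives a starting point for choosing alpha.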

Option 2. Use feature scaling to reduce the magnitude of the inputs. One way of doing this is called standardization: subtract the mean of each feature and divide by its standard deviation. The following is an example of using standardization and the resulting cost function:

data=xlsread('v & t.xlsx');

data(:,1) = (data(:,1)-mean(data(:,1)))/std(data(:,1));