I am trying to fit a second-order function with a zero intercept to two data sets, `x` and `y` (listed at the end of this post). Right now, when I plot the fit, the curve has a y-intercept greater than 0. The points I am fitting were generated by the function:

`y**2 = 14.29566 * np.pi * x`

or, equivalently,

`y = np.sqrt(14.29566 * np.pi * x)`

where 14.29566 ≈ 4D with D = 3.57391553 (that is, y² = 4πDx). My fitting routine is:

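```python
import numpy as np
import matplotlib.pyplot as plt

z = np.polyfit(x, y, 2)  # coefficients [a, b, c] of a*x**2 + b*x + c (c is the intercept)
p = np.poly1d(z)  # callable polynomial built from those coefficients
xp = np.linspace(0, catalog.tlimit, 10)  # x values at which to evaluate the fit

plt.scatter(x, y)
plt.plot(xp, p(xp), '-')
plt.show()
```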
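A minimal sketch of one way to drop the intercept, assuming only NumPy: `np.polyfit` always includes a constant term, so instead build a design matrix with just the `x**2` and `x` columns (no column of ones) and solve it with `np.linalg.lstsq`. The data are copied from the end of this post, and `catalog.tlimit` is replaced by `x.max()` because `catalog` is not defined in the question.

```python
import numpy as np

# Data from the question
x = np.array([0., 0.00325492, 0.00650985, 0.00976477, 0.01301969, 0.01627462,
              0.01952954, 0.02278447, 0.02603939, 0.02929431, 0.03254924,
              0.03580416, 0.03905908, 0.04231401])
y = np.array([0., 0.38233801, 0.5407076, 0.66222886, 0.76467602, 0.85493378,
              0.93653303, 1.01157129, 1.0814152, 1.14701403, 1.20905895,
              1.26807172, 1.32445772, 1.3785393])

# Design matrix with no column of ones, so the model is y = a*x**2 + b*x
A = np.column_stack([x**2, x])
(a, b), *_ = np.linalg.lstsq(A, y, rcond=None)

# Wrap as a poly1d with the constant fixed at 0 so it plots like the original p
p = np.poly1d([a, b, 0.0])
xp = np.linspace(0, x.max(), 10)
```

`plt.plot(xp, p(xp), '-')` then works as before. Even with the intercept forced to 0, a parabola in `x` can only approximate a square-root curve, so some systematic misfit is expected.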

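I have also tried using statsmodels OLS (`sm.OLS`):

```python
import statsmodels.api as sm

mod_ols = sm.OLS(y, x)  # regress y on x alone; sm.OLS does not add a constant column
res_ols = mod_ols.fit()
```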

but I don't understand how to get coefficients for a second-order function rather than a linear one, or how to force the y-intercept to 0. I found a similar post about forcing a zero y-intercept in a linear fit, but I couldn't work out how to do the same for a second-order fit.

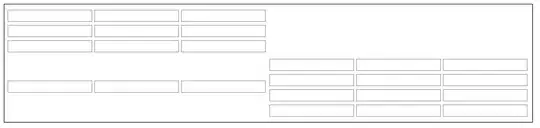

Plot right now:

Data:

```python
x = [0., 0.00325492, 0.00650985, 0.00976477, 0.01301969, 0.01627462, 0.01952954, 0.02278447,
     0.02603939, 0.02929431, 0.03254924, 0.03580416, 0.03905908, 0.04231401]

y = [0., 0.38233801, 0.5407076, 0.66222886, 0.76467602, 0.85493378, 0.93653303, 1.01157129,
     1.0814152, 1.14701403, 1.20905895, 1.26807172, 1.32445772, 1.3785393]
```
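Because the points follow y² = 14.29566·π·x, a different approach (a swapped-in technique, not a quadratic fit) is to fit that form directly: regress `y**2` on `x` with no intercept. The least-squares slope through the origin is k = Σ(x·y²) / Σ(x²), and if the constant is 4πD, then D = k / (4π). The names `k`, `D`, `xp`, `yp` are introduced here for illustration.

```python
import numpy as np

x = np.array([0., 0.00325492, 0.00650985, 0.00976477, 0.01301969, 0.01627462,
              0.01952954, 0.02278447, 0.02603939, 0.02929431, 0.03254924,
              0.03580416, 0.03905908, 0.04231401])
y = np.array([0., 0.38233801, 0.5407076, 0.66222886, 0.76467602, 0.85493378,
              0.93653303, 1.01157129, 1.0814152, 1.14701403, 1.20905895,
              1.26807172, 1.32445772, 1.3785393])

# Least-squares slope of y**2 = k*x through the origin
k = np.sum(x * y**2) / np.sum(x**2)
D = k / (4 * np.pi)  # assumes the model y**2 = 4*pi*D*x

# Fitted curve; it passes through (0, 0) by construction
xp = np.linspace(0, x.max(), 50)
yp = np.sqrt(k * xp)
```

Fitting in y² space weights the residuals differently from fitting `y` directly, which matters mainly when the data are noisy.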