I've read on Wikipedia (https://en.wikipedia.org/wiki/Q-learning):

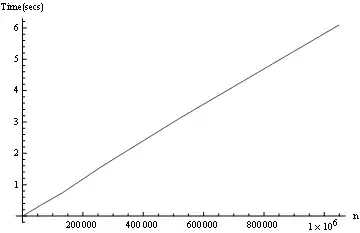

> Q-learning may suffer from slow rate of convergence, especially when the discount factor γ is close to one.[16] Speedy Q-learning, a new variant of Q-learning algorithm, deals with this problem and achieves a slightly better rate of convergence than model-based methods such as value iteration

So I wanted to try speedy Q-learning and see how much better it is.

The only source on it I could find online is this paper: https://papers.nips.cc/paper/4251-speedy-q-learning.pdf

That's the algorithm they suggest.
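As I read Algorithm 1 in the paper, each iteration $k$ updates every state-action pair $(x, a)$ as follows, with step size $\alpha_k = 1/(k+1)$, initialization $Q_{-1} = Q_0$, and a single sampled next state $y_k \sim P(\cdot \mid x, a)$ shared by both targets. $\mathcal{T}_k$ is the one-sample (empirical) Bellman operator:

$$
\begin{aligned}
\mathcal{T}_k Q(x,a) &= r(x,a) + \gamma \max_{b} Q(y_k, b),\\
Q_{k+1}(x,a) &= Q_k(x,a) + \alpha_k\bigl(\mathcal{T}_k Q_{k-1}(x,a) - Q_k(x,a)\bigr) + (1-\alpha_k)\bigl(\mathcal{T}_k Q_k(x,a) - \mathcal{T}_k Q_{k-1}(x,a)\bigr).
\end{aligned}
$$

So $\mathcal{T}_k Q_k$ is the usual Q-learning target computed with the current table, and $\mathcal{T}_k Q_{k-1}$ is the same target computed with the previous iterate.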

Now, I don't understand it. What exactly is T_k Q_k? Am I supposed to keep another table of Q-values? Is there a clearer explanation than this?
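A minimal sketch of the synchronous algorithm, as I read Algorithm 1 of the paper, may make `T_k Q_k` concrete. Both `T_k Q_k` and `T_k Q_{k-1}` are one-sample Bellman targets built from the same sampled next state, so the method keeps exactly two tables: the current iterate and the previous one. The MDP format (`P[s][a]` as next-state probabilities, `R[s][a]` as rewards), the function name `speedy_q_learning`, and the all-zero `Q_0` are my own assumptions for illustration.

```python
import random


def speedy_q_learning(P, R, gamma, num_iterations, seed=0):
    """Synchronous speedy Q-learning (sketch of the paper's Algorithm 1, as I read it).

    Assumed format: P[s][a] is a list of next-state probabilities and
    R[s][a] the reward for taking action a in state s. Q_0 is all zeros.
    """
    rng = random.Random(seed)
    n_states, n_actions = len(R), len(R[0])
    q_curr = [[0.0] * n_actions for _ in range(n_states)]  # Q_k
    q_prev = [row[:] for row in q_curr]                     # Q_{k-1}, with Q_{-1} = Q_0

    for k in range(num_iterations):
        alpha = 1.0 / (k + 1)
        q_next = [[0.0] * n_actions for _ in range(n_states)]  # Q_{k+1}
        for s in range(n_states):
            for a in range(n_actions):
                # Draw one next state y_k ~ P(.|s, a); both targets reuse it.
                y = rng.choices(range(n_states), weights=P[s][a])[0]
                t_prev = R[s][a] + gamma * max(q_prev[y])  # T_k Q_{k-1}(s, a)
                t_curr = R[s][a] + gamma * max(q_curr[y])  # T_k Q_k(s, a)
                q_next[s][a] = (q_curr[s][a]
                                + alpha * (t_prev - q_curr[s][a])
                                + (1 - alpha) * (t_curr - t_prev))
        # Shift the window: the current table becomes the previous one.
        q_prev, q_curr = q_curr, q_next
    return q_curr
```

With a genuinely stochastic `P`, every sweep draws fresh next-state samples; note that only `q_prev` and `q_curr` are ever stored, never a longer history.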

```
Q[previousState][action] = Q[previousState][action] + learningRate * (reward + discountFactor * maxNextExpectedReward - Q[previousState][action]);
```

This is my current Q-learning update; I want to replace it with speedy Q-learning.
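The paper's Algorithm 1 is synchronous (it updates every state-action pair each iteration), whereas the update above processes one observed transition at a time. The following is a hypothetical online adaptation, not something the paper spells out. It assumes a second table `q_prev` holding each entry's value from before its most recent update (standing in for `Q_{k-1}`) and a per-pair visit counter `visits` driving `alpha = 1/(k+1)`. It also needs the landing state `next_state` rather than only `maxNextExpectedReward`, because the target must be computed from both tables. All of these names are my own.

```python
def speedy_q_update(q, q_prev, visits, state, action, reward, next_state, discount_factor):
    """Apply one online speedy-Q-learning step for a single observed transition.

    Hypothetical adaptation of the paper's synchronous Algorithm 1:
    - q is the current table (Q_k), i.e. the existing Q;
    - q_prev[s][a] holds the value q[s][a] had before its most recent update
      and stands in for Q_{k-1};
    - visits[s][a] counts updates of (s, a), giving alpha = 1 / (k + 1).
    """
    k = visits[state][action]
    alpha = 1.0 / (k + 1)
    # Both targets use the same observed next state.
    t_prev = reward + discount_factor * max(q_prev[next_state])  # T_k Q_{k-1}(s, a)
    t_curr = reward + discount_factor * max(q[next_state])       # T_k Q_k(s, a), the usual target
    old_value = q[state][action]
    new_value = (old_value
                 + alpha * (t_prev - old_value)
                 + (1 - alpha) * (t_curr - t_prev))
    q_prev[state][action] = old_value  # becomes Q_{k-1}(s, a) on the next visit
    q[state][action] = new_value
    visits[state][action] = k + 1
    return new_value
```

To use it, allocate `q_prev` (a copy of the initial `Q`) and `visits` (all zeros) once, then call `speedy_q_update(...)` where the single-line update currently sits. Note that `learningRate` disappears: the step size is fixed by the visit count.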