I'm building a web app that uses EvaporateJS to upload large files to Amazon S3 using Multipart Uploads. I noticed that every time a new chunk starts, the browser freezes for ~2 seconds. I want the user to be able to keep using my app while the upload is in progress, and this freezing makes for a bad experience.

I used Chrome's Timeline to look into what was causing this and found that it was SparkMD5's hashing. So I've moved the entire upload process into a Worker, which I thought would fix the issue.

Well, the issue is now fixed in Edge and Firefox, but Chrome still has the exact same problem.

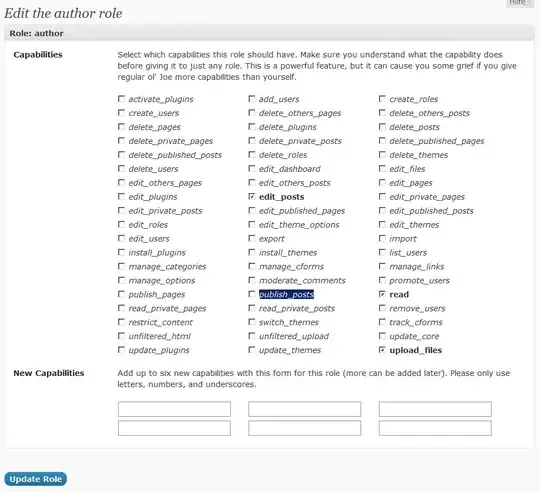

Here's a screenshot of my Timeline:

As you can see, during the freezes my main thread is doing basically nothing, with <8ms of JavaScript running during that time. All the work is occurring in my Worker thread, and even that is only running for ~600ms or so, not the 1386ms that my frame takes.

I'm really not sure what's causing the issue. Are there any gotchas with Workers that I should be aware of?

Here's the code for my Worker:

    var window = self; // For Worker-unaware scripts

    // Shim to make Evaporate work in a Worker
    var document = {
        createElement: function() {
            var href = undefined;
            var elm = {
                set href(url) {
                    var obj = new URL(url);
                    elm.protocol = obj.protocol;
                    elm.hostname = obj.hostname;
                    elm.pathname = obj.pathname;
                    elm.port = obj.port;
                    elm.search = obj.search;
                    elm.hash = obj.hash;
                    elm.host = obj.host;
                    href = url;
                },
                get href() {
                    return href;
                },
                protocol: undefined,
                hostname: undefined,
                pathname: undefined,
                port: undefined,
                search: undefined,
                hash: undefined,
                host: undefined
            };
            return elm;
        }
    };

    importScripts("/lib/sha256/sha256.min.js");
    importScripts("/lib/spark-md5/spark-md5.min.js");
    importScripts("/lib/url-parse/url-parse.js");
    importScripts("/lib/xmldom/xmldom.js");
    importScripts("/lib/evaporate/evaporate.js");

    DOMParser = self.xmldom.DOMParser;

    var defaultConfig = {
        computeContentMd5: true,
        cryptoMd5Method: function (data) { return btoa(SparkMD5.ArrayBuffer.hash(data, true)); },
        cryptoHexEncodedHash256: sha256,
        awsSignatureVersion: "4",
        awsRegion: undefined,
        aws_url: "https://s3-ap-southeast-2.amazonaws.com",
        aws_key: undefined,
        // Ask the parent thread for a signature and resolve once it replies
        customAuthMethod: function(signParams, signHeaders, stringToSign, timestamp, awsRequest) {
            return new Promise(function(resolve, reject) {
                var signingRequestId = currentSigningRequestId++;
                postMessage(["signingRequest", signingRequestId, signParams.videoId, timestamp, awsRequest.signer.canonicalRequest()]);
                queuedSigningRequests[signingRequestId] = function(signature) {
                    queuedSigningRequests[signingRequestId] = undefined;
                    if(signature) {
                        resolve(signature);
                    } else {
                        reject();
                    }
                };
            });
        },
        //logging: false,
        bucket: undefined,
        allowS3ExistenceOptimization: false,
        maxConcurrentParts: 5
    };

    var currentSigningRequestId = 0;
    var queuedSigningRequests = [];
    var e = undefined; // The Evaporate instance, set once "init" completes
    var filekey = undefined;

    // The handler's parameter is named "event" so it doesn't shadow the Evaporate instance "e"
    onmessage = function(event) {
        var messageType = event.data[0];
        switch(messageType) {
            case "init":
                // Merge the defaults with the config supplied by the parent thread
                var globalConfig = {};
                for(var k in defaultConfig) {
                    globalConfig[k] = defaultConfig[k];
                }
                for(var k in event.data[1]) {
                    globalConfig[k] = event.data[1][k];
                }
                var uploadConfig = event.data[2];
                Evaporate.create(globalConfig).then(function(evaporate) {
                    // Assign the worker-level variable; a local "var e" here would leave it undefined
                    e = evaporate;
                    filekey = globalConfig.bucket + "/" + uploadConfig.name;
                    uploadConfig.progress = function(p, stats) {
                        postMessage(["progress", p, stats]);
                    };
                    uploadConfig.complete = function(xhr, awsObjectKey, stats) {
                        postMessage(["complete", xhr, awsObjectKey, stats]);
                    };
                    uploadConfig.info = function(msg) {
                        postMessage(["info", msg]);
                    };
                    uploadConfig.warn = function(msg) {
                        postMessage(["warn", msg]);
                    };
                    uploadConfig.error = function(msg) {
                        postMessage(["error", msg]);
                    };
                    e.add(uploadConfig);
                });
                break;
            case "pause":
                e.pause(filekey);
                break;
            case "resume":
                e.resume(filekey);
                break;
            case "cancel":
                e.cancel(filekey);
                break;
            case "signature":
                var signingRequestId = event.data[1];
                var signature = event.data[2];
                queuedSigningRequests[signingRequestId](signature);
                break;
        }
    };

Note that the Worker relies on the calling thread to provide the AWS public key, bucket name, region, object key and the input File object, all of which are passed in the 'init' message. When it needs something signed, it sends a 'signingRequest' message to the parent thread, which is expected to reply with a 'signature' message once the signature has been fetched from my API's signing endpoint.
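
For reference, the parent-thread side of that signing handshake can be sketched roughly as follows. This is a minimal illustration rather than my exact code: `attachSigningHandler` and `fetchSignature` are hypothetical names, and `fetchSignature` is assumed to call the signing endpoint and return a promise for the signature string. The message shapes match the ones the Worker above sends and expects.

```javascript
// Hypothetical main-thread helper: answers the Worker's "signingRequest"
// messages with a "signature" message carrying the same request id.
// `fetchSignature(videoId, timestamp, canonicalRequest)` is an assumed
// function that resolves with the signature string.
function attachSigningHandler(worker, fetchSignature) {
    worker.addEventListener("message", function (event) {
        var data = event.data;
        if (data[0] !== "signingRequest") {
            return; // progress, complete, info, warn and error are handled elsewhere
        }
        var signingRequestId = data[1];
        var videoId = data[2];
        var timestamp = data[3];
        var canonicalRequest = data[4];

        Promise.resolve()
            .then(function () {
                return fetchSignature(videoId, timestamp, canonicalRequest);
            })
            .then(function (signature) {
                worker.postMessage(["signature", signingRequestId, signature]);
            }, function () {
                // A falsy signature makes the Worker reject the pending request.
                worker.postMessage(["signature", signingRequestId, null]);
            });
    });
}

// Usage in the page (the Worker path and config variables are assumptions):
//   var worker = new Worker("/js/upload-worker.js");
//   attachSigningHandler(worker, mySigningFunction);
//   worker.postMessage(["init", globalConfig, uploadConfig]);
```

Because the request id is echoed back unchanged, several signing requests can be in flight at once without the replies getting mixed up.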