The math on RNGs is solid, and these days most popular implementations are too. As such, your conjecture that

> is generated by a formula, therefore they are correlated.

is incorrect.
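You can check this yourself. R's default generator is exactly such a formula, the Mersenne-Twister recurrence, yet successive draws show no detectable linear dependence. The sketch below is not part of the original simulation. The seed `123` and the sample size `1e5` are arbitrary choices for illustration.

```r
# R's default generator is a deterministic recurrence ("a formula"):
kinds <- RNGkind()              # kind, normal.kind, sample.kind currently in use
print(kinds[1])                 # "Mersenne-Twister" unless changed in this session

set.seed(123)                   # arbitrary seed, for reproducibility only
u <- runif(1e5)

# Lag-1 correlation: each draw against the next draw the recurrence produced
lag1 <- cor(u[-length(u)], u[-1])

# Under independence its sampling SD is roughly 1/sqrt(1e5), about 0.003
print(c(lag1 = lag1, approx_sd = 1 / sqrt(length(u))))

# acf() looks at several lags at once; element 1 is lag 0 (always 1), so skip it
a <- acf(u, lag.max = 5, plot = FALSE)
print(round(a$acf[2:6], 4))
```

All of these lag correlations should land within a few multiples of about 0.003 of zero, even though every value was computed from the one before it.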

But if you really, truly, deeply think that way, there is an out: hardware random number generators. The site random.org, which derives its numbers from atmospheric noise, has been providing hardware RNG draws "as a service" for a long time. Here is an example in R (which I use more), though there is also an official Python client:

```
R> library(random)
R> randomNumbers(min=1, max=20000)   # your range, default count (n = 100)
         V1    V2    V3    V4    V5
 [1,]   532 19452  5203 13646  5462
 [2,]  4611 10814  3694 12731   566
 [3,] 11884 19897  1601 10652   791
 [4,] 17427  9524  7522  1051  9432
 [5,]  5426  5079  2232  2517  4883
 [6,] 13807  9194 19980  1706  9205
 [7,] 13043 16250 12827  2161 10789
 [8,]  7060  6008  9110  8388  1102
 [9,] 12042 19342  2001 17780  3100
[10,] 11690  4986  4389 14187 17191
[11,] 19574 13615  3129 17176  5590
[12,] 11104  5361  8000  5260   343
[13,]  7518  7484  7359 16840 12213
[14,] 14914  1991 19952 10127 14981
[15,] 13528 18602 10182  1075 16480
[16,]  9631 17160 19808 11662 10514
[17,]  4827 13960 17003   864 11159
[18,]  8939  7095 16102 19836 15490
[19,]  8321  6007  1787  6113 17948
[20,]  9751  7060  8355 19065 15180
R>
```
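For comparison without a network call, base R's `sample.int()` produces output of the same shape from the pseudo-random generator. This is only a sketch: the seed is arbitrary, and the binned chi-squared test is just one crude uniformity check. The last three lines should work the same on the matrix that `randomNumbers()` returns.

```r
# Pseudo-random counterpart of the random.org call: 100 integers on 1..20000,
# arranged as 20 rows x 5 columns like the randomNumbers() output.
set.seed(42)                      # arbitrary seed, for reproducibility only
draws <- matrix(sample.int(20000, size = 100, replace = TRUE), ncol = 5)
head(draws)

# Crude uniformity check: 10 equal-width bins of 2000, about 10 draws expected per bin
bins <- cut(as.vector(draws), breaks = seq(0, 20000, by = 2000))
tab <- table(bins)
print(tab)
chisq.test(tab)                   # a very small p-value would suggest non-uniformity
```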

Edit: The OP seems unconvinced, so here is a quick reproducible simulation (again in R, because that is what I use):

```
R> set.seed(42) # set seed for RNG
R> mean(replicate(10, cor(runif(100), runif(100))))
[1] -0.0358398
R> mean(replicate(100, cor(runif(100), runif(100))))
[1] 0.0191165
R> mean(replicate(1000, cor(runif(100), runif(100))))
[1] -0.00117392
R>
```

So as we go from 10 to 100 to 1000 replications, each correlating two fresh samples of just 100 U(0,1) draws, the average correlation estimate goes to zero.
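As a rough worked check on the size of these numbers, assume the standard large-sample approximation: for two independent variables, the correlation of n pairs has a standard deviation of about 1/sqrt(n). At n = 100 this is an approximation, not an exact value. The average of m independent replications should then shrink like 1/sqrt(m n):

$$
\operatorname{SD}(r) \approx \frac{1}{\sqrt{n}}, \qquad
\operatorname{SD}(\bar r_m) \approx \frac{1}{\sqrt{m\,n}}, \qquad n = 100
$$

$$
\begin{aligned}
m = 10: &\quad \tfrac{1}{\sqrt{10^3}} \approx 0.0316, & |\bar r_{10}| = 0.0358 &\approx 1.1\ \text{SE}\\
m = 100: &\quad \tfrac{1}{\sqrt{10^4}} = 0.0100, & |\bar r_{100}| = 0.0191 &\approx 1.9\ \text{SE}\\
m = 1000: &\quad \tfrac{1}{\sqrt{10^5}} \approx 0.0032, & |\bar r_{1000}| = 0.0012 &\approx 0.4\ \text{SE}
\end{aligned}
$$

Each average sits within about two standard errors of zero, which is what independent streams should produce.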

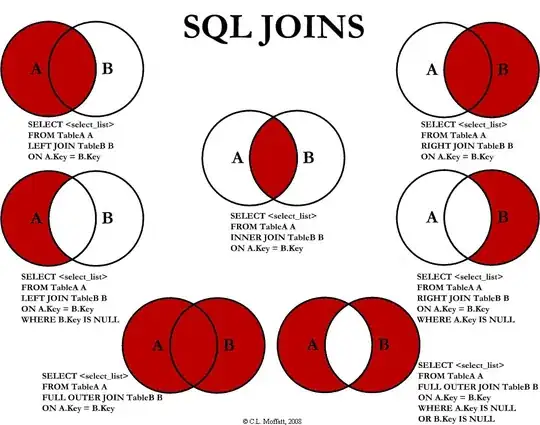

We can make this a little nicer with a plot, recovering the same data and some more:

```
R> set.seed(42)
R> x <- 10^(1:5) # powers of ten from 1 to 5, driving 10^1 to 10^5 sims
R> y <- sapply(x, function(n) mean(replicate(n, cor(runif(100), runif(100)))))
R> y # same first numbers as seed reset to same start
[1] -0.035839756  0.019116460 -0.001173916 -0.000588006 -0.000290494
R> plot(x, y, type='b', main="Illustration of convergence towards zero", log="x")
R> abline(h=0, col="grey", lty="dotted")
```
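To judge whether the plotted averages are "close enough" to zero, here is a sketch that redraws the same plot with a band of plus or minus two approximate standard errors. It assumes the large-sample approximation that one correlation over n independent pairs has a standard deviation of about 1/sqrt(n), so an average of m of them has about 1/sqrt(m n). It stops at 10^4 replications to keep the run short.

```r
# Sketch: convergence plot with an approximate +/- 2 SE band overlaid.
# Assumption: SD of the mean of m correlations (n pairs each) is about 1/sqrt(m * n).
set.seed(42)
n <- 100                      # pairs per correlation, as in the answer
m <- 10^(1:4)                 # replication counts (stopping at 10^4 to keep it quick)

# Average correlation between two independent U(0,1) samples, for each m
y <- sapply(m, function(k) mean(replicate(k, cor(runif(n), runif(n)))))

se <- 1 / sqrt(m * n)         # approximate standard error of each average

plot(m, y, type = "b", log = "x", ylim = range(c(y, 2 * se, -2 * se)),
     main = "Average correlation with +/- 2 SE band",
     xlab = "replications", ylab = "mean correlation")
lines(m, 2 * se, lty = "dashed")
lines(m, -2 * se, lty = "dashed")
abline(h = 0, col = "grey", lty = "dotted")

# How many standard errors each average sits from zero
print(round(y / se, 2))
```

Points that stay inside the dashed band while the band itself narrows as m grows are what zero true correlation looks like.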