I have a Flask project that runs a Scrapy spider through a subprocess call:

```python
import os
import subprocess
import uuid


class Utilities(object):

    @staticmethod
    def scrape(inputs):
        job_id = str(uuid.uuid4())
        project_folder = os.path.abspath(os.path.dirname(__file__))
        # Start the spider as a separate `scrapy` process; this call blocks until the crawl finishes
        subprocess.call(['scrapy', 'crawl', "ExampleCrawler", "-a", "inputs=" + str(inputs), "-s", "JOB_ID=" + job_id],
                        cwd="%s/scraper" % project_folder)
        return job_id
```

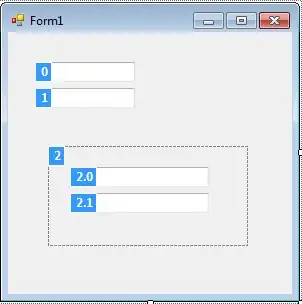

Even though I have enabled 'Attach to subprocess automatically while debugging' in the project's Python debugger settings, breakpoints inside the spider are never hit. The first breakpoint that is hit again is the one on `return job_id`.
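One variant I could try, assuming the debugger only attaches to child processes it recognizes as a Python interpreter launch (an assumption, not something I have confirmed; the bare `scrapy` entry point may not be recognized, for example on Windows where it is typically an `.exe` wrapper), is to start Scrapy through the current interpreter as `python -m scrapy`. A minimal sketch; `build_crawl_command` is a hypothetical helper and the remaining arguments mirror the original call:

```python
import sys
import uuid


def build_crawl_command(inputs, job_id):
    """Build the argv for running ExampleCrawler via the current interpreter.

    Assumption: launching `sys.executable -m scrapy` instead of the bare
    `scrapy` script makes the child an explicit Python process, which the
    debugger's subprocess attach may be more likely to pick up.
    """
    return [
        sys.executable, "-m", "scrapy",
        "crawl", "ExampleCrawler",
        "-a", "inputs=" + str(inputs),
        "-s", "JOB_ID=" + job_id,
    ]


if __name__ == "__main__":
    cmd = build_crawl_command({"q": "test"}, str(uuid.uuid4()))
    print(cmd)
    # Inside Utilities.scrape this list would replace the original one:
    # subprocess.call(cmd, cwd="%s/scraper" % project_folder)
```

The `cwd` stays the same, so Scrapy should still find the project's `scrapy.cfg`.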

Here is part of the spider code where I expect the breakpoints to be hit:

```python
from scrapy.http import FormRequest
from scrapy.spiders import Spider
from scrapy.loader import ItemLoader

from Handelsregister_Scraper.scraper.items import Product

import re


class ExampleCrawler(Spider):
    name = "ExampleCrawler"

    def __init__(self, inputs='', *args, **kwargs):
        super(ExampleCrawler, self).__init__(*args, **kwargs)
        self.start_urls = ['https://www.example-link.com']
        self.inputs = inputs

    def parse(self, response):
        yield FormRequest(self.start_urls[0], callback=self.parse_list_elements, formdata=self.inputs)
```
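Separately from the breakpoint problem: arguments passed with `-a` reach the spider's `__init__` as strings, so `self.inputs` holds `str(inputs)`, while `FormRequest`'s `formdata` expects a dict (or an iterable of key/value pairs). A minimal sketch, assuming `inputs` is a flat dict of string form fields; `encode_inputs` and `decode_inputs` are hypothetical helpers that round-trip it through JSON:

```python
import json


def encode_inputs(inputs):
    """Flask side: serialize the form fields so they survive the command line."""
    return json.dumps(inputs)


def decode_inputs(raw):
    """Spider side: turn the -a string back into a dict usable as formdata."""
    return json.loads(raw) if raw else {}


if __name__ == "__main__":
    raw = encode_inputs({"company": "Example GmbH", "city": "Berlin"})
    print(raw)                 # the string the spider receives as `inputs`
    print(decode_inputs(raw))  # a dict again
```

With this, the Flask side would pass `"inputs=" + encode_inputs(inputs)`, and `__init__` would set `self.inputs = decode_inputs(inputs)`.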

The only solution I could find is enabling that option, which I have already done.

Any suggestions on how to get breakpoints inside the spider to work?