I have a machine learning problem that I'm trying to solve. I'm using a Gaussian HMM (from hmmlearn) with 5 states, modelling extreme negative, negative, neutral, positive and extreme positive in the sequence. I have set up the model in the gist below

https://gist.github.com/stevenwong/cb539efb3f5a84c8d721378940fa6c4c

import numpy as np

import pandas as pd

from hmmlearn.hmm import GaussianHMM

x = pd.read_csv('data.csv')

x = np.atleast_2d(x.values)

h = GaussianHMM(n_components=5, n_iter=10, verbose=True, covariance_type="full")

h = h.fit(x)

y = h.predict(x)

The problem is that most of the estimated states converges to the middle, even when I can visibly see that there are spades of positive values and spades of negative values but they are all lumped together. Any idea how I can get it to better fit the data?

EDIT 1:

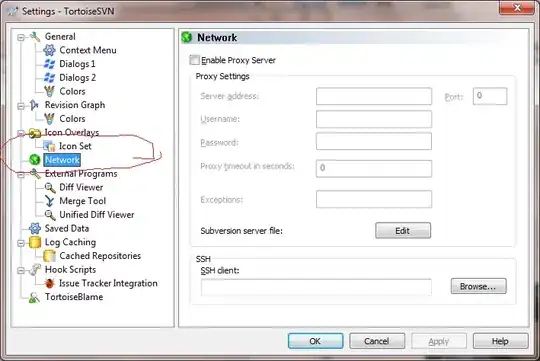

Here is the transition matrix. I believe the way it's read in hmmlearn is across the row (i.e., row[0] means prob of transiting to itself, state 1, 2, 3...)

In [3]: h.transmat_

Out[3]:

array([[ 0.19077231, 0.11117929, 0.24660208, 0.20051377, 0.25093255],

[ 0.12289066, 0.17658589, 0.24874935, 0.24655888, 0.20521522],

[ 0.15713787, 0.13912972, 0.25004413, 0.22287976, 0.23080852],

[ 0.14199694, 0.15423031, 0.25024992, 0.2332739 , 0.22024893],

[ 0.17321093, 0.12500688, 0.24880728, 0.21205912, 0.2409158 ]])

If I set all the transition probs to 0.2, it looks like this (if I do average by state the separation is worse).