I am using Jsoup.jar to get the keywords from the meta tags of several websites using MapReduce. The list of websites is stored in a .txt file. However, when I compile the Java file in the terminal, it says that package org.jsoup.Jsoup does not exist. I made sure that the jar is in the same folder as the Java file.
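One way to check whether this is a classpath problem (an assumption here; the error message alone doesn't prove it): when no `-cp`/`-classpath` option or `CLASSPATH` variable is set, the user class path defaults to the current directory. That default covers `.class` files in the directory, but a `.jar` file sitting there is not searched unless it is named explicitly, e.g. `javac -cp Jsoup.jar:. MyJob.java` (`MyJob.java` is a hypothetical file name; use `;` instead of `:` on Windows). The small program below is a minimal diagnostic sketch. Its class name `ClasspathCheck` is hypothetical. It prints the classpath it was launched with and reports whether `org.jsoup.Jsoup` can be loaded from it.

```java
public class ClasspathCheck {

    // Returns true if the named class can be found by this program's class loader.
    // initialize=false: only locate and load the class, don't run its static initializers.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // The class path this JVM was started with (from -cp, CLASSPATH, or the "." default).
        System.out.println("java.class.path = " + System.getProperty("java.class.path"));
        // Whether the jsoup entry point class is visible on that class path.
        System.out.println("org.jsoup.Jsoup found: " + isOnClasspath("org.jsoup.Jsoup"));
    }
}
```

Running it once as `java ClasspathCheck` and once as `java -cp Jsoup.jar:. ClasspathCheck` should show whether listing the jar explicitly is what makes jsoup visible.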

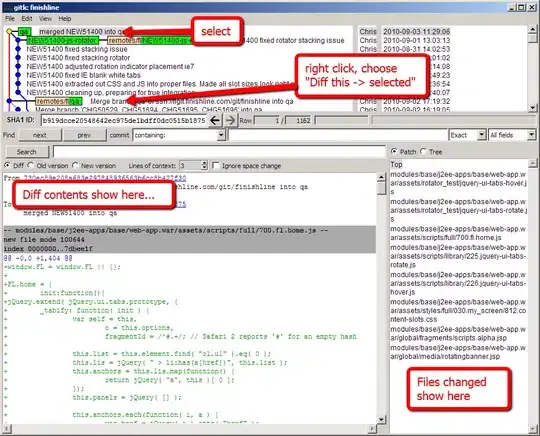

Screenshot of error: