I am applying an LSH algorithm in Spark 1.4 (https://github.com/soundcloud/cosine-lsh-join-spark/tree/master/src/main/scala/com/soundcloud/lsh) to a 4 GB text file in LIBSVM format (https://www.csie.ntu.edu.tw/~cjlin/libsvm/) to find duplicates. I first ran my Scala script on a server using a single executor with 36 cores, and got my results in 1.5 hours.

To get my results faster, I tried running my code on a Hadoop cluster via YARN on an HPC system with 3 nodes, where each node has 20 cores and 64 GB of memory. Since I do not have much experience running code on HPC systems, I followed the suggestions given here: https://blog.cloudera.com/blog/2015/03/how-to-tune-your-apache-spark-jobs-part-2/

As a result, I submitted the Spark job as follows:

spark-submit --class com.soundcloud.lsh.MainCerebro --master yarn-cluster --num-executors 11 --executor-memory 19G --executor-cores 5 --driver-memory 2g cosine-lsh_yarn.jar

As I understand it, this assigns 3 executors per node and 19 GB of memory to each executor.
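To make the sizing arithmetic explicit, here is a minimal sketch of the heuristic as I read it from the linked Cloudera post. It is not part of my job, and the names `ExecutorSizing`, `Plan`, and `plan` are hypothetical. It assumes 1 core and 1 GB per node are reserved for the OS and Hadoop daemons, one executor slot is given up for the YARN application master, and the per-executor memory overhead is a fraction of the executor heap (0.10 is assumed here; the right value depends on the Spark version and on `spark.yarn.executor.memoryOverhead`).

```scala
// Rough executor-sizing arithmetic for Spark on YARN.
// Every default below is an assumption, not a value read from the cluster.
object ExecutorSizing {
  final case class Plan(executorsPerNode: Int, numExecutors: Int, heapGb: Int)

  def plan(nodes: Int,
           coresPerNode: Int,
           memPerNodeGb: Int,
           coresPerExecutor: Int = 5,        // matches --executor-cores 5
           reservedCores: Int = 1,           // assumed: left for OS/daemons
           reservedMemGb: Int = 1,           // assumed: left for OS/daemons
           overheadFraction: Double = 0.10   // assumed overhead per executor
          ): Plan = {
    val usableCores = coresPerNode - reservedCores
    val usableMemGb = memPerNodeGb - reservedMemGb

    // How many executors of the requested width fit on one node.
    val executorsPerNode = usableCores / coresPerExecutor

    // One slot across the cluster is given up for the application master.
    val numExecutors = nodes * executorsPerNode - 1

    // YARN container = heap + overhead, with overhead = fraction * heap,
    // so heap = container / (1 + fraction), rounded down to whole GB.
    val containerGb = usableMemGb.toDouble / executorsPerNode
    val heapGb = math.floor(containerGb / (1 + overheadFraction)).toInt

    Plan(executorsPerNode, numExecutors, heapGb)
  }

  def main(args: Array[String]): Unit = {
    // The cluster described above: 3 nodes, 20 cores and 64 GB each.
    println(plan(nodes = 3, coresPerNode = 20, memPerNodeGb = 64))
  }
}
```

Under these assumptions the sketch gives 3 executors per node and 19 GB per executor, but only 8 executors in total, so it may be worth checking in the YARN UI how many of the 11 requested executors were actually granted.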

However, I still had no results after more than 2 hours.

My Spark configuration is:

val conf = new SparkConf()
  .setAppName("LSH-Cosine")
  .setMaster("yarn-cluster")
  .set("spark.driver.maxResultSize", "0");

How can I investigate this issue? Where should I start in order to reduce the computation time?

EDIT:

1)

I have noticed that coalesce is much slower on YARN:

entries.coalesce(1, true).saveAsTextFile(text_string)

2)

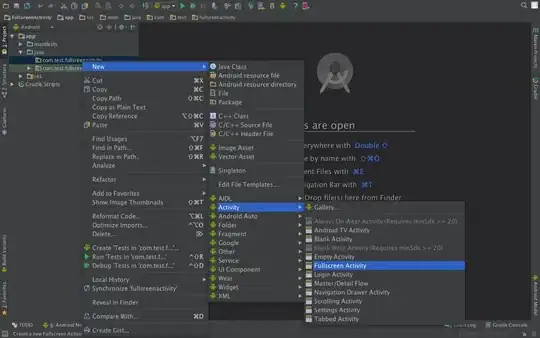

EXECUTORS AND STAGES FROM HPC:

EXECUTORS AND STAGES FROM SERVER: