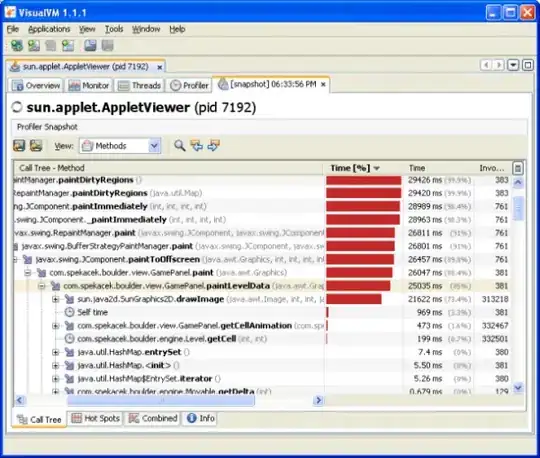

If I use make -j2, it builds fine, but under-utilizes CPU:

If I use `make -j4`, it builds fast, but some particularly template-heavy files consume a lot of memory while compiling. This slows down the entire system, and the build itself, because memory gets swapped out to the HDD:

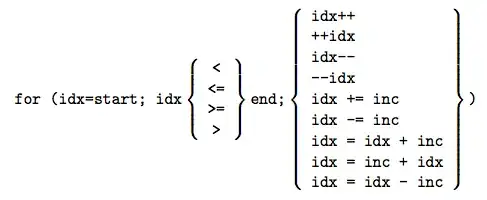

How do I make it automatically limit the number of parallel jobs based on memory, so that it builds the project at the maximum rate but slows down in some places to avoid hitting the memory wall?
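One way to approximate this outside make is a small launcher that starts a new job only while `MemAvailable` in `/proc/meminfo` stays above a threshold. The following is a minimal sketch, assuming Linux and a flat list of independent commands. The names `run_jobs` and `may_start_job`, and the 2 GiB default headroom, are hypothetical choices for illustration.

```python
import subprocess
import time


def mem_available_kib(meminfo_text):
    """Extract MemAvailable (in KiB) from the text of /proc/meminfo."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            return int(line.split()[1])
    raise ValueError("MemAvailable not found in /proc/meminfo")


def read_mem_available_kib():
    with open("/proc/meminfo") as f:
        return mem_available_kib(f.read())


def may_start_job(running, max_jobs, avail_kib, min_free_kib):
    """Decide whether one more job may start.

    With nothing running, always allow a job so the build cannot stall;
    otherwise require both a free job slot and enough available memory.
    """
    if running == 0:
        return True
    return running < max_jobs and avail_kib >= min_free_kib


def run_jobs(commands, max_jobs=4, min_free_kib=2 * 1024 * 1024, poll_s=0.5):
    """Run independent shell commands, throttled by MemAvailable (Linux only).

    min_free_kib defaults to 2 GiB of headroom (a hypothetical value).
    Returns the number of commands that exited with a non-zero status.
    """
    pending = list(commands)
    running = []
    failures = 0
    while pending or running:
        # Reap finished jobs and count failures.
        still_running = []
        for proc in running:
            if proc.poll() is None:
                still_running.append(proc)
            elif proc.returncode != 0:
                failures += 1
        running = still_running
        # Start at most one job per tick: a freshly started compiler has not
        # allocated its memory yet, so launching several at once would overshoot.
        if pending and may_start_job(len(running), max_jobs,
                                     read_mem_available_kib(), min_free_kib):
            running.append(subprocess.Popen(pending.pop(0), shell=True))
        time.sleep(poll_s)
    return failures
```

For example, `run_jobs(["g++ -c a.cpp -o a.o", "g++ -c b.cpp -o b.o"], max_jobs=4)` would compile both files with at most four concurrent jobs. This does not replace make's dependency tracking, and a template-heavy file can still exceed the headroom after it has already started, since the check happens only at launch time.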

Ideas:

- Use `-l` and artificially adjust the load average when memory is busy (the load average does grow on its own, but only once the system is already in trouble).
- Make memory allocation syscalls (like `sbrk(2)` or `mmap(2)`) or page faults block the process until memory is reclaimed from finished jobs, instead of swapping out other processes. Unfortunately, this is deadlock-prone...
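The deadlock in the second idea can be reproduced with a toy model. A hypothetical `MemoryGate` stands in for the kernel and makes an "allocation" wait until enough of a fixed budget is free. Two jobs each grab part of what they need and then wait for the rest. Because a blocked job keeps holding what it already has, neither can proceed when the budget is too small. This is a minimal simulation with made-up sizes, not a real kernel mechanism; the timeout exists only so the demo terminates.

```python
import threading


class MemoryGate:
    """Hypothetical memory budget: allocations block until enough is free."""

    def __init__(self, budget):
        self.budget = budget
        self.used = 0
        self.cond = threading.Condition()

    def allocate(self, amount, timeout=None):
        """Wait until `amount` fits in the budget; return False on timeout."""
        with self.cond:
            ok = self.cond.wait_for(lambda: self.used + amount <= self.budget, timeout)
            if ok:
                self.used += amount
            return ok

    def release(self, amount):
        with self.cond:
            self.used -= amount
            self.cond.notify_all()


def demo_deadlock(budget, first=4, second=4, timeout=0.2):
    """Two jobs each allocate `first`, then request `second` more.

    Returns whether each job's second allocation succeeded.
    """
    gate = MemoryGate(budget)
    barrier = threading.Barrier(2)
    results = []
    lock = threading.Lock()

    def job():
        gate.allocate(first)
        barrier.wait()  # both jobs now hold their first chunk
        ok = gate.allocate(second, timeout)
        barrier.wait()  # keep holding memory until both attempts finish,
                        # so the outcome does not depend on thread timing
        with lock:
            results.append(ok)
        if ok:
            gate.release(second)
        gate.release(first)

    threads = [threading.Thread(target=job) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With `demo_deadlock(10)`, both jobs hold 4 units (8 of 10 used) and each waits for 4 more that can only come from the other job, so both attempts time out. With `demo_deadlock(16)`, both succeed. Without a timeout, the first case would hang forever.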