I am fetching the following details for columns from SQL Server:

- Size
- Precision
- Scale

However, I have noticed that for an int column I get 10 as the precision. When I did some research, I couldn't find anything stating that the int data type has a precision of 10, and SQL Server Management Studio doesn't show it either.

So I don't understand where the precision of 10 comes from for the int data type.
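One possible reading, offered as an assumption rather than anything confirmed above: the precision reported for an integer type may simply be the number of decimal digits needed to write its largest value. A minimal sketch of that arithmetic, assuming the SQL Server int maps to a 32-bit signed integer like .NET's `Int32` (the class name `IntPrecisionCheck` is hypothetical):

```csharp
using System;

class IntPrecisionCheck
{
    static void Main()
    {
        // Assumption: SQL Server int and .NET Int32 are both 32-bit signed integers.
        int max = int.MaxValue; // 2147483647

        // Count the decimal digits of the largest representable value.
        int digits = max.ToString().Length;

        Console.WriteLine($"{max} has {digits} decimal digits");
    }
}
```

If that reading is right, the scale for int would be 0, since there are no digits after the decimal point.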

Table:

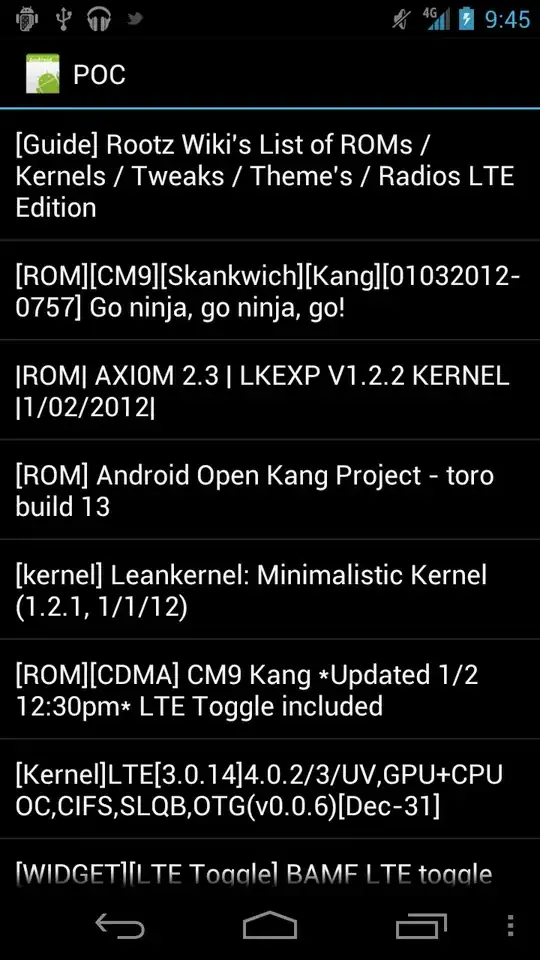

Screenshot:

Code:

    // Requires System.Data, System.Linq, and the SqlClient namespace in use.
    // Restrictions for the "Columns" collection: catalog, schema, table, column.
    String[] columnRestrictions = new String[4];
    columnRestrictions[0] = "MyDb";
    columnRestrictions[1] = "dbo";
    columnRestrictions[2] = "Employee";

    using (SqlConnection con = new SqlConnection("MyConnectionString"))
    {
        con.Open();

        var columns = con.GetSchema("Columns", columnRestrictions).AsEnumerable()
            .Select(t => new
            {
                Name = t[3].ToString(), // fourth column of the result (column name)
                Datatype = t.Field<string>("DATA_TYPE"),
                IsNullable = t.Field<string>("is_nullable"),
                Size = t.Field<Int32?>("character_maximum_length"),
                NumericPrecision = t.Field<int?>("NUMERIC_PRECISION"), // 10 for int columns
                NumericScale = t.Field<Int32?>("NUMERIC_SCALE")
            }).ToList();
    }