I am starting with the generic TensorFlow example.

To classify my data I need multiple labels on the final layer (ideally multiple softmax classifiers), because each example carries several independent labels, so the class probabilities do not sum to 1.
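To make the distinction concrete, here is a minimal pure-Python sketch (no TensorFlow needed) comparing a softmax over three classes with independent per-class sigmoids. The logit values are made up for illustration only.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability; outputs always sum to 1.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    # Numerically stable logistic function; each output is independent.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

# Hypothetical logits for one example with three possible labels.
logits = [2.0, 1.5, -3.0]

softmax_probs = softmax(logits)               # classes compete with each other
sigmoid_probs = [sigmoid(x) for x in logits]  # each label is scored on its own

print(softmax_probs, sum(softmax_probs))
print(sigmoid_probs, sum(sigmoid_probs))
```

With softmax, raising one class's probability necessarily lowers the others; with per-class sigmoids, the first two labels can both score above 0.5 at the same time, which is the behaviour I am after.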

Specifically, in retrain.py, these lines in add_final_training_ops() create the final tensor:

    final_tensor = tf.nn.softmax(logits, name=final_tensor_name)

and these lines compute the loss:

    cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
        logits, ground_truth_input)

Is there already a generic multi-label classifier in TensorFlow? If not, how can I achieve multi-label classification?

add_final_training_ops() from tensorflow/examples/image_retraining/retrain.py:

    def add_final_training_ops(class_count, final_tensor_name, bottleneck_tensor):
      with tf.name_scope('input'):
        bottleneck_input = tf.placeholder_with_default(
            bottleneck_tensor, shape=[None, BOTTLENECK_TENSOR_SIZE],
            name='BottleneckInputPlaceholder')

        ground_truth_input = tf.placeholder(tf.float32,
                                            [None, class_count],
                                            name='GroundTruthInput')

      layer_name = 'final_training_ops'
      with tf.name_scope(layer_name):
        with tf.name_scope('weights'):
          layer_weights = tf.Variable(tf.truncated_normal([BOTTLENECK_TENSOR_SIZE, class_count], stddev=0.001), name='final_weights')
          variable_summaries(layer_weights)
        with tf.name_scope('biases'):
          layer_biases = tf.Variable(tf.zeros([class_count]), name='final_biases')
          variable_summaries(layer_biases)
        with tf.name_scope('Wx_plus_b'):
          logits = tf.matmul(bottleneck_input, layer_weights) + layer_biases
          tf.summary.histogram('pre_activations', logits)

      final_tensor = tf.nn.softmax(logits, name=final_tensor_name)
      tf.summary.histogram('activations', final_tensor)

      with tf.name_scope('cross_entropy'):
        cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
            logits, ground_truth_input)
        with tf.name_scope('total'):
          cross_entropy_mean = tf.reduce_mean(cross_entropy)
      tf.summary.scalar('cross_entropy', cross_entropy_mean)

      with tf.name_scope('train'):
        train_step = tf.train.GradientDescentOptimizer(FLAGS.learning_rate).minimize(
            cross_entropy_mean)

      return (train_step, cross_entropy_mean, bottleneck_input, ground_truth_input,
              final_tensor)
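For reference, the sigmoid counterparts of the two softmax calls above are tf.nn.sigmoid and tf.nn.sigmoid_cross_entropy_with_logits. The sketch below is a pure-Python illustration of what the per-class sigmoid cross-entropy computes, using the numerically stable form max(x, 0) - x * z + log(1 + exp(-|x|)); it is not the TensorFlow implementation itself, and the labels and logits are hypothetical values for a single example.

```python
import math

def sigmoid_cross_entropy_with_logits(labels, logits):
    # One independent binary cross-entropy per class.
    # Stable form: max(x, 0) - x * z + log(1 + exp(-|x|)),
    # where x is the logit and z is the 0/1 target for that class.
    return [max(x, 0.0) - x * z + math.log1p(math.exp(-abs(x)))
            for z, x in zip(labels, logits)]

# Hypothetical multi-hot target: labels 0 and 1 are both present.
labels = [1.0, 1.0, 0.0]
logits = [2.0, 1.5, -3.0]

per_class = sigmoid_cross_entropy_with_logits(labels, logits)
mean_loss = sum(per_class) / len(per_class)
print(per_class, mean_loss)
```

Unlike the softmax loss, the target vector here may contain several ones, and each class contributes its own loss term regardless of the others.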

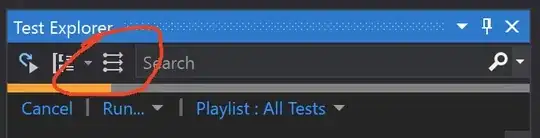

Even after adding the sigmoid classifier and retraining, Tensorboard still shows softmax: