I'm looking for an efficient way to detect records that have been deleted in production and to update the data warehouse to reflect those deletes. Efficiency matters because the table has more than 12M rows and contains transactional data used for accounting purposes.

Originally, everything was done in a stored procedure written by my predecessor, and I've been tasked with moving the process to SSIS.

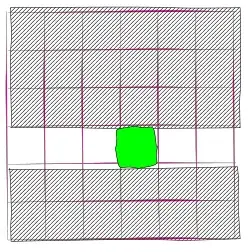

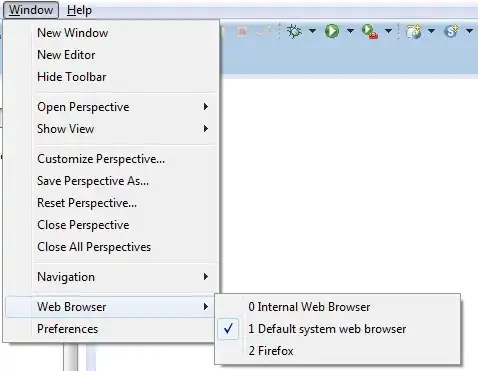

Here is what my test pattern looks like so far:

Inside the Data Flow Task:

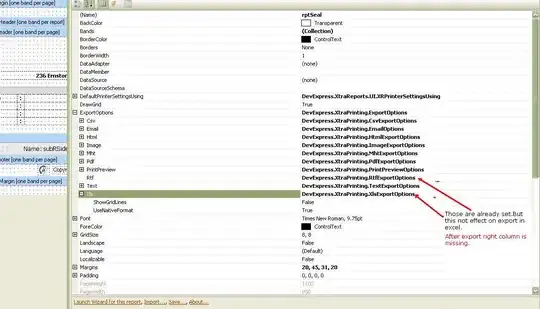

I'm using MD5 hashes to speed up the ETL process as demonstrated in this article.

This should speed up the process considerably, because far fewer rows have to be held in memory for comparison and most of the Conditional Split processing goes away.

The problem is that this pattern doesn't account for records that are deleted in production.
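To make the gap concrete, here is a rough Python sketch of how I understand the hash comparison to behave. It is not my actual package: the `id` and `amount` columns, the sample rows, and the helper names `row_hash` and `classify` are all made up for illustration.

```python
import hashlib


def row_hash(row: dict) -> str:
    """MD5 over the non-key columns, taken in a fixed (sorted) column order."""
    payload = "|".join(str(row[col]) for col in sorted(row) if col != "id")
    return hashlib.md5(payload.encode("utf-8")).hexdigest()


def classify(source_rows, warehouse_hashes):
    """Walk the SOURCE rows only and compare hashes against the warehouse."""
    inserts, updates = [], []
    for row in source_rows:
        key = row["id"]
        if key not in warehouse_hashes:
            inserts.append(key)            # key unknown to the warehouse
        elif warehouse_hashes[key] != row_hash(row):
            updates.append(key)            # same key, different hash
    return inserts, updates


# Hypothetical warehouse state: key -> stored hash
warehouse_rows = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 250},
    {"id": 3, "amount": 75},
]
warehouse_hashes = {r["id"]: row_hash(r) for r in warehouse_rows}

# Hypothetical production state: id 3 has been deleted
source_rows = [
    {"id": 1, "amount": 100},  # unchanged
    {"id": 2, "amount": 300},  # changed
    {"id": 4, "amount": 40},   # new
]

inserts, updates = classify(source_rows, warehouse_hashes)

# Warehouse keys the source-driven loop never visits
unvisited = set(warehouse_hashes) - {r["id"] for r in source_rows}

print(inserts, updates, sorted(unvisited))  # [4] [2] [3]
```

Because the loop is driven by the source rows, key 3 never reaches the comparison at all, so nothing in the flow flags it as deleted.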

How should I go about doing this? It may be simple to you, but I'm new to SSIS, so I'm not sure how to ask correctly.

Thank you in advance.