The image below shows the UI on port 8081. The master shows a running application when I start a Scala shell or a PySpark shell, but when I use spark-submit to run a Python script, the master doesn't show any running application. This is the command I used: spark-submit --master spark://localhost:7077 sample_map.py. The web UI is at :4040. I want to know whether I'm submitting scripts the right way, or whether spark-submit simply never shows a running application.

Neither localhost:8080 nor <master_ip>:8080 opens for me, but <master_ip>:8081 does. It shows the executor info.

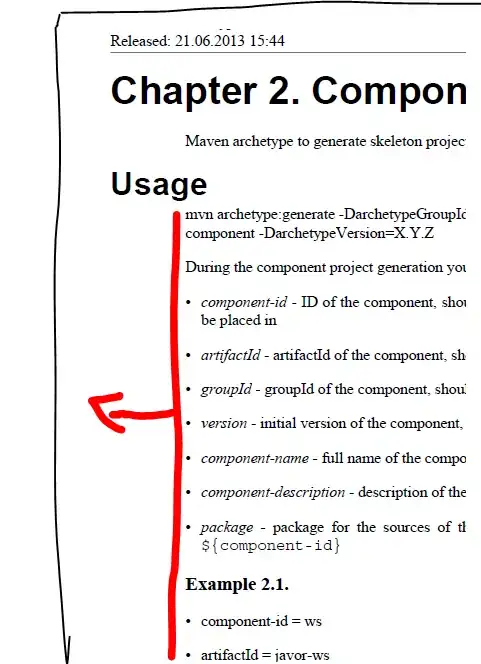

These are my configurations in spark-env.sh:

export SPARK_EXECUTOR_MEMORY=512m

export SPARK_MASTER_WEBUI_PORT=4040

export SPARK_WORKER_CORES=2

export SPARK_WORKER_MEMORY=1g

export SPARK_WORKER_INSTANCES=2

export SPARK_WORKER_DIR=/opt/worker

export SPARK_DAEMON_MEMORY=512m

export SPARK_LOCAL_DIRS=/tmp/spark

export SPARK_MASTER_IP 'splunk_dep'
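
As a quick sanity check on the settings above, here is a minimal sketch in plain Python (no Spark needed) that parses the export lines and flags two things that may be worth a second look: lines that are not in `NAME=value` form, and a master web UI port equal to 4040, which as far as I know is also the default port of the per-application UI. The helper name `check_spark_env` and the list `SPARK_ENV_LINES` are made up for illustration, and the checks are assumptions about what could matter here, not a confirmed diagnosis.

```python
import re

# Default port of the per-application Spark UI (assumption based on the Spark docs).
APP_UI_DEFAULT_PORT = 4040

# The export lines from spark-env.sh, copied verbatim from the question.
SPARK_ENV_LINES = [
    "export SPARK_EXECUTOR_MEMORY=512m",
    "export SPARK_MASTER_WEBUI_PORT=4040",
    "export SPARK_WORKER_CORES=2",
    "export SPARK_WORKER_MEMORY=1g",
    "export SPARK_WORKER_INSTANCES=2",
    "export SPARK_WORKER_DIR=/opt/worker",
    "export SPARK_DAEMON_MEMORY=512m",
    "export SPARK_LOCAL_DIRS=/tmp/spark",
    "export SPARK_MASTER_IP 'splunk_dep'",
]

# Matches lines of the form: export NAME=value
EXPORT_RE = re.compile(r"^export\s+([A-Za-z_][A-Za-z0-9_]*)=(.*)$")


def check_spark_env(lines):
    """Return (settings, warnings) for a list of spark-env.sh lines (hypothetical helper)."""
    settings = {}
    warnings = []
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = EXPORT_RE.match(line)
        if match is None:
            # The line does not assign a value, so the variable would stay unset.
            warnings.append("no NAME=value assignment: " + line)
            continue
        name = match.group(1)
        value = match.group(2).strip().strip("'\"")  # drop surrounding quotes
        settings[name] = value

    port = settings.get("SPARK_MASTER_WEBUI_PORT")
    if port is not None and port.isdigit() and int(port) == APP_UI_DEFAULT_PORT:
        warnings.append(
            "SPARK_MASTER_WEBUI_PORT is %s, the same as the default application UI port" % port
        )
    return settings, warnings


if __name__ == "__main__":
    parsed, found = check_spark_env(SPARK_ENV_LINES)
    for warning in found:
        print("WARNING: " + warning)
```

With the lines above, the sketch flags the `SPARK_MASTER_IP` line (it has no `=`) and the master web UI port. Whether either one is related to the missing application entry is something I have not verified.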

I'm using CentOS, Python 2.7, and spark-2.0.2-bin-hadoop2.7.