Using `pyspark.ml` (Spark 2.0, Python) and a 1.2-million-row dataset, I am trying to build a model that predicts purchase tendency with a Random Forest classifier. However, when I apply the transformation to the split test dataset, the prediction is always 0.

The dataset looks like:

```
[Row(tier_buyer=u'0', N1=u'1', N2=u'0.72', N3=u'35.0', N4=u'65.81', N5=u'30.67', N6=u'0.0'....
```

`tier_buyer` is the field used as input to the label indexer. The remaining fields contain numeric data.

Steps

1.- Load the Parquet file and fill any null values:

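```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

parquet = spark.read.parquet('path_to_parquet')
parquet.createOrReplaceTempView("parquet")

# Drop duplicate rows, then replace nulls in numeric columns with 0
dfraw = spark.sql("SELECT * FROM parquet").dropDuplicates()
df = dfraw.na.fill(0)
```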

2.- Create the feature vector:

```python
from pyspark.ml.feature import VectorAssembler

features = VectorAssembler(
    inputCols=['N1', 'N2', ...],  # remaining feature columns elided
    outputCol='features')
```

3.- Create the string indexer:

```python
from pyspark.ml.feature import StringIndexer

label_indexer = StringIndexer(inputCol='tier_buyer', outputCol='label')
```

4.- Split the data into train and test datasets:

```python
(train, test) = df.randomSplit([0.7, 0.3])
```

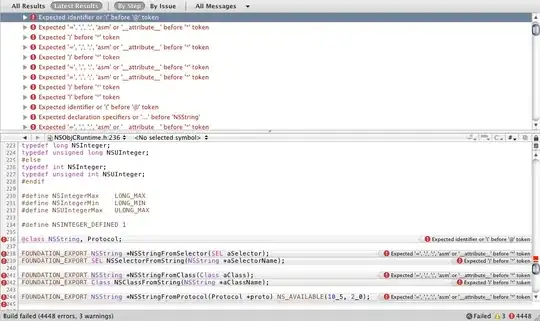

Resulting train dataset

Resulting Test dataset

5.- Define the classifier:

```python
from pyspark.ml.classification import RandomForestClassifier

classifier = RandomForestClassifier(labelCol='label', featuresCol='features')
```

6.- Assemble the stages into a pipeline and fit it on the training set:

```python
from pyspark.ml import Pipeline

pipeline = Pipeline(stages=[features, label_indexer, classifier])
model = pipeline.fit(train)
```

7.- Transform the test dataset:

```python
predictions = model.transform(test)
```

8.- Show the test results, grouped by prediction:

```python
predictions.select("prediction", "label", "features").groupBy("prediction").count().show()
```

As you can see, the outcome is always 0. I have tried multiple feature variations in the hope of reducing the noise, and have also tried different sources and inferring the schema, with no luck: the results are the same.

Questions

- Is the current setup, as described above, correct?

- Could the `null` value filling on the original `DataFrame` be a source of failure in performing the prediction?

- In the screenshot shown above, some features appear in the form of a `tuple` and others in the form of a `list`. Why? I'm guessing this could be a possible source of error. (They are representations of dense and sparse vectors.)
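On the tuple-versus-list question: in `show()` output a Spark ML vector is printed either as a dense list of values or as a sparse `(size,[indices],[values])` triple, and `VectorAssembler` picks, row by row, whichever form is more compact, so rows with many zeros come out sparse. Both forms hold the same numbers. The pure-Python sketch below mimics that choice; the threshold `1.5 * (nnz + 1) < size` is, as far as I can tell, the rule Spark's `Vector.compressed` uses, and should be treated as an assumption. The helpers `to_display` and `to_dense` are hypothetical names.

```python
def to_display(values):
    """Render a feature row the way Spark prints it: sparse if that is smaller."""
    nnz = sum(1 for v in values if v != 0.0)
    size = len(values)
    # Assumed compression rule (mirrors Spark's Vector.compressed)
    if 1.5 * (nnz + 1.0) < size:
        indices = [i for i, v in enumerate(values) if v != 0.0]
        return (size, indices, [values[i] for i in indices])
    return list(values)


def to_dense(shown):
    """Expand either printed form back to a plain list of floats."""
    if isinstance(shown, tuple):
        size, indices, vals = shown
        dense = [0.0] * size
        for i, v in zip(indices, vals):
            dense[i] = v
        return dense
    return list(shown)


# Hypothetical rows: one mostly zeros, one using the values from the sample Row
mostly_zero = [0.0, 0.72, 0.0, 0.0, 0.0, 0.0]
mostly_set = [1.0, 0.72, 35.0, 65.81, 30.67, 0.0]

print(to_display(mostly_zero))
print(to_display(mostly_set))
```

Expanding either form with `to_dense` gives back the same list, which is why the mixed representation by itself should not change what the classifier sees.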