I'd like to minimize the following function (`quad_function`) with Optim.jl, using automatic differentiation (`autodiff = true`).

My objective function rounds Real values to whole numbers and is therefore step-like.

Because I use the autodiff option, my `Real` inputs arrive as dual numbers (`ForwardDiff.Dual`). Unfortunately, there is no `round` method implemented for `ForwardDiff.Dual`, so I wrote a `roundtoint64` function that extracts the real part (the value) before rounding. This approach causes problems during the optimization.
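A minimal sketch of why extracting the value loses the derivative. It uses a hypothetical hand-rolled dual type `MiniDual` (an assumption for illustration, not ForwardDiff's actual implementation). Arithmetic on the dual carries the derivative along, while stripping it down to `.val` and rounding returns a plain `Int64` with no derivative attached.

```julia
# Hypothetical minimal forward-mode dual number (NOT ForwardDiff.Dual):
# `val` is the real part, `der` the derivative carried alongside it.
struct MiniDual{T}
    val::T
    der::T
end

# Multiplication propagates the derivative via the product rule.
Base.:*(a::MiniDual, b::MiniDual) = MiniDual(a.val * b.val, a.der * b.val + a.val * b.der)

square(x) = x * x

# Same idea as roundtoint64: strip the dual down to its value, then round.
roundvalue(x::MiniDual) = roundvalue(x.val)
roundvalue(x::Real) = Int64(round(x))

x = MiniDual(5.0, 1.0)   # seed dx/dx = 1
d1 = square(x)           # val = 25.0, der = 10.0: the derivative survives
d2 = roundvalue(x)^2     # 25, a plain Int64: the derivative is gone
```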

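```julia
using Plots
using ForwardDiff   # needed for ForwardDiff.Dual below

plotlyjs()

# Round to an Int64; for dual numbers, recurse into the real part
# (this discards the derivative information carried by the dual).
function roundtoint64(x)
    if typeof(x) <: ForwardDiff.Dual
        roundtoint64(x.value)
    else
        Int64(round(x))
    end
end

function quad_function(xs::Vector)
    roundtoint64(xs[1])^2 + roundtoint64(xs[2])^2
end

x, y = linspace(-5, 5, 100), linspace(-5, 5, 100)
z = Surface((x, y) -> quad_function([x, y]), x, y)
surface(x, y, z, linealpha = 0.3)
```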

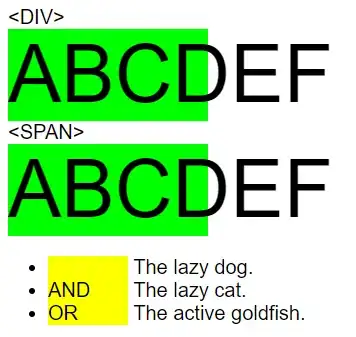

This is how my quad_function looks like:

The problem is that the optimizer converges immediately and makes no progress:

```julia
using Optim

res = Optim.optimize(
    quad_function,
    [5.0, 5.0],
    Newton(),
    OptimizationOptions(
        autodiff = true,
        # show_trace = true
    ))
```

Result:

```
Results of Optimization Algorithm
 * Algorithm: Newton's Method
 * Starting Point: [5.0,5.0]
 * Minimizer: [5.0,5.0]
 * Minimum: 5.000000e+01
 * Iterations: 0
 * Convergence: true
   * |x - x'| < 1.0e-32: false
   * |f(x) - f(x')| / |f(x)| < 1.0e-32: false
   * |g(x)| < 1.0e-08: true
   * Reached Maximum Number of Iterations: false
 * Objective Function Calls: 1
 * Gradient Calls: 1
```

```julia
optimal_values = Optim.minimizer(res) # [5.0, 5.0]
optimum = Optim.minimum(res)          # 50.0
```
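One likely reason for the immediate stop, sketched under the assumption that a plain central finite difference is a fair stand-in for the autodiff gradient: the rounded objective is flat around `[5.0, 5.0]`, so the gradient is exactly zero and the `|g(x)| < 1.0e-08` test is already satisfied before the first step. `fd_gradient` is a hypothetical helper, not part of Optim.jl.

```julia
# Same rounding objective as above, written without ForwardDiff.
roundtoint64(x::Real) = Int64(round(x))
quad_function(xs::Vector) = roundtoint64(xs[1])^2 + roundtoint64(xs[2])^2

# Hypothetical helper: central finite-difference gradient with step h.
function fd_gradient(f, x::Vector{Float64}; h = 1e-6)
    g = zeros(length(x))
    for i in eachindex(x)
        e = zeros(length(x)); e[i] = h
        g[i] = (f(x + e) - f(x - e)) / (2h)
    end
    return g
end

g = fd_gradient(quad_function, [5.0, 5.0])   # [0.0, 0.0]
maximum(abs, g) < 1e-8                        # true: a gradient-based method sees a stationary point
```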

I also tried starting the optimization from an integer vector `[5,5]` to avoid rounding altogether, but that fails while finding the initial step size:

```
ERROR: InexactError()
 in alphainit(::Int64, ::Array{Int64,1}, ::Array{Int64,1}, ::Int64) at /home/sebastian/.julia/v0.5/Optim/src/linesearch/hz_linesearch.jl:63
 in optimize(::Optim.TwiceDifferentiableFunction, ::Array{Int64,1}, ::Optim.Newton, ::Optim.OptimizationOptions{Void}) at /home/sebastian/.julia/v0.5/Optim/src/newton.jl:69
 in optimize(::Function, ::Array{Int64,1}, ::Optim.Newton, ::Optim.OptimizationOptions{Void}) at /home/sebastian/.julia/v0.5/Optim/src/optimize.jl:169
```
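The `InexactError` itself can be reproduced without Optim: an `Int64` array cannot store a fractional value. Presumably something similar happens in `alphainit`, whose signature in the trace shows the step and vectors typed as `Int64` because the starting point was; that is an inference from the trace, not something verified in Optim's source.

```julia
x = [5, 5]               # Vector{Int64}
caught = try
    x[1] = 5 - 0.5       # 4.5 cannot be converted to Int64
    nothing
catch err
    err
end
caught isa InexactError  # true; x is left unchanged as [5, 5]

# Converting the starting point to Float64 avoids the conversion error.
x0 = float([5, 5])       # [5.0, 5.0]
```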

Question: Is there a way to tell optimize to only explore the integer space?
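Newton's method works on continuous variables, so one option is to search the integer lattice directly instead. Below is a minimal sketch of a swapped-in technique, a greedy integer neighbourhood descent; `integer_descent` is a hypothetical helper, not an Optim.jl API. It only guarantees a local minimum on the lattice, which happens to be the global one for this separable convex objective.

```julia
# Hypothetical helper: greedy descent on the integer lattice. At each step it
# tries x ± e_i for every coordinate i and moves to the best improving
# neighbour; it stops when no neighbour improves f.
function integer_descent(f, x0::Vector{Int}; maxiter = 1000)
    x, fx = copy(x0), f(x0)
    for _ in 1:maxiter
        best_x, best_f = x, fx
        for i in eachindex(x), s in (-1, 1)
            y = copy(x)
            y[i] += s
            fy = f(y)
            if fy < best_f
                best_x, best_f = y, fy
            end
        end
        best_f < fx || break   # no improving neighbour: lattice-local minimum
        x, fx = best_x, best_f
    end
    return x, fx
end

# On integer inputs the rounding in quad_function is a no-op,
# so the objective reduces to a plain sum of squares.
quad_int(xs) = xs[1]^2 + xs[2]^2

xmin, fmin = integer_descent(quad_int, [5, 5])   # ([0, 0], 0)
```

For larger or constrained problems, a dedicated mixed-integer solver is the more usual tool.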

Update:

I think the problem with the `Int64`-conversion approach is that the result is no longer a `ForwardDiff.Dual`, so no derivatives/gradients can be computed. What would a better round function look like, one that also rounds nested duals and returns duals?
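A sketch of a dual-preserving round for a hypothetical hand-rolled type `MiniDual{T}` (not `ForwardDiff.Dual`, whose constructors and fields may differ). It recurses into nested duals, rounds the innermost value, and keeps the dual structure. Because rounding is piecewise constant, every derivative part has to be zero, so a gradient-based method like `Newton()` would still see a zero gradient.

```julia
# Hypothetical minimal dual number (NOT ForwardDiff.Dual). `val` may itself be
# a MiniDual, giving nested duals as used for second derivatives.
struct MiniDual{T}
    val::T
    der::T
end

Base.zero(d::MiniDual) = MiniDual(zero(d.val), zero(d.der))

# Round the innermost real value and keep the dual structure;
# the derivative of a step function is zero, so `der` becomes zero.
round_dual(x::Real) = round(x)
round_dual(d::MiniDual) = MiniDual(round_dual(d.val), zero(d.der))

inner = MiniDual(4.6, 1.0)                    # first-order dual
nested = MiniDual(inner, MiniDual(1.0, 0.0))  # dual whose parts are duals

r = round_dual(nested)   # r.val.val == 5.0; all derivative parts are 0.0
```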