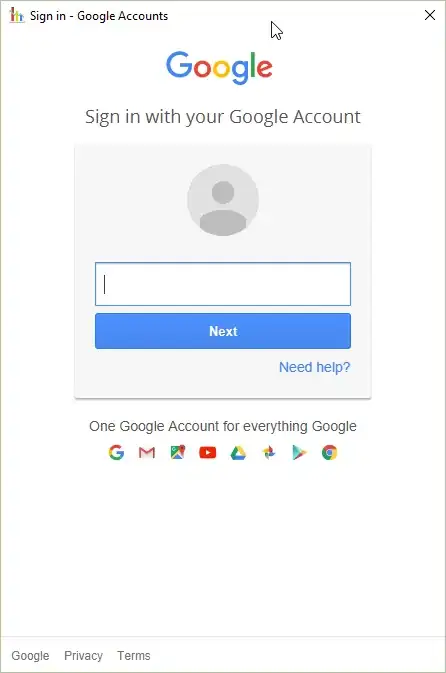

I'm trying to implement linear gradient-descent Sarsa based on Sutton & Barto's book; see the algorithm in the picture.

However, I struggle to understand something in the algorithm:

- Is the dimension of w and z independent of the number of available actions? In the book they seem to have dimension equal to the number of features, which I would say is independent of the number of actions.

- Is there a separate w and z for each action? I cannot find anything in the book suggesting that this is the case.

- If I am right in the two bullets above, then I cannot see how the index list F_a can depend on the action, and therefore I cannot see how the action-value function q_a can depend on the action (see the lines highlighted in yellow in the algorithm). But the action value must depend on the action, so there is something I am not getting...
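To make my confusion concrete, here is a minimal sketch of how I currently read the algorithm. It assumes binary features and a single weight vector shared by all actions; the names `active_features` and `q_values`, the feature map itself, and the sizes are made up for illustration and are not from the book.

```python
import numpy as np

n_features = 8   # hypothetical number of binary features
n_actions = 3    # hypothetical number of actions

rng = np.random.default_rng(0)
w = rng.normal(size=n_features)  # one weight per feature, shared by all actions (my reading)


def active_features(state):
    """Hypothetical feature map: indices of the features present in `state`.

    Note that it takes only the state, not the action.
    """
    return [state % n_features, (state + 3) % n_features]


def q_values(state):
    """Compute q_a = sum of w[i] for i in F_a, for every action a."""
    # Under my reading F_a is built from the state alone,
    # so the same index list is used for every action.
    return np.array([w[active_features(state)].sum() for _ in range(n_actions)])


q = q_values(5)
print(q)
```

With this reading every entry of `q` is identical, so choosing the greedy action tells me nothing, which is exactly what confuses me.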

I hope someone can help clarify this for me :)