The overall process is:

- Load the data into a tool

- Draw a shape. E.G. Using a polygon tool or other shape tools

- Export the points and use them for training directly, or convert them into a dense pixel mask.

Annotation Process Detail

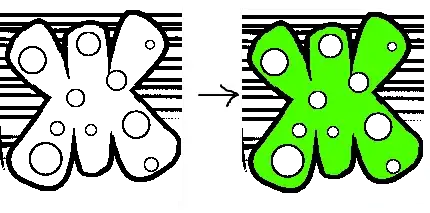

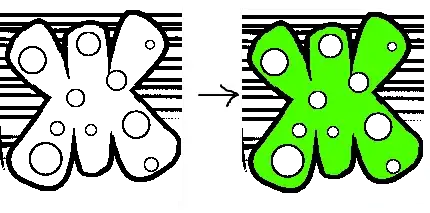

Generally speaking, vector based tools are preferred because they are more accurate.

For example on the left is 4 polygon points and on the right is a brush.

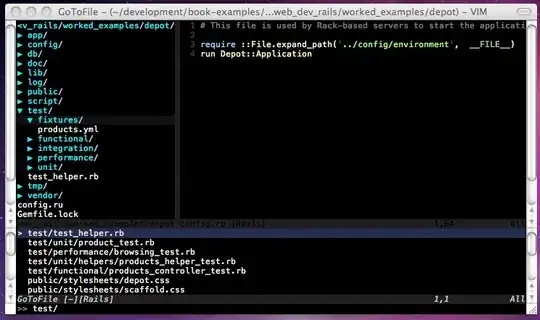

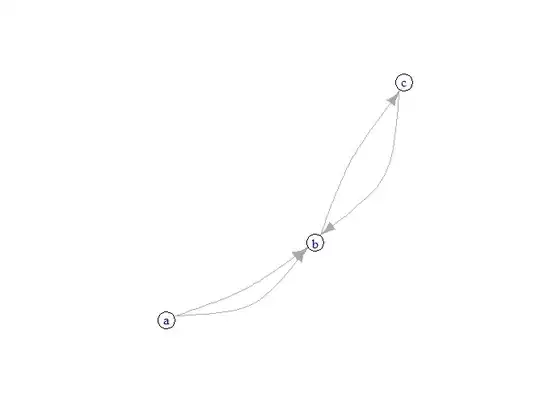

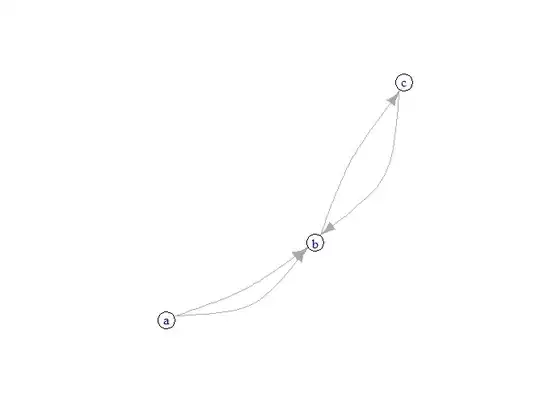

Counter intuitively it can even be faster to trace out the points. For example here, I simply moved my mouse over the outline which records this:

But it's actually stored as points:

Other shapes are used

For example, you can use an ellipse or a circle to create segmentation masks.

There's no requirement to actually manually create a polygon.

Autobordering

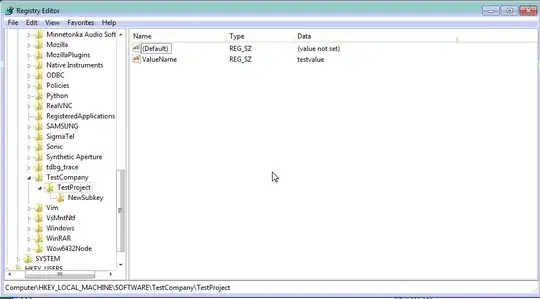

One of the main concepts behind wanting to get to 100% coverage of pixels is a concept called autobordering. Basically it just means that the edges of objects intersect well. In Diffgram for example you can select two points and it will create a matching border.

Here's an example:

Finally there are many other concepts like pre-labeling, copying to next, tracking etc.

Tooling

Diffgram's Segmentation Labeling Software is a great tool option for Image and Video Annotation. It's open-core, you can run it on your kubernetes cluster.