I'm reading an image segmentation paper that approaches the problem with the "signal separation" paradigm: the idea that a signal (in this case, an image) is composed of several signals (the objects in the image) plus noise, and that the task is to separate out those signals (i.e., to segment the image).

The output of the algorithm is an $M \times T$ matrix, which represents a segmentation of an image into M components. T is the total number of pixels in the image, and the entry in row i, column j is the value of the source component (/signal/object) i at pixel j:

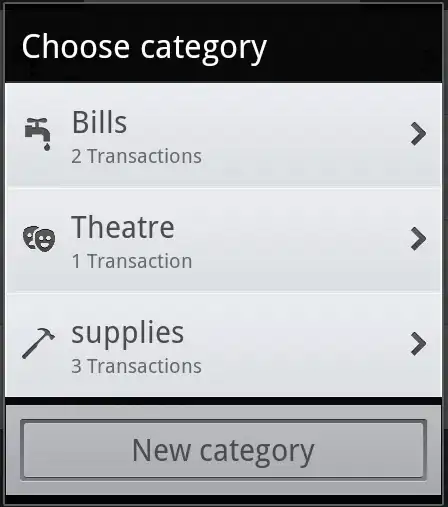
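To make the layout concrete, here is a small NumPy sketch. It assumes the pixels were flattened in row-major order and uses a random stand-in `S` for the algorithm's output; the name `S` and the sizes are hypothetical. Row i of the matrix lists component i's value at every pixel, and reshaping a row to the image's height and width turns it back into an image of that component.

```python
import numpy as np

# Hypothetical sizes: M components, an H x W image, so T = H * W pixels.
M, H, W = 3, 4, 5
T = H * W

rng = np.random.default_rng(0)
S = rng.random((M, T))  # random stand-in for the algorithm's M x T output

# Row i holds the value of component i at each pixel j = 0, ..., T - 1
# (NumPy indices start at 0, unlike the 1-based m in [1, M]).
component_1 = S[1]          # shape (T,)
value_at_pixel_7 = S[1, 7]  # component 1 at pixel j = 7

# Reshaping a row (row-major flattening assumed) gives the component as an image.
component_1_image = component_1.reshape(H, W)
print(component_1_image.shape)  # (4, 5)
```

With row-major flattening, pixel 7 of a 4 x 5 image sits at row 1, column 2 of the reshaped array.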

In the paper I'm reading, the authors wish to select a component m, with $m \in [1, M]$, that matches certain smoothness and entropy criteria. But I'm failing to understand what entropy means in this case.

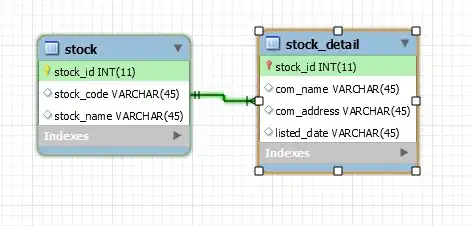

Entropy is defined as the following:

The authors say that the probabilities in this formula are associated with the bins of the histogram of the component.
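Assuming the paper uses the standard Shannon entropy of a histogram (an assumption; the symbols $K$, $n_k$, $p_k$ and $H_m$ below are illustrative labels, not necessarily the paper's notation), sort the $T$ values of component $m$ into $K$ bins and let $n_k$ be the number of pixels whose value falls in bin $k$:

$$
p_k = \frac{n_k}{T}, \qquad \sum_{k=1}^{K} p_k = 1, \qquad H_m = -\sum_{k=1}^{K} p_k \log p_k \quad (\text{with } 0 \log 0 = 0)
$$

Under this definition $0 \le H_m \le \log K$: $H_m = 0$ exactly when every pixel falls into a single bin, and $H_m = \log K$ when the pixels are spread evenly over all $K$ bins.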

The target component is a tumor, and the paper reads: "the tumor related component with 'almost' constant values is expected to have the lowest value of entropy."
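A minimal NumPy sketch of that claim, assuming the entropy is the Shannon entropy of a histogram over a fixed value range. The helper `histogram_entropy`, the 16 bins and the range [0, 1] are hypothetical choices, not taken from the paper. An "almost" constant component puts nearly all of its pixels into one bin, while a component whose values vary across the image spreads them over many bins.

```python
import numpy as np


def histogram_entropy(values, bins=16, value_range=(0.0, 1.0)):
    """Shannon entropy (natural log) of the histogram of `values`.

    Hypothetical helper: the bin count and value range are assumptions,
    not the paper's settings.
    """
    counts, _ = np.histogram(values, bins=bins, range=value_range)
    p = counts / counts.sum()        # probability of each bin
    p = p[p > 0]                     # empty bins contribute 0 (0 * log 0 := 0)
    return float(np.sum(p * np.log(1.0 / p)))  # sum p*log(1/p) == -sum p*log(p)


rng = np.random.default_rng(0)
T = 10_000
near_constant = 0.53 + 0.001 * rng.standard_normal(T)  # "almost" constant component
spread_out = rng.random(T)                              # values spread over [0, 1)

h_const = histogram_entropy(near_constant)
h_spread = histogram_entropy(spread_out)
print(h_const)                 # 0.0: every pixel lands in the same bin
print(h_spread, np.log(16))    # close to the maximum log(16), about 2.77

# Picking the lowest-entropy row of a stacked M x T matrix (here M = 2).
S = np.vstack([spread_out, near_constant])
lowest = int(np.argmin([histogram_entropy(row) for row in S]))
print(lowest)                  # 1, the near-constant component
```

The fixed `value_range` matters here: with NumPy's default range (each vector's own minimum and maximum), even a near-constant vector would be stretched across all bins and would no longer have low entropy, so the bins presumably need to be shared across components for the comparison to be meaningful.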

But what does low entropy mean in this context? What does each bin represent? What does a vector with low entropy look like?
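To make the bins concrete, here is a toy sketch. The pixel values, the 4 bins and the range [0, 1] are made up for illustration, and `bin_table` and `entropy_from_probs` are hypothetical helpers. Each bin is an interval of pixel values, its count is how many of the component's pixels have a value in that interval, and its probability is that count divided by the number of pixels.

```python
import numpy as np


def bin_table(values, bins=4, value_range=(0.0, 1.0)):
    """Return (low, high, count, probability) for each histogram bin."""
    counts, edges = np.histogram(values, bins=bins, range=value_range)
    probs = counts / counts.sum()
    return [(edges[k], edges[k + 1], int(counts[k]), float(probs[k])) for k in range(bins)]


def entropy_from_probs(probs):
    """Shannon entropy (natural log), skipping empty bins."""
    p = np.asarray([q for q in probs if q > 0])
    return float(np.sum(p * np.log(1.0 / p)))


# Two toy components with T = 8 pixels each (made-up values).
flat = np.array([0.10, 0.12, 0.11, 0.10, 0.13, 0.11, 0.12, 0.10])
varied = np.array([0.1, 0.1, 0.3, 0.3, 0.6, 0.6, 0.9, 0.9])

for name, v in [("flat", flat), ("varied", varied)]:
    table = bin_table(v)
    for low, high, count, prob in table:
        print(f"{name}: bin {low:.2f} to {high:.2f} holds {count} pixels, p = {prob:.2f}")
    print(name, "entropy:", entropy_from_probs(row[3] for row in table))
```

The flat vector puts all 8 pixels in the first bin, so its entropy is 0; the varied vector puts 2 pixels in each of the 4 bins, so its entropy is $\log 4 \approx 1.39$, the maximum for 4 bins. NumPy's bins are half-open except the last one, which also includes its right edge (1.0 here).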