There are two parts to this question: one about plotting, and one about the networks themselves. Let's start with the second part. You need to understand that:

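- a single neuron, either without any activation or with a sigmoid on it, is a linear classifier. The sigmoid is monotonic, so thresholding its output at 0.5 is the same as thresholding w1*x1 + w2*x2 + b at 0, and the decision boundary is a straight line. To get a nonlinear boundary you need either a non-monotonic activation (like RBF) or at least one hidden layer.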

- some logic gates are linear (linearly separable), and some are not. In particular OR is linear (as is AND), while XOR is not. The proof of linearity for OR is really simple, since it can be implemented as

cl(x) = x1 + x2 - 0.5

if you now take the sign of the above expression you will see that it is 1 iff x1 + x2 > 0.5, and for binary inputs this happens exactly when at least one of x1, x2 is 1.

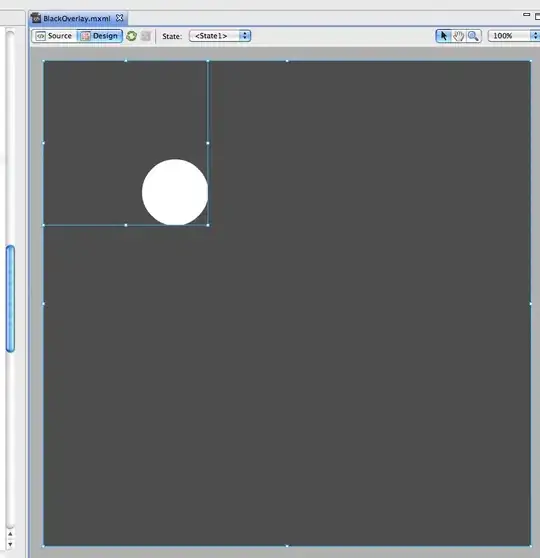
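A quick way to convince yourself of the OR/AND/XOR claim is to check it by brute force. The sketch below is only an illustration: the helper names (`linear_classifier`, `implements`, `linear_units_for`) are made up for this example, the AND threshold of 1.5 is my own choice, and the weight grid searched for XOR is an arbitrary coarse one. An empty search result is consistent with XOR not being linear, but it is not a proof.

```python
from itertools import product

# All four binary input pairs: (0, 0), (0, 1), (1, 0), (1, 1).
INPUTS = list(product([0, 1], repeat=2))


def linear_classifier(w1, w2, b):
    """Return a unit that outputs 1 when w1*x1 + w2*x2 + b > 0, else 0."""
    return lambda x1, x2: int(w1 * x1 + w2 * x2 + b > 0)


def implements(classifier, gate):
    """True if the classifier agrees with the gate on every binary input."""
    return all(classifier(x1, x2) == gate(x1, x2) for x1, x2 in INPUTS)


def linear_units_for(gate, step=0.25, limit=3.0):
    """Search a coarse grid of (w1, w2, b) for units that implement the gate."""
    n = int(round(limit / step))
    values = [i * step for i in range(-n, n + 1)]
    return [
        (w1, w2, b)
        for w1, w2, b in product(values, repeat=3)
        if implements(linear_classifier(w1, w2, b), gate)
    ]


# cl(x) = x1 + x2 - 0.5 from the answer implements OR.
or_unit = linear_classifier(1, 1, -0.5)
# Assumed threshold for AND: both inputs must be 1 to exceed 1.5.
and_unit = linear_classifier(1, 1, -1.5)

print("OR  is implemented:", implements(or_unit, lambda a, b: a | b))
print("AND is implemented:", implements(and_unit, lambda a, b: a & b))
print("linear units found for XOR:", len(linear_units_for(lambda a, b: a ^ b)))
```

The reason the XOR search must come back empty: (0, 0) → 0 forces b ≤ 0, (1, 0) → 1 and (0, 1) → 1 force w1 + b > 0 and w2 + b > 0, and adding those gives w1 + w2 + b > -b ≥ 0, which contradicts (1, 1) → 0.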

As for decision boundaries: for linear models this is straightforward, because the decision boundary can be determined analytically. For a nonlinear model this is in general not possible, so what we do is an approximation. You want to plot the decision boundary on a plane, for x1 ∈ [-T, T] and x2 ∈ [-T, T], so you sample points very densely from the input space on a grid (like (-T, -T), (-T, -T+0.01), ..., (T, T)) and check the classification of each one. You get a huge matrix of 0s and 1s, and you just draw a contour plot of this function.
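The sampling procedure could be sketched roughly as follows. Everything here is an assumption made for illustration: `xor_net` is a tiny 2-2-1 sigmoid network with hand-picked (not trained) weights, `classify_grid` and `plot_regions` are hypothetical helper names, and the plotting step assumes matplotlib is installed. Substitute your own model's prediction function for `xor_net`.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def xor_net(x1, x2):
    """Hand-weighted 2-2-1 sigmoid network (illustrative weights, not trained)."""
    h1 = sigmoid(20 * x1 + 20 * x2 - 10)    # behaves like OR on binary inputs
    h2 = sigmoid(-20 * x1 - 20 * x2 + 30)   # behaves like NAND on binary inputs
    out = sigmoid(20 * h1 + 20 * h2 - 30)   # behaves like AND of h1 and h2
    return int(out > 0.5)


def classify_grid(predict, T=2.0, step=0.05):
    """Classify every point of a regular grid over [-T, T] x [-T, T].

    Returns the axis values and a matrix where labels[i][j] is the class
    predicted at x1 = axis[j], x2 = axis[i].
    """
    n = int(round(2 * T / step)) + 1
    axis = [-T + i * step for i in range(n)]
    labels = [[predict(x1, x2) for x1 in axis] for x2 in axis]
    return axis, labels


def plot_regions(axis, labels):
    """Draw the 0/1 matrix as filled contours; the colour change is the boundary."""
    import matplotlib.pyplot as plt  # imported here so the rest runs without it

    plt.contourf(axis, axis, labels, levels=[-0.5, 0.5, 1.5], alpha=0.4)
    plt.xlabel("x1")
    plt.ylabel("x2")
    plt.show()
```

To see the picture, call `plot_regions(*classify_grid(xor_net))`. A smaller `step` gives a smoother boundary at the cost of more evaluations, since the number of grid points grows quadratically.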

I would like to compute a non linear boundary with sigmoid neurons with an input layer and output layer. The neuron has 2 inputs x1, x2 and a bias. I am trying to compute this.
