First, some basic information:

OS: Win7 64Bit | GPU: GTX970

I have a staging ID3D11Texture2D which I would like to encode.

I would like to pass the texture directly via nvEncRegisterResource, but it seems that I can only pass a D3D9 texture, not a D3D11 one; otherwise I get NV_ENC_ERR_UNIMPLEMENTED.

Therefore, I create an input buffer and fill it manually.

The texture format is DXGI_FORMAT_R8G8B8A8_UNORM. DXGI_FORMAT_NV12 is possibly only available from Windows 8 onwards.

The input buffer format is NV_ENC_BUFFER_FORMAT_ARGB, which should also be 8 bits per color channel. Since the channel order differs (the alpha channel sits in a different position), I expect a picture with wrong colors, but it should still be encoded.
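
A minimal sketch of the conversion mentioned above, under two assumptions that are not confirmed in this post: that DXGI_FORMAT_R8G8B8A8_UNORM stores each pixel as the bytes R, G, B, A, and that NV_ENC_BUFFER_FORMAT_ARGB is a word-ordered format whose bytes in memory are B, G, R, A. ConvertRgbaToBgra is a hypothetical helper name, not part of NVENC or D3D11.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical helper (not an NVENC or D3D11 function).
// Copies an image with bytes R,G,B,A per pixel into an image with bytes
// B,G,R,A per pixel. Source and destination may use different row pitches,
// both given in bytes.
void ConvertRgbaToBgra(const std::uint8_t* src, std::size_t srcPitch,
                       std::uint8_t* dst, std::size_t dstPitch,
                       std::size_t width, std::size_t height)
{
    for (std::size_t y = 0; y < height; ++y) {
        const std::uint8_t* s = src + y * srcPitch; // start of source row
        std::uint8_t* d = dst + y * dstPitch;       // start of destination row
        for (std::size_t x = 0; x < width; ++x) {
            d[4 * x + 0] = s[4 * x + 2]; // B
            d[4 * x + 1] = s[4 * x + 1]; // G
            d[4 * x + 2] = s[4 * x + 0]; // R
            d[4 * x + 3] = s[4 * x + 3]; // A
        }
    }
}
```

If the assumed byte orders are wrong for a given SDK version, only the four index assignments need to change.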

My process so far:

- Create ID3D11Texture2D render texture

- Create ID3D11Texture2D staging texture

- Create NVENC input buffer with nvEncCreateInputBuffer

- Create NVENC output buffer with nvEncCreateBitstreamBuffer

:: Update routine ::

- Render into render texture

- CopyResource() to staging texture

- Fill NVENC input buffer from staging texture

- Encode frame

- Get data from NVENC output buffer

As you can see, I encode only one frame per update.

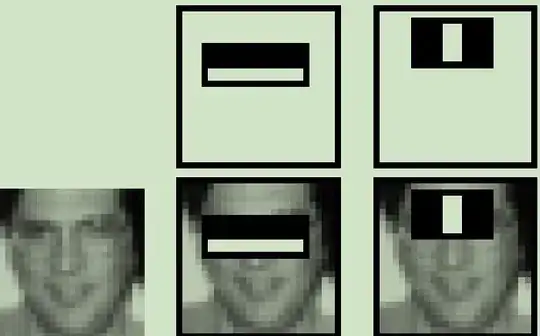

So far everything works without errors, but when I look at the encoded frame, the picture size is wrong.

It should be 1280 x 720. Also, if I try to pass more than one frame, I get a broken file.

I understand that in H.264 my first frame is an I-frame and the following ones should be P-frames.

Now my code:

:: Create input buffer

NVENCSTATUS RenderManager::CreateInputBuffer()
{
    NVENCSTATUS nvStatus = NV_ENC_SUCCESS;

    NV_ENC_CREATE_INPUT_BUFFER createInputBufferParams = {};
    createInputBufferParams.version = NV_ENC_CREATE_INPUT_BUFFER_VER;
    createInputBufferParams.width = m_width;
    createInputBufferParams.height = m_height;
    createInputBufferParams.memoryHeap = NV_ENC_MEMORY_HEAP_SYSMEM_CACHED;
    createInputBufferParams.bufferFmt = NV_ENC_BUFFER_FORMAT_ARGB;

    nvStatus = m_pEncodeAPI->nvEncCreateInputBuffer(m_pEncoder, &createInputBufferParams);
    if (nvStatus != NV_ENC_SUCCESS) return nvStatus;

    m_pEncoderInputBuffer = createInputBufferParams.inputBuffer;

    return nvStatus;
}

:: Create output buffer

NVENCSTATUS RenderManager::CreateOutputBuffer()
{
    NVENCSTATUS nvStatus = NV_ENC_SUCCESS;

    NV_ENC_CREATE_BITSTREAM_BUFFER createBitstreamBufferParams = {};
    createBitstreamBufferParams.version = NV_ENC_CREATE_BITSTREAM_BUFFER_VER;
    createBitstreamBufferParams.size = 2 * 1024 * 1024;
    createBitstreamBufferParams.memoryHeap = NV_ENC_MEMORY_HEAP_SYSMEM_CACHED;

    nvStatus = m_pEncodeAPI->nvEncCreateBitstreamBuffer(m_pEncoder, &createBitstreamBufferParams);
    if (nvStatus != NV_ENC_SUCCESS) return nvStatus;

    m_pEncoderOutputBuffer = createBitstreamBufferParams.bitstreamBuffer;

    return nvStatus;
}

:: Fill input buffer

NVENCSTATUS RenderManager::WriteInputBuffer(ID3D11Texture2D* pTexture)
{
    NVENCSTATUS nvStatus = NV_ENC_SUCCESS;
    HRESULT result = S_OK;

    // get data from staging texture
    D3D11_MAPPED_SUBRESOURCE mappedResource;
    result = m_pContext->Map(pTexture, 0, D3D11_MAP_READ, 0, &mappedResource);
    if (FAILED(result)) return NV_ENC_ERR_GENERIC;
    m_pContext->Unmap(pTexture, 0);

    // lock input buffer
    NV_ENC_LOCK_INPUT_BUFFER lockInputBufferParams = {};
    lockInputBufferParams.version = NV_ENC_LOCK_INPUT_BUFFER_VER;
    lockInputBufferParams.inputBuffer = m_pEncoderInputBuffer;

    nvStatus = m_pEncodeAPI->nvEncLockInputBuffer(m_pEncoder, &lockInputBufferParams);
    if (nvStatus != NV_ENC_SUCCESS) return nvStatus;

    unsigned int pitch = lockInputBufferParams.pitch;

    //ToDo: Convert R8G8B8A8 to A8R8G8B8

    // write into buffer
    memcpy(lockInputBufferParams.bufferDataPtr, mappedResource.pData, m_height * mappedResource.RowPitch);

    // unlock input buffer
    nvStatus = m_pEncodeAPI->nvEncUnlockInputBuffer(m_pEncoder, m_pEncoderInputBuffer);

    return nvStatus;
}
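
Two things in the function above may be worth checking. The pointer in mappedResource.pData is only valid until Unmap is called, yet it is read by memcpy afterwards. Also, the single memcpy uses mappedResource.RowPitch and ignores the pitch returned by nvEncLockInputBuffer, which may differ. A minimal sketch of a row-by-row copy follows; CopyRows is a hypothetical helper name, and it is an assumption that both pitches are given in bytes.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical helper (not an NVENC or D3D11 function).
// Copies `height` rows of `rowBytes` bytes each. Rows start `srcPitch` bytes
// apart in the source and `dstPitch` bytes apart in the destination, so any
// padding at the end of a row is skipped on both sides.
void CopyRows(const void* src, std::size_t srcPitch,
              void* dst, std::size_t dstPitch,
              std::size_t rowBytes, std::size_t height)
{
    const std::uint8_t* s = static_cast<const std::uint8_t*>(src);
    std::uint8_t* d = static_cast<std::uint8_t*>(dst);
    for (std::size_t y = 0; y < height; ++y) {
        std::memcpy(d + y * dstPitch, s + y * srcPitch, rowBytes);
    }
}
```

In WriteInputBuffer this would presumably be called with mappedResource.pData and mappedResource.RowPitch as the source, lockInputBufferParams.bufferDataPtr and lockInputBufferParams.pitch as the destination, and m_width * 4 as rowBytes, with the call to Unmap moved after the copy.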

:: Encode frame

NVENCSTATUS RenderManager::EncodeFrame()
{
    NVENCSTATUS nvStatus = NV_ENC_SUCCESS;

    int8_t* qpDeltaMapArray = NULL;
    unsigned int qpDeltaMapArraySize = 0;

    NV_ENC_PIC_PARAMS encPicParams = {};
    encPicParams.version = NV_ENC_PIC_PARAMS_VER;
    encPicParams.inputWidth = m_width;
    encPicParams.inputHeight = m_height;
    encPicParams.inputBuffer = m_pEncoderInputBuffer;
    encPicParams.outputBitstream = m_pEncoderOutputBuffer;
    encPicParams.bufferFmt = NV_ENC_BUFFER_FORMAT_ARGB;
    encPicParams.pictureStruct = NV_ENC_PIC_STRUCT_FRAME;
    encPicParams.qpDeltaMap = qpDeltaMapArray;
    encPicParams.qpDeltaMapSize = qpDeltaMapArraySize;

    nvStatus = m_pEncodeAPI->nvEncEncodePicture(m_pEncoder, &encPicParams);

    return nvStatus;
}

:: Read from output buffer

NVENCSTATUS RenderManager::ReadOutputBuffer()
{
    NVENCSTATUS nvStatus = NV_ENC_SUCCESS;

    // lock output buffer
    NV_ENC_LOCK_BITSTREAM lockBitstreamBufferParams = {};
    lockBitstreamBufferParams.version = NV_ENC_LOCK_BITSTREAM_VER;
    lockBitstreamBufferParams.doNotWait = 0;
    lockBitstreamBufferParams.outputBitstream = m_pEncoderOutputBuffer;

    nvStatus = m_pEncodeAPI->nvEncLockBitstream(m_pEncoder, &lockBitstreamBufferParams);
    if (nvStatus != NV_ENC_SUCCESS) return nvStatus;

    void* pData = lockBitstreamBufferParams.bitstreamBufferPtr;
    unsigned int size = lockBitstreamBufferParams.bitstreamSizeInBytes;

    // read from buffer
    PlatformManager::SaveToFile("TEST", static_cast<char*>(pData), size);

    // unlock output buffer
    nvStatus = m_pEncodeAPI->nvEncUnlockBitstream(m_pEncoder, m_pEncoderOutputBuffer);

    return nvStatus;
}
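
The post does not show whether PlatformManager::SaveToFile overwrites or appends. If it overwrites, only the last frame would survive; if the frames are written in some other way, the file could also end up broken. A minimal sketch of appending each frame's bitstream to one file in binary mode, assuming the goal is a raw H.264 elementary stream built by concatenating the per-frame output in order. AppendToFile is a hypothetical helper name.

```cpp
#include <cstddef>
#include <fstream>
#include <string>

// Hypothetical helper (not part of PlatformManager or NVENC).
// Appends `size` bytes to the end of the file at `path`, creating the file
// if it does not exist. Binary mode avoids newline translation on Windows.
bool AppendToFile(const std::string& path, const char* data, std::size_t size)
{
    std::ofstream out(path, std::ios::binary | std::ios::app);
    if (!out) return false; // file could not be opened
    out.write(data, static_cast<std::streamsize>(size));
    return static_cast<bool>(out); // false if the write failed
}
```

Opening the file once and keeping the stream open for the whole session would avoid reopening it every frame; the per-call open here only keeps the sketch short.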

m_width and m_height are always 1280 and 720.

Why is the image size wrong, and how do I have to fill the input buffer for more than one frame?

Many thanks for your help,

Alexander