I have two GPUs (a Titan X (Pascal) and a GTX 1080). I am trying to run a single-threaded graph computation. The graph consists of two independent matrix-multiplication chains, each assigned to its own GPU.
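Each chain starts from its input and multiplies by it ten more times, so it computes the 11th matrix power of that input. The final result is the product of the two powers. The following is a tiny pure-Python sketch of the same structure. The helper names and the 2×2 inputs are illustrative only and are not part of the TensorFlow code.

```python
def matmul(X, Y):
    """Naive product of two matrices stored as lists of lists (illustration only)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def chain(A, steps=10):
    """Mirror of one chain in the graph: start with B = A, then B = B @ A `steps` times."""
    B = A
    for _ in range(steps):
        B = matmul(B, A)
    return B  # equals A ** (steps + 1), i.e. A^11 for steps=10


# Tiny 2x2 matrices stand in for the 5000x5000 placeholders A1 and A2.
A1 = [[1, 1], [0, 1]]
A2 = [[2, 0], [0, 1]]
C = matmul(chain(A1), chain(A2))
print(C)  # [[2048, 11], [0, 1]]
```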

Here is the code that I'm using:

```python
import logging
import time

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder, tf.Session)
from tensorflow.python.client import timeline


def test():
    n = 5000

    with tf.Graph().as_default():
        # Both placeholders are named 'A'; TensorFlow uniquifies the second one to 'A_1'.
        A1 = tf.placeholder(tf.float32, shape=[n, n], name='A')
        A2 = tf.placeholder(tf.float32, shape=[n, n], name='A')

        # Chain 1 on the first GPU: B1 = A1 @ A1 @ ... (11 factors)
        with tf.device('/gpu:0'):
            B1 = A1
            for _ in range(10):
                B1 = tf.matmul(B1, A1)

        # Chain 2 on the second GPU: B2 = A2 @ A2 @ ... (11 factors)
        with tf.device('/gpu:1'):
            B2 = A2
            for _ in range(10):
                B2 = tf.matmul(B2, A2)

        # No device scope here, so the placer chooses the device
        # (log_device_placement shows which one).
        C = tf.matmul(B1, B2)

        run_metadata = tf.RunMetadata()

        # The session must be created inside the graph context so that it runs this graph.
        with tf.Session(config=tf.ConfigProto(log_device_placement=True)) as sess:
            start = time.time()
            logging.info('started')

            A1_ = np.random.rand(n, n)
            A2_ = np.random.rand(n, n)

            sess.run([C],
                     feed_dict={A1: A1_, A2: A2_},
                     options=tf.RunOptions(trace_level=tf.RunOptions.FULL_TRACE),
                     run_metadata=run_metadata)

            logging.info('writing trace')
            trace = timeline.Timeline(step_stats=run_metadata.step_stats)
            # A context manager makes sure the trace file is flushed and closed.
            with open('timeline.ctf.json', 'w') as trace_file:
                trace_file.write(trace.generate_chrome_trace_format())
            logging.info('trace written')

            end = time.time()
            logging.info('computed')
            logging.info(end - start)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format='%(asctime)s %(message)s')
    test()
```
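The timing above covers a single `sess.run` call. It therefore probably includes one-time work done on the first run, such as graph setup and GPU memory allocation. It also includes the `FULL_TRACE` collection and the trace export. The following is a minimal, framework-agnostic sketch of a harness that discards warm-up calls before timing. `benchmark` is a hypothetical helper, not a TensorFlow API.

```python
import time


def benchmark(fn, warmup=1, repeats=3):
    """Call fn() `warmup` times untimed, then return the durations (seconds) of `repeats` timed calls."""
    for _ in range(warmup):
        fn()  # warm-up calls absorb one-time setup costs and are not measured
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations
```

With the session above, this could be used as `benchmark(lambda: sess.run(C, feed_dict={A1: A1_, A2: A2_}))`. The `FULL_TRACE` options are left out of the timed runs so that tracing overhead does not skew the numbers.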

- It takes 20.4 seconds to finish with the two chains on separate GPUs, as above.

- It takes 14 seconds to finish if I place all ops on gpu0 (Titan X).

- It takes 19.8 seconds to finish if I place all ops on gpu1 (GTX 1080).
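Here is a back-of-the-envelope check. It assumes all 21 multiplications are dense 5000×5000 products costing 2n³ FLOPs each, which totals about 5.25 TFLOP of work. Under that assumption, the wall-clock times above correspond to only a few hundred GFLOP/s. Both cards are rated at several TFLOP/s of peak FP32 throughput (per NVIDIA's published specifications). This suggests, though does not prove, that the measured time is dominated by something other than the multiplications themselves.

```python
def matmul_flops(n):
    """FLOPs of one n x n by n x n matrix product: n^3 multiply-adds, 2 FLOPs each."""
    return 2 * n ** 3


n = 5000
num_matmuls = 10 + 10 + 1  # two chains of 10, plus the final C = B1 @ B2
total_flops = num_matmuls * matmul_flops(n)

# Measured wall-clock times from the list above, in seconds.
measured = {"both GPUs": 20.4, "all on Titan X": 14.0, "all on GTX 1080": 19.8}
rates = {label: total_flops / seconds / 1e9 for label, seconds in measured.items()}

for label, gflops in rates.items():
    print(f"{label}: {gflops:.0f} GFLOP/s")
# both GPUs: 257 GFLOP/s
# all on Titan X: 375 GFLOP/s
# all on GTX 1080: 265 GFLOP/s
```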

I can see that TensorFlow found both GPUs and placed all ops on the devices I assigned. Why is there no speedup from using two GPUs instead of one? Could the problem be that the GPUs are different models (AFAIK CUDA allows mixing them)?
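A simple makespan model helps with the heterogeneity question. It rests on strong assumptions: each run's time is proportional to its number of matmuls, the final product lands on gpu0, and the inter-GPU copy of `B2` is negligible. Under these assumptions, the slower GTX 1080 chain should bound the two-GPU time, but at roughly 10 seconds rather than 20. If that holds even approximately, the model mismatch alone would not explain the missing speedup.

```python
# Per-matmul cost estimated from the single-GPU runs (21 matmuls each),
# assuming time scales linearly with the number of matmuls (an optimistic simplification).
t_titan = 14.0 / 21
t_1080 = 19.8 / 21

# Ideal split: both 10-matmul chains run concurrently, then C = B1 @ B2 runs on gpu0.
# The ~100 MB copy of B2 from gpu1 to gpu0 is ignored here.
ideal = max(10 * t_titan, 10 * t_1080) + t_titan
print(f"ideal two-GPU time ~ {ideal:.1f} s vs measured 20.4 s")
# ideal two-GPU time ~ 10.1 s vs measured 20.4 s
```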

Thanks.

EDIT: I updated the code to use different initial matrices for the two chains, since otherwise TensorFlow seems to apply some optimizations.
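One optimization that could in principle produce this is common-subexpression elimination (CSE). If both chains read the same placeholder, their matmuls are identical and only need to be computed once. I have not verified that TensorFlow actually did this here. The following toy sketch shows the idea on a hypothetical mini graph representation, not on TensorFlow's own graph or optimizer code.

```python
def cse(nodes):
    """Common-subexpression elimination over (name, op, input_names) nodes in topological order.

    Returns a dict mapping every node name to the name of the node that replaces it.
    """
    canonical = {}  # node name -> canonical node name
    seen = {}       # (op, canonical inputs) -> canonical node name
    for name, op, inputs in nodes:
        if op == "placeholder":
            key = (op, name)  # distinct placeholders are never merged
        else:
            key = (op, tuple(canonical[i] for i in inputs))
        canonical[name] = seen.setdefault(key, name)
    return canonical


def chain_nodes(prefix, input_name, steps=3):
    """Hypothetical node list for B = X; B = matmul(B, X), repeated `steps` times."""
    nodes, prev = [], input_name
    for i in range(steps):
        name = f"{prefix}_matmul_{i}"
        nodes.append((name, "matmul", [prev, input_name]))
        prev = name
    return nodes


# Same input for both chains: the second chain collapses onto the first.
shared = [("A", "placeholder", [])] + chain_nodes("gpu0", "A") + chain_nodes("gpu1", "A")
print(len(set(cse(shared).values())))    # 4: A plus a single 3-step chain

# Different inputs: nothing can be merged.
separate = ([("A1", "placeholder", []), ("A2", "placeholder", [])]
            + chain_nodes("gpu0", "A1") + chain_nodes("gpu1", "A2"))
print(len(set(cse(separate).values())))  # 8: two placeholders plus six matmuls
```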

Here is a link to the timeline profile JSON file: https://api.myjson.com/bins/23csi

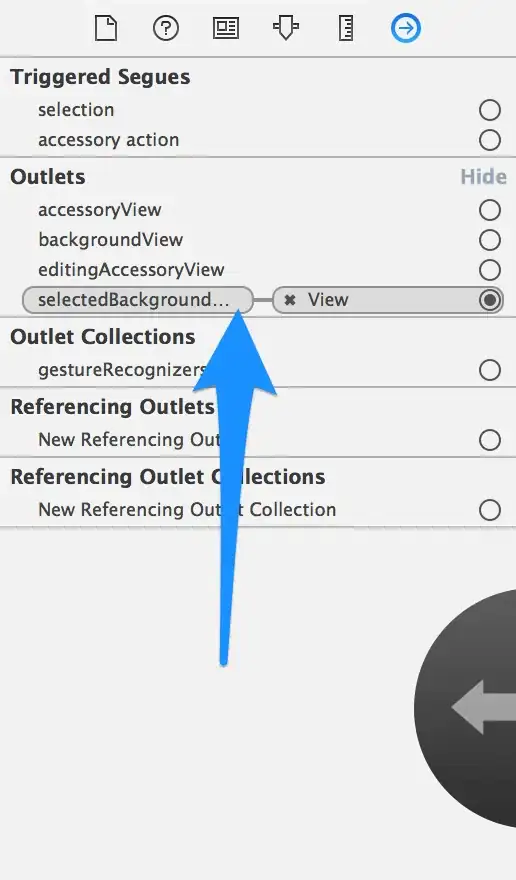
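TensorFlow's `Timeline` writes Chrome trace-event JSON. In that format, each pid is a track whose label comes from a `process_name` metadata (`"ph": "M"`) event, and each op is a complete (`"ph": "X"`) event with `ts` and `dur` in microseconds. To see which pid corresponds to which device, and where the time goes, a small sketch that tallies op durations per process could look like this. `summarize_trace` is a hypothetical helper.

```python
from collections import defaultdict


def summarize_trace(trace):
    """Return {process label: {op name: total duration in microseconds}} for 'X' events.

    Pids are turned into readable labels via 'process_name' metadata events when present.
    """
    events = trace.get("traceEvents", [])
    labels = {ev["pid"]: ev["args"]["name"]
              for ev in events
              if ev.get("ph") == "M" and ev.get("name") == "process_name"}
    totals = defaultdict(lambda: defaultdict(int))
    for ev in events:
        if ev.get("ph") == "X":
            label = labels.get(ev["pid"], f"pid {ev['pid']}")
            totals[label][ev["name"]] += ev.get("dur", 0)
    return {label: dict(ops) for label, ops in totals.items()}
```

For example, after `import json`, call `summarize_trace(json.load(open('timeline.ctf.json')))` and sort each process's ops by total duration to see the dominant ones.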

This timeline raises more questions than answers:

- Why does pid 7 (gpu0) have two lines of execution?

- What are the long MatMuls in pid 3 and pid 5? (input0 "_recv_A_0/_3", input1 "_recv_A_0/_3", name "MatMul", op "MatMul")

- It seems that every op is executed on gpu0 except those in pid 5.

- There are a lot of small MatMul ops (not visible in the screenshot) right after the long MatMul ops in pid 3 and pid 5. What are these?
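The same trace can show whether the two GPUs ever actually run at the same time. The approach is to merge each process's busy intervals (across all of its tids, which also covers the "two lines" in pid 7) and measure how long both are busy simultaneously. An overlap near zero would mean the chains were effectively serialized. The sketch below assumes the events have already been loaded from `timeline.ctf.json` and that you know which pids are the two GPUs' compute tracks. I have not verified which ones those are.

```python
def busy_intervals(events, pid):
    """Merged [start, end] busy intervals (microseconds) of 'X' events on one pid, across all tids."""
    spans = sorted((e["ts"], e["ts"] + e.get("dur", 0))
                   for e in events if e.get("ph") == "X" and e["pid"] == pid)
    merged = []
    for start, end in spans:
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)  # extend the current interval
        else:
            merged.append([start, end])
    return merged


def overlap_us(a, b):
    """Total time during which both sorted, non-overlapping interval lists are busy."""
    i = j = total = 0
    while i < len(a) and j < len(b):
        lo = max(a[i][0], b[j][0])
        hi = min(a[i][1], b[j][1])
        total += max(0, hi - lo)
        # Advance whichever interval ends first.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return total
```

For example, with `ev = json.load(f)["traceEvents"]`, `overlap_us(busy_intervals(ev, 7), busy_intervals(ev, 5))` gives the concurrent busy time of pids 7 and 5. That result can be compared with each pid's total busy time.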