I don't understand how to use LDA just for dimensionality reduction.

I have a 75x65 matrix with 64 features and 1 column for the class index. This matrix can be found here.

I am trying to use LDA for dimensionality reduction, using this function from sklearn.

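    from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.model_selection import train_test_split

    def classify(featureMatrix):
        # Columns 0-63 are the 64 features; column 64 is the class index
        X, y = featureMatrix[:, :64], featureMatrix[:, 64]
        X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20)

        # Fit LDA on the training split only, then project both splits
        lda = LinearDiscriminantAnalysis(n_components=2)
        X_train = lda.fit_transform(X_train, y_train)
        X_test = lda.transform(X_test)

        rf = RandomForestClassifier(n_estimators=10, criterion="gini", max_depth=20)
        rf.fit(X_train, y_train)
        print(rf.score(X_test, y_test))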

However, my classification score is usually low (20-30%). The problem seems to arise when I transform the test data.

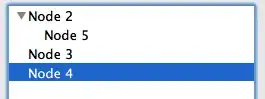

For example, when I plot X_train after the dimensionality reduction I have:

Which has good class separation.

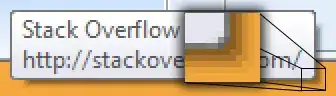

But when I transform the test set and plot X_test, I have this:

Which has no apparent pattern and is far from what we could see in our training dataset.

I hypothesise that this could be a result of a small dataset (only 75 samples equally distributed in 5 classes), but this data is really difficult to gather unfortunately.
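To put rough numbers on that hypothesis, here is a minimal sketch using synthetic Gaussian data in place of my real matrix (the data, the seed and the 12-samples-per-class split are assumptions for illustration only). An 80/20 split leaves about 60 training samples for 64 features, and the pooled within-class scatter matrix that LDA relies on can have rank at most (number of samples - number of classes), which is below the number of features here:

```python
import numpy as np

# Synthetic stand-in for the training split (assumption: 12 samples per class)
rng = np.random.default_rng(0)
n_classes, n_per_class, n_features = 5, 12, 64
n_samples = n_classes * n_per_class  # 60, i.e. 80% of 75

X = rng.normal(size=(n_samples, n_features))
y = np.repeat(np.arange(n_classes), n_per_class)

# Pooled within-class scatter: sum of per-class centered X^T X
Sw = np.zeros((n_features, n_features))
for c in range(n_classes):
    Xc = X[y == c]
    Xc = Xc - Xc.mean(axis=0)
    Sw += Xc.T @ Xc

rank_sw = np.linalg.matrix_rank(Sw)
print("rank of within-class scatter:", rank_sw)
print("upper bound (samples - classes):", n_samples - n_classes)
print("number of features:", n_features)
```

If the scatter matrix is rank-deficient like this, there are directions with no within-class variance in the training data, which might explain why the training projection looks so clean while the test projection does not.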

I've read in several places that people apply LDA to the whole dataset before splitting it into training and test sets and classifying it with another classifier (this way I could get an error below 10%), but I've also heard many people say I should do it the way shown in my code. If I am only using LDA for dimensionality reduction, which way is correct?
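To make the two procedures concrete, here is a minimal sketch of both, run on pure-noise features with the same shape as my data (75 samples, 64 features, 5 balanced classes). The synthetic data, the seeds and the use of 5-fold cross-validation instead of a single split are assumptions for illustration, not my actual setup. Since the features carry no class information, a score far above chance (0.2) would have to come from the procedure rather than from the data:

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline

# Pure-noise stand-in for the real matrix (assumption for illustration)
rng = np.random.default_rng(0)
X = rng.normal(size=(75, 64))
y = np.repeat(np.arange(5), 15)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
rf = RandomForestClassifier(n_estimators=10, max_depth=20, random_state=0)

# Way 1: fit LDA on the whole dataset (all labels), then evaluate the classifier
X_all = LinearDiscriminantAnalysis(n_components=2).fit_transform(X, y)
score_lda_first = cross_val_score(rf, X_all, y, cv=cv).mean()

# Way 2: LDA is part of the model and is refit on each training fold only
pipe = make_pipeline(LinearDiscriminantAnalysis(n_components=2), rf)
score_lda_in_fold = cross_val_score(pipe, X, y, cv=cv).mean()

print("LDA fitted on all data first:", score_lda_first)
print("LDA fitted inside each fold: ", score_lda_in_fold)
```

The second variant corresponds to the code in my question, except that the split is repeated over several folds, which should give a less noisy estimate than a single 15-sample test set.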