I wrote a WAV parser. I read the sample data out of the `data` chunk like this:

```csharp
while (offset < size)
{
    var value = 0;
    switch (bits_channel)
    {
        case 8:
            value = data.SubArray(offset, 1)[0];
            break;
        case 16:
            value = BitConverter.ToInt16(data.SubArray(offset, 2), 0x0);
            break;
        default:
            value = BitConverter.ToInt32(data.SubArray(offset, bits_channel / 8), 0x0);
            break;
    }
    samples[channel].Add(value);
    //Tools.Log("Read value: " + samples[channel].Last() + ", " + bits_channel);
    offset += bits_channel;
    channel = (channel + 1) % numchannels;
}
```

where `SubArray` is a helper function that creates a subarray from a start index and a length, and `offset` is the current offset, in bits, into the data.
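For reference, a minimal sketch of a `SubArray` helper matching that description might look like the following. It is hypothetical (the actual helper isn't shown), it is written as a plain local function rather than the extension method the loop calls, and it treats the start index and length as positions in the source array, i.e. bytes for a `byte[]`.

```csharp
using System;

// Hypothetical stand-in for the SubArray helper described above:
// copies `length` elements of `source`, starting at element `index`.
// The original is presumably an extension method (data.SubArray(offset, length)).
static T[] SubArray<T>(T[] source, int index, int length)
{
    var result = new T[length];
    // Array.Copy throws if the requested range runs past the end of `source`.
    Array.Copy(source, index, result, 0, length);
    return result;
}

// Four bytes holding two little-endian 16-bit samples.
byte[] data = { 0x01, 0x00, 0xFF, 0xFF };

// BitConverter uses the host's byte order; on a little-endian host
// these decode to 1 and -1.
short first = BitConverter.ToInt16(SubArray(data, 0, 2), 0);
short second = BitConverter.ToInt16(SubArray(data, 2, 2), 0);
Console.WriteLine($"{first}, {second}");
```

Note that `BitConverter` follows the machine's endianness, while WAV sample data is little-endian, so this decoding matches the file only on little-endian hosts.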

My test file looks like this in Audacity:

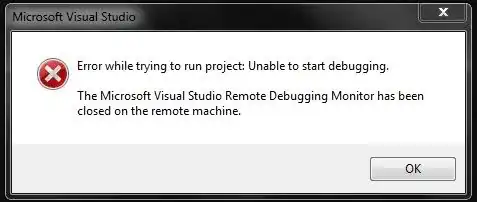

but like this when I read it into my parser:

You can see where the loud section is on the timeline, but the parts that are almost completely silent in Audacity show up at maximum amplitude in my program. What could be causing this?

…, which stores the sample data as `samples[channel][samplenum]`, and `channel` is an integer that is incremented, modulo the number of channels, every time I read a sample value.

– Tom Doodler Oct 27 '16 at 18:10
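The `samples[channel][samplenum]` layout and the wrapping channel counter from the comment can be sketched in isolation as below. This assumes `samples` is a `List<List<int>>` with one inner list per channel (the comment's type declaration is cut off), and it uses made-up values in place of decoded samples. PCM WAV data stores samples interleaved frame by frame, so for stereo the order is left, right, left, right.

```csharp
using System;
using System.Collections.Generic;

// Assumption: one List<int> per channel, so samples[channel][samplenum]
// is sample number `samplenum` of that channel.
int numchannels = 2;
var samples = new List<List<int>>();
for (int c = 0; c < numchannels; c++)
    samples.Add(new List<int>());

// Made-up interleaved values in frame order: L0 R0 L1 R1 L2 R2
int[] interleaved = { 10, -10, 20, -20, 30, -30 };

int channel = 0;
foreach (int value in interleaved)
{
    samples[channel].Add(value);
    // Move to the next channel, wrapping back to 0 after the last one.
    channel = (channel + 1) % numchannels;
}

Console.WriteLine(string.Join(" ", samples[0])); // 10 20 30
Console.WriteLine(string.Join(" ", samples[1])); // -10 -20 -30
```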