It's clear by now that all steps in a transformation are executed in parallel and that there's no way to change this behavior in Pentaho.

Given that, we have a scenario in which a switch step checks a specific field (read from the filename) and decides which step (a mapping, i.e. a sub-transformation) will process that file. This is part of generic logic that, before and after each mapping step, performs boilerplate tasks such as updating DB records, sending emails, etc.

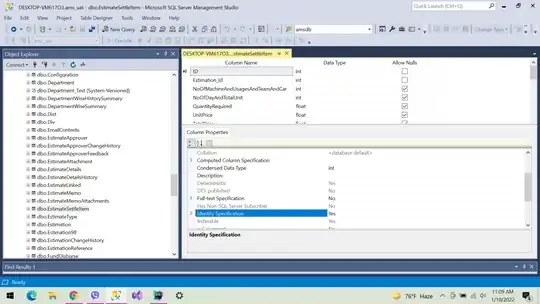

The problem is: if there are no "ACCC014" files, this sub-transformation should not be executed at all. I understand that's not possible, since all steps run in parallel, which leads to the second problem: some mappings create XML files, and even when Pentaho runs such a mapping with no input rows, we can't find a way to prevent the XML output file from being created.
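
To make the second problem concrete, here is a simplified Python model of a transformation in which every step runs as its own thread and steps are connected by queues. It is not Pentaho's actual implementation; `run_pipeline`, the `type` field and the branch names are hypothetical. The switch step routes rows by `type`, and the XML-writing branch starts whether or not it ever receives a row. With `create_at_start=True` the output file appears even for empty input; deferring the open until the first row arrives (presumably what an option like "Do not create file at start" is meant to do) avoids that.

```python
import os
import queue
import tempfile
import threading

_END = object()  # sentinel marking the end of a row stream


def run_pipeline(rows, out_path, create_at_start):
    """Hypothetical model: a switch step routes rows by their 'type' field,
    and every target branch runs as its own thread, even if it gets no rows.
    Returns True if the XML output file exists afterwards."""
    branches = {"ACCC014": queue.Queue(), "OTHER": queue.Queue()}

    def switch_step():
        for row in rows:
            target = row["type"] if row["type"] in branches else "OTHER"
            branches[target].put(row)
        for q in branches.values():
            q.put(_END)  # every branch is told the stream is finished

    def xml_output_step(q):
        # Eager mode opens (and therefore creates) the file before any row arrives.
        handle = open(out_path, "w") if create_at_start else None
        while (row := q.get()) is not _END:
            if handle is None:
                handle = open(out_path, "w")  # lazy mode: open on the first row
            handle.write(f"<row>{row['name']}</row>\n")
        if handle is not None:
            handle.close()

    def other_step(q):
        while q.get() is not _END:
            pass  # stand-in for a mapping that writes no file

    threads = [
        threading.Thread(target=switch_step),
        threading.Thread(target=xml_output_step, args=(branches["ACCC014"],)),
        threading.Thread(target=other_step, args=(branches["OTHER"],)),
    ]
    for t in threads:
        t.start()  # all steps start at once, as in a transformation
    for t in threads:
        t.join()
    return os.path.exists(out_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        no_accc014 = [{"type": "XYZ001", "name": "a"}]
        print(run_pipeline(no_accc014, os.path.join(tmp, "eager.xml"), True))   # True
        print(run_pipeline(no_accc014, os.path.join(tmp, "lazy.xml"), False))   # False
```

In this model the XML branch always runs; the only thing that decides whether an empty file appears is when the output is opened.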

We thought about moving this switch logic to the job, since job entries run sequentially, but found no conditional job entry that can make this kind of distinction.

We also looked at the Metadata Injection step, but we don't believe it's the way to go: each sub-transformation does very different work. Some update tables, others write files, and others move data between different databases. All of them receive a file as input and return a send_email flag and a message string; nothing else.
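
For comparison, the shared contract described above (every sub-transformation takes a file and returns a `send_email` flag and a message) can be modeled outside Pentaho as a fixed result type plus a lookup table, where only the selected handler runs and it runs serially. This is a plain-Python sketch of the idea, not a Pentaho feature; `MappingResult`, the handler functions and the filename-prefix rule are all hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class MappingResult:
    """The shared output contract: every mapping returns these two values."""
    send_email: bool
    message: str


# Hypothetical handlers standing in for the real sub-transformations.
def process_accc014(path: str) -> MappingResult:
    return MappingResult(send_email=True, message=f"ACCC014 processed: {path}")


def process_unknown(path: str) -> MappingResult:
    return MappingResult(send_email=False, message=f"no mapping for: {path}")


# File type -> handler; only the matching entry is ever called.
MAPPINGS: Dict[str, Callable[[str], MappingResult]] = {
    "ACCC014": process_accc014,
}


def file_type(path: str) -> str:
    # Hypothetical naming rule: the type is the part of the filename before "_".
    return os.path.basename(path).split("_", 1)[0]


def run_for_file(path: str) -> MappingResult:
    # Boilerplate "before" work (e.g. updating a DB record) would go here.
    handler = MAPPINGS.get(file_type(path), process_unknown)
    result = handler(path)  # no other handler is started
    # Boilerplate "after" work (e.g. sending an email if result.send_email).
    return result
```

For example, `run_for_file("/in/ACCC014_20240101.txt")` returns `MappingResult(send_email=True, message='ACCC014 processed: /in/ACCC014_20240101.txt')`, while a file with no registered type falls through to `process_unknown` and nothing else runs.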

Is there a way to achieve what we want? Or is there no way to reuse part of the logic based on shared inputs/outputs?

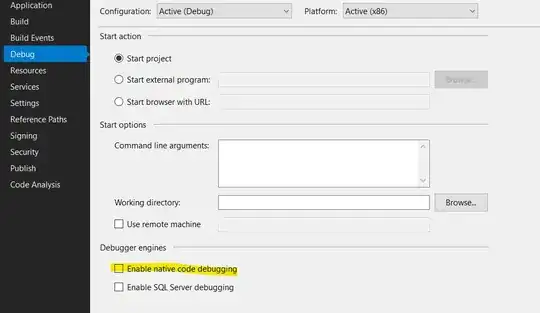

Edit: added the ACCC014 transformation. Yes, the "Do not create file at start" option is checked.