I have a function find_country_from_ip which takes an IP address and, after some processing, returns a country, like below:

    def find_country_from_ip(ip):
        # Do some processing
        return country

I am using the function inside the apply method, like below:

    df['Country'] = df.apply(lambda x: find_country_from_ip(x['IP']), axis=1)

The goal is straightforward: I want to compute a new column from an existing column in a DataFrame that has more than 400,000 rows.

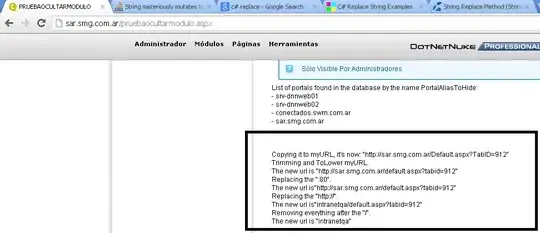

It runs, but it is terribly slow and emits a warning like the one below:

    ...........: SettingWithCopyWarning:
    A value is trying to be set on a copy of a slice from a DataFrame.
    Try using .loc[row_indexer,col_indexer] = value instead

    See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy
      if __name__ == '__main__':

I understand the problem, but can't quite figure out how to use loc with apply and lambda.
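For reference, here is a minimal sketch of how this warning commonly arises and one way it is often avoided. It assumes `df` was produced by filtering a larger frame, which the question does not actually show. The `raw` frame, the `Hits` column, and the stub lookup are hypothetical stand-ins so that the snippet runs on its own.

```python
import pandas as pd

def find_country_from_ip(ip):
    # Hypothetical stand-in for the real lookup, so the sketch is runnable.
    table = {"1.1.1.1": "Australia", "8.8.8.8": "United States"}
    return table.get(ip, "Unknown")

# Hypothetical source data; the real DataFrame is not shown in the question.
raw = pd.DataFrame({
    "IP": ["1.1.1.1", "9.9.9.9", "8.8.8.8"],
    "Hits": [3, 0, 7],
})

# Filtering yields an object pandas may treat as a slice of `raw`.
# An explicit .copy() makes `df` an independent DataFrame.
df = raw[raw["Hits"] > 0].copy()

# Assign the whole column through .loc. Series.map calls the function once
# per value, which replaces the row-wise apply with axis=1.
df.loc[:, "Country"] = df["IP"].map(find_country_from_ip)

print(df)
```

With the explicit copy in place, a plain `df['Country'] = ...` assignment should also stop triggering the warning; `.loc` is shown only because the warning text suggests it.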

N.B. Please suggest a more efficient alternative if you know one that produces the same end result.

**** EDIT ********

The function is mainly a lookup against an mmdb database, like below:

    import re
    import subprocess

    def find_country_from_ip(ip):
        # Ask mmdblookup for the English country name of this IP.
        result = subprocess.Popen("mmdblookup --file GeoIP2-Country.mmdb --ip {} country names en".format(ip).split(" "), stdout=subprocess.PIPE).stdout.read()
        if result:
            return re.search(r'\"(.+?)\"', result).group(1)
        else:
            # No country entry found: fall back to the registered country.
            final_output = subprocess.Popen("mmdblookup --file GeoIP2-Country.mmdb --ip {} registered_country names en".format(ip).split(" "), stdout=subprocess.PIPE).stdout.read()
            return re.search(r'\"(.+?)\"', final_output).group(1)

This is admittedly a costly operation, and with a DataFrame of more than 400,000 rows it is bound to take time. But how much? That is the question. It currently takes about 2 hours, which seems like a lot to me.
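Since each call spawns at least one external process, one possible way to cut the cost is to avoid looking up the same IP twice. The sketch below uses `functools.lru_cache` for that. It assumes that many rows share the same IP, which may or may not hold for this data. `slow_lookup` is a hypothetical stand-in for the subprocess-based function above, and the counter exists only to show how many real lookups happen.

```python
from functools import lru_cache

lookup_calls = 0  # counts how often the expensive lookup actually runs

def slow_lookup(ip):
    # Hypothetical stand-in for the mmdblookup subprocess call.
    global lookup_calls
    lookup_calls += 1
    return "country-of-" + ip

@lru_cache(maxsize=None)
def cached_lookup(ip):
    # The first call for a given IP does the real work;
    # repeated calls with the same IP are served from the cache.
    return slow_lookup(ip)

ips = ["1.1.1.1", "8.8.8.8", "1.1.1.1", "8.8.8.8", "1.1.1.1"]
countries = [cached_lookup(ip) for ip in ips]

print(lookup_calls)  # number of expensive lookups performed
```

Applied to the DataFrame, the same idea would be `df['IP'].map(cached_lookup)`. The saving depends entirely on how many distinct IPs the column contains.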