I'm attempting to perform a cohort analysis on a very large table. My test table has ~30M rows (production has more than double that). The query fails in BigQuery with "resources exceeded..." and requires billing tier 18 (tier 1 is $5 per TB processed, so tier 18 works out to $90 per TB!).

The query:

```sql
with cohort_active_user_count as (
  select
    DATE(`BQ_TABLE`.created_at, '-05:00') as created_at,
    count(distinct `BQ_TABLE`.bot_user_id) as count,
    `BQ_TABLE`.bot_id as bot_id
  from `BQ_TABLE`
  group by created_at, bot_id
)

select created_at, period as period,
  active_users, retained_users, retention, bot_id
from (
  select
    DATE(`BQ_TABLE`.created_at, '-05:00') as created_at,
    DATE_DIFF(DATE(future_message.created_at, '-05:00'), DATE(`BQ_TABLE`.created_at, '-05:00'), DAY) as period,
    max(cohort_size.count) as active_users, -- all equal in group
    count(distinct future_message.bot_user_id) as retained_users,
    count(distinct future_message.bot_user_id) / max(cohort_size.count) as retention,
    `BQ_TABLE`.bot_id as bot_id
  from `BQ_TABLE`
  left join `BQ_TABLE` as future_message on
    `BQ_TABLE`.bot_user_id = future_message.bot_user_id
    and `BQ_TABLE`.created_at < future_message.created_at
    and TIMESTAMP_ADD(`BQ_TABLE`.created_at, interval 720 HOUR) >= future_message.created_at
    and `BQ_TABLE`.bot_id = future_message.bot_id
  left join cohort_active_user_count as cohort_size on
    DATE(`BQ_TABLE`.created_at, '-05:00') = cohort_size.created_at
    and `BQ_TABLE`.bot_id = cohort_size.bot_id
  group by 1, 2, bot_id) t
where period is not null
  and bot_id = 80
order by created_at, period, bot_id
```
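To pin down exactly what the query computes (handy for checking any rewritten version against it), here is a plain-Python sketch of the same logic over an in-memory list of messages. It assumes each message is a `(created_at, bot_user_id, bot_id)` tuple with timezone-aware timestamps; the function name `cohort_retention` and the sample data are hypothetical. The nested loop is deliberately naive: a SQL engine joins on `(bot_user_id, bot_id)`, but for a user with k messages inside a 720-hour window it still produces on the order of k² matched pairs before the `count(distinct ...)`, which is where the intermediate result grows.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone

# Same fixed offset as DATE(..., '-05:00') in the query.
LOCAL_TZ = timezone(timedelta(hours=-5))
# Same window as TIMESTAMP_ADD(created_at, INTERVAL 720 HOUR).
WINDOW = timedelta(hours=720)


def cohort_retention(messages):
    """Hypothetical reference implementation of the query's output.

    messages: list of (created_at: aware datetime, bot_user_id, bot_id).
    Returns {(cohort_date, period, bot_id): (active_users, retained_users, retention)}.
    """
    # cohort_active_user_count: distinct users per (local date, bot).
    active = defaultdict(set)
    for ts, user, bot in messages:
        active[(ts.astimezone(LOCAL_TZ).date(), bot)].add(user)

    # The self-join: for each message, later messages from the same user and
    # bot within the window. Messages with no match would get period NULL in
    # the SQL and be filtered out, so here they simply contribute nothing.
    retained = defaultdict(set)
    for ts, user, bot in messages:
        cohort_date = ts.astimezone(LOCAL_TZ).date()
        for fts, fuser, fbot in messages:
            if fuser == user and fbot == bot and ts < fts <= ts + WINDOW:
                # DATE_DIFF(..., DAY) on the local calendar dates.
                period = (fts.astimezone(LOCAL_TZ).date() - cohort_date).days
                retained[(cohort_date, period, bot)].add(fuser)

    result = {}
    for (cohort_date, period, bot), users in retained.items():
        n_active = len(active[(cohort_date, bot)])
        result[(cohort_date, period, bot)] = (n_active, len(users), len(users) / n_active)
    return result
```

For example, a user who messages on January 1 and again on January 2, alongside a second user who only messages on January 1, yields a single row for the January 1 cohort: period 1, 2 active users, 1 retained user, retention 0.5.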

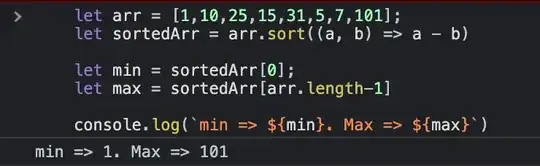

Here is the desired output:

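From my understanding of BigQuery, the joins are causing a major performance hit: the self-join pairs every message with every later message from the same user and bot in the following 720 hours, and each BigQuery node has to process that intermediate result. The table is partitioned by day, which this query doesn't take advantage of yet, but even with partition pruning I expect the query itself will still need to be optimized.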
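One common way to shrink a self-join like this is to collapse the table to one row per user, bot and local day before joining, and to apply the `bot_id` filter before the join instead of after the aggregation. The sketch below illustrates that idea using Python's built-in `sqlite3` as a stand-in for BigQuery, so the date handling differs: the local day is precomputed in Python as an integer ordinal instead of `DATE(created_at, '-05:00')`, and `DATE_DIFF` becomes plain subtraction. It also changes the semantics on purpose: it counts returns on calendar days 1 to 30 after the cohort day rather than within 720 hours of each message, so same-day repeat messages (period 0) are not counted. The `messages` table layout and the `daily_retention` function are hypothetical.

```python
import sqlite3
from datetime import datetime, timedelta, timezone

LOCAL_TZ = timezone(timedelta(hours=-5))  # matches DATE(created_at, '-05:00')

DAILY_RETENTION_SQL = """
WITH daily AS (
  -- One row per user, bot and local day; the bot filter runs before the join.
  SELECT DISTINCT bot_user_id, bot_id, day
  FROM messages
  WHERE bot_id = :bot_id
),
cohort_size AS (
  -- daily is already distinct per user, so COUNT(*) counts distinct users.
  SELECT day, bot_id, COUNT(*) AS active_users
  FROM daily
  GROUP BY day, bot_id
)
SELECT d.day AS created_at,
       f.day - d.day AS period,
       MAX(c.active_users) AS active_users,
       COUNT(DISTINCT f.bot_user_id) AS retained_users,
       COUNT(DISTINCT f.bot_user_id) * 1.0 / MAX(c.active_users) AS retention,
       d.bot_id AS bot_id
FROM daily AS d
JOIN daily AS f
  ON f.bot_user_id = d.bot_user_id
 AND f.bot_id = d.bot_id
 AND f.day > d.day
 AND f.day <= d.day + 30
JOIN cohort_size AS c
  ON c.day = d.day AND c.bot_id = d.bot_id
GROUP BY d.day, f.day - d.day, d.bot_id
ORDER BY created_at, period
"""


def daily_retention(messages, bot_id):
    """Hypothetical helper: run the day-level query over (created_at, bot_user_id, bot_id) tuples."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE messages (bot_user_id TEXT, bot_id INTEGER, day INTEGER)")
    # Store the local calendar day as an ordinal so day arithmetic is integer math.
    conn.executemany(
        "INSERT INTO messages VALUES (?, ?, ?)",
        [(user, bot, ts.astimezone(LOCAL_TZ).date().toordinal()) for ts, user, bot in messages],
    )
    rows = conn.execute(DAILY_RETENTION_SQL, {"bot_id": bot_id}).fetchall()
    conn.close()
    return rows
```

In BigQuery the same shape would compute `DATE(created_at, '-05:00')` inside the `daily` subquery and use `DATE_DIFF` in the outer select. With a day-partitioned table, a filter on the partitioning column (`_PARTITIONTIME` for ingestion-time partitioning) inside `daily` would additionally limit how much data is scanned. Whether this alone keeps the production query within resource limits is not something this sketch shows.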

How can this query be optimized, or rewritten without joins, so that BigQuery can process it more efficiently in parallel?