I have granular data stored in Redshift, and I want to build an aggregate table from it on a regular schedule. I'd like to use AWS Data Pipeline to do this.

Let's say, for the sake of discussion, that I have a table of all flights. I want to generate a table of airports and the number of flights originating at each one. Because the flights table is large (and I may want to join in data from other tables), I'd rather build a derived table than repeat the aggregation everywhere it's needed.
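To make the goal concrete, here is a rough sketch of the aggregation I have in mind. The source table `data.flights` and its `origin_airport_id` column are placeholder names for illustration, not my real schema:

```sql
-- Hypothetical names: data.flights and origin_airport_id are placeholders.
-- One row per origin airport, with the number of flights that start there.
SELECT origin_airport_id AS airport_id,
       COUNT(*)          AS flights
FROM data.flights
GROUP BY origin_airport_id;
```

The derived table would hold the result of a query along these lines.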

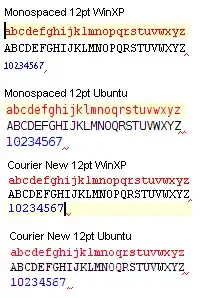

I set up my Data Pipeline. It looks like so

It runs this SQL, which I entered in the Create Table Sql field:

    CREATE TABLE IF NOT EXISTS data.airports (
        airport_id int not null
        ,flights int null);

I am able to save the pipeline (no errors), but after I activate it, the table never shows up. I've checked the (few) parameters involved, and nothing stands out as obviously wrong. I tweaked some of them anyway, but the table still isn't created.

Where should I start looking?