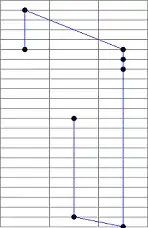

I have feature vectors of 180 elements each and have applied PCA to them. The problem is that the first PC has a high variance, but according to this biplot of PC1 vs. PC2, that seems to be caused by a single outlier, which is strange to me.

Apparently the first PC is not the best indicator for classification here.

Here is also the biplot for PC2 vs. PC3:

I am using R for this. Any suggestions as to why this is happening and how I can solve it? Should I remove the outliers? If so, what is the best way to do that in R?

--Edit

I am calling `prcomp(features.df, center = TRUE, scale = TRUE)`, so the data is centered and scaled to unit variance before the PCA.
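To make the setup concrete, here is a minimal sketch of how one might check whether a single observation is driving PC1 and see what happens when it is left out. Everything beyond the `prcomp` call is an assumption for illustration. The data is synthetic and only stands in for `features.df`, the cutoff of 5 is arbitrary, and the robust z-score on the PC1 scores is just one possible way to flag outliers, not necessarily the best one.

```r
# Synthetic stand-in for features.df (hypothetical data, not the real features):
# 100 observations of 180 features, with one extreme row planted on purpose.
set.seed(1)
features.df <- as.data.frame(matrix(rnorm(100 * 180), nrow = 100))
features.df[1, ] <- features.df[1, ] + 25

# Same call as in the question; `scale.` is the full name of the argument.
pca <- prcomp(features.df, center = TRUE, scale. = TRUE)

# Proportion of total variance carried by each component.
var_explained <- pca$sdev^2 / sum(pca$sdev^2)
print(round(var_explained[1:3], 3))

# Robust z-score of the PC1 scores: distance from the median in MAD units.
# The median and MAD are barely affected by a single extreme point.
pc1 <- pca$x[, 1]
robust_z <- (pc1 - median(pc1)) / mad(pc1)

# Flag rows beyond an arbitrary cutoff (assumption: 5 robust SDs).
keep <- abs(robust_z) <= 5
outlier_rows <- which(!keep)
print(outlier_rows)

# Refit without the flagged rows and compare the variance of PC1.
pca_clean <- prcomp(features.df[keep, ], center = TRUE, scale. = TRUE)
var_explained_clean <- pca_clean$sdev^2 / sum(pca_clean$sdev^2)
print(round(var_explained_clean[1:3], 3))
```

If the share of variance on PC1 drops sharply after the refit, that suggests PC1 was mostly describing the flagged observation rather than structure shared by the rest of the data. Whether such a point should actually be discarded depends on whether it is a measurement error or a genuine observation.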