I'm trying to implement a custom loss layer in Caffe using a Python layer. I've used this example as a guide and have written the forward function as follows:

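    def forward(self, bottom, top):
        score = 0
        # element-wise product of the two bottom blobs
        self.mult[...] = np.multiply(bottom[0].data, bottom[1].data)
        # element-wise square root of that product
        self.multAndsqrt[...] = np.sqrt(self.mult)
        # loss = -log(sum(sqrt(bottom[0] * bottom[1])))
        top[0].data[...] = -math.log(np.sum(self.multAndsqrt))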

However, the second task, implementing the backward function, is much more difficult for me, as I'm totally unfamiliar with Python. Please help me code the backward pass.

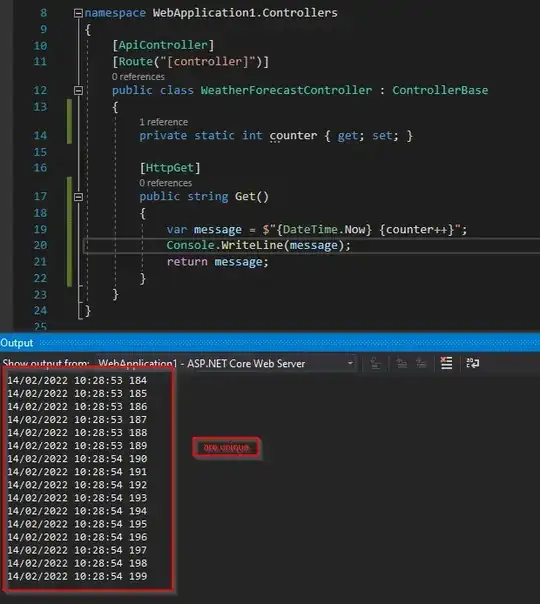

Here is the cost function and its derivative for stocashtic gradient decent to be implemented:

Note that p[i] in the table indicates the ith output neuron value.
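
For reference, here is a minimal NumPy sketch of what the gradient might look like. It is derived only from the forward code above, i.e. loss = -log(sum(sqrt(p * q))) with p = bottom[0] and q = bottom[1], not from the table in the image, so it may differ from the intended formula. The function names, the `eps` guard against division by zero, and the sample values are assumptions made for illustration.

```python
import numpy as np

def loss_forward(p, q):
    # Same computation as the forward pass: -log(sum(sqrt(p * q)))
    return -np.log(np.sum(np.sqrt(p * q)))

def loss_backward(p, q, eps=1e-12):
    # d(loss)/d(p[i]) = -0.5 * sqrt(q[i] / p[i]) / sum_j sqrt(p[j] * q[j])
    # eps is an assumed guard so that p[i] == 0 does not divide by zero
    s = np.sum(np.sqrt(p * q))
    return -0.5 * np.sqrt(q / (p + eps)) / s

def numeric_grad(p, q, h=1e-6):
    # Central finite differences, used only to sanity-check loss_backward
    grad = np.zeros_like(p)
    for i in range(p.size):
        step = np.zeros_like(p)
        step[i] = h
        grad[i] = (loss_forward(p + step, q) - loss_forward(p - step, q)) / (2 * h)
    return grad

# Hypothetical sample values, not taken from the question
p = np.array([0.2, 0.3, 0.5])
q = np.array([0.1, 0.6, 0.3])
print(loss_backward(p, q))
print(numeric_grad(p, q))
```

Inside the Python layer, the analytic gradient would presumably be written to `bottom[0].diff[...]` in `backward(self, top, propagate_down, bottom)`, possibly scaled by `top[0].diff`, which I believe carries the loss weight for a loss layer.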