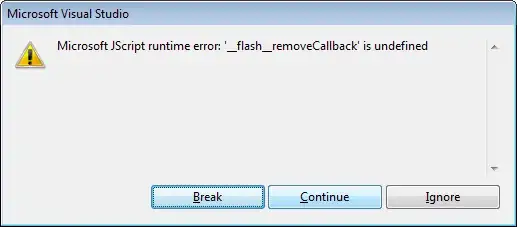

So I'm trying to implement the Deep Q-learning algorithm created by Google DeepMind, and I think I have a pretty good grasp of it now. Yet there is still one (pretty important) thing I don't really understand, and I hope you can help.

Doesn't y_j evaluate to a single double (Java), while the latter part is a matrix containing the Q-values for each action in the current state, in the following line (4th last line in the algorithm)?

(y_j - Q(φ_j, a_j; θ))²

So how can I subtract one from the other?

Should I make y_j a matrix containing all the Q-values the network outputs for the current state, except with the entry for the currently selected action replaced by the target value?
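To make the idea concrete, here is a minimal Java sketch of what I have in mind. It assumes the network returns one Q-value per action as a `double[]`, and that the target for the selected action is the reward for a terminal transition, or the reward plus the discounted maximum next-state Q-value otherwise. All names (`TargetSketch`, `buildTarget`, `qCurrent`, `qNext`, `gamma`) are made up for illustration and are not taken from the paper.

```java
import java.util.Arrays;

class TargetSketch {
    // Hypothetical helper: builds a target vector with the same shape as the network output.
    // qCurrent: Q-values predicted for the current state (one per action)
    // qNext:    Q-values predicted for the next state (one per action)
    static double[] buildTarget(double[] qCurrent, double[] qNext, int action,
                                double reward, double gamma, boolean terminal) {
        // Start from a copy of the network's own output, so the error is zero
        // for every action that was not selected.
        double[] target = qCurrent.clone();

        // Largest Q-value over all actions in the next state.
        double maxNext = Double.NEGATIVE_INFINITY;
        for (double q : qNext) {
            maxNext = Math.max(maxNext, q);
        }

        // Scalar target y_j: the reward alone if the episode ended,
        // otherwise the reward plus the discounted best next-state value.
        double y = terminal ? reward : reward + gamma * maxNext;

        // Overwrite only the entry of the action that was actually taken.
        target[action] = y;
        return target;
    }

    public static void main(String[] args) {
        double[] qCurrent = {0.5, 1.0, -0.25};
        double[] qNext = {0.0, 2.0, 1.0};
        double[] target = buildTarget(qCurrent, qNext, 1, 1.0, 0.5, false);
        System.out.println(Arrays.toString(target)); // prints [0.5, 2.0, -0.25]
    }
}
```

If I understand it correctly, the difference between this target vector and the network output would then be zero everywhere except at the selected action, so only that output would contribute to the squared error.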

This doesn't seem like the right answer and I'm a bit lost here as you can see.