I'm trying to count the number of occurrences of each int from one to six, inclusive, in an array of size 6. I want to return an array in which each index holds the number of times a value appears, with the count for one at index zero (so the count for value v is at index v - 1).

Example:

Input: [3,2,1,4,5,1,3]

Expected output: [2,1,2,1,1,0].
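
To make the intended mapping concrete, here is a minimal sketch of the result I'm after, assuming a plain int[] as input. The names TallySketch and tally are made up for illustration and are not part of my actual code:

```java
import java.util.Arrays;

class TallySketch {
    // Hypothetical helper: counts[v - 1] holds how many times v appears.
    static int[] tally(int[] values) {
        int[] counts = new int[6];
        for (int v : values) {
            if (v >= 1 && v <= 6) {   // ignore anything outside 1..6
                counts[v - 1]++;
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        int[] input = {3, 2, 1, 4, 5, 1, 3};
        System.out.println(Arrays.toString(tally(input)));  // prints [2, 1, 2, 1, 1, 0]
    }
}
```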

Problem:

It outputs [1,1,3,0,1,0] with the code excerpt below. How can I fix this? I can't find where I'm going wrong.

    public static int arrayCount(int[] array, int item) {
        int amt = 0;
        for (int i = 0; i < array.length; i++) {
            if (array[i] == item) {
                amt++;
            }
        }
        return amt;
    }

    public int[] countNumOfEachScore() {
        int[] scores = new int[6];
        int[] counts = new int[6];
        for (int i = 0; i < 6; i++) {
            scores[i] = dice[i].getValue();
        }
        for (int j = 0; j < 6; j++) {
            counts[j] = arrayCount(scores, j + 1);
        }
        return counts;
    }

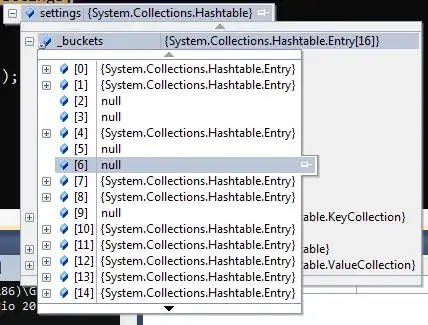

dice[] is an array of Die objects, each of which has a getValue() method that returns an int between 1 and 6, inclusive. counts[] is the int array that ends up with the wrong contents.
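
One way to check the counting separately from the dice is a small harness with a stand-in for Die that always returns a fixed value. This is only a sketch: FixedDie and CountHarness are made-up names, and my real Die class may behave differently. If this prints the expected counts, the problem is presumably in how the dice values are produced or read rather than in the counting loops.

```java
import java.util.Arrays;

// Hypothetical stand-in for Die: always returns the value it was built with.
class FixedDie {
    private final int value;

    FixedDie(int value) {
        this.value = value;
    }

    int getValue() {
        return value;
    }
}

class CountHarness {
    private final FixedDie[] dice;

    CountHarness(int... values) {
        dice = new FixedDie[values.length];
        for (int i = 0; i < values.length; i++) {
            dice[i] = new FixedDie(values[i]);
        }
    }

    // Same counting logic as arrayCount in the question.
    static int arrayCount(int[] array, int item) {
        int amt = 0;
        for (int value : array) {
            if (value == item) {
                amt++;
            }
        }
        return amt;
    }

    // Same logic as countNumOfEachScore in the question,
    // except that it uses dice.length instead of a hard-coded 6.
    int[] countNumOfEachScore() {
        int[] scores = new int[dice.length];
        for (int i = 0; i < dice.length; i++) {
            scores[i] = dice[i].getValue();   // each die is read exactly once
        }
        int[] counts = new int[6];
        for (int j = 0; j < 6; j++) {
            counts[j] = arrayCount(scores, j + 1);
        }
        return counts;
    }

    public static void main(String[] args) {
        CountHarness harness = new CountHarness(3, 2, 1, 4, 5, 1);
        System.out.println(Arrays.toString(harness.countNumOfEachScore()));  // prints [2, 1, 1, 1, 1, 0]
    }
}
```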