I am trying to save a Spark SQL DataFrame to Hive. The stored data should be partitioned by one of the DataFrame's columns. To do that, I have written the following code:

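    import org.apache.spark.{SparkConf, SparkContext}
    import org.apache.spark.sql.SaveMode
    import org.apache.spark.sql.hive.HiveContext

    val conf = new SparkConf().setAppName("Hive partitioning")
    conf.set("spark.scheduler.mode", "FAIR")

    val sc = new SparkContext(conf)
    val hiveContext = new HiveContext(sc)

    // Allow dynamic partitioning without requiring a static partition value
    hiveContext.setConf("hive.exec.dynamic.partition", "true")
    hiveContext.setConf("hive.exec.dynamic.partition.mode", "nonstrict")

    val df = hiveContext.sql(".... my sql query ....")
    df.printSchema()

    // Append to the table, partitioned by the chosen column
    df.write.mode(SaveMode.Append).partitionBy("<partition column>").saveAsTable("orgs_partitioned")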

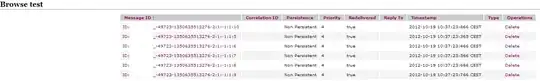

The dataframe is getting stored as table with single column called col and of type array<string>, the structure is as shown below(Screenshot from Hue).
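For comparison with what Hue displays, here is a minimal sketch of how the same table could be inspected from the Spark side. It assumes a `HiveContext` like the one created in the code above. The helper name `inspectTable` is hypothetical and is not part of my original code.

```scala
import org.apache.spark.sql.hive.HiveContext

// Hypothetical helper: prints how Spark itself sees a saved table,
// so the result can be compared with the structure shown in Hue.
def inspectTable(hiveContext: HiveContext, tableName: String): Unit = {
  // Schema Spark resolves when it reads the table back
  hiveContext.table(tableName).printSchema()

  // Column listing returned by a DESCRIBE issued through Spark SQL
  hiveContext.sql(s"DESCRIBE $tableName").collect().foreach(println)
}
```

Calling `inspectTable(hiveContext, "orgs_partitioned")` after the write should show whether Spark reads back the original columns even though Hue reports only `col`.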

Any pointers would be very helpful. Thanks.