I want to calibrate a car video recorder and use it for 3D reconstruction with Structure from Motion (SfM). The original size of the pictures I have taken with this camera is 1920x1080. I have basically been using the source code from the OpenCV tutorial for the calibration.

But there are some problems and I would really appreciate any help.

So, as usual (at least in the above source code), here is the pipeline:

- Find the chessboard corners with `findChessboardCorners`
- Get their subpixel values with `cornerSubPix`
- Draw them for visualisation with `drawChessboardCorners`
- Then, calibrate the camera with a call to `calibrateCamera`
- Call `getOptimalNewCameraMatrix` and the `undistort` function to undistort the image

In my case, since the image is too big (1920x1080), I have resized it to 640x360 (I will also use this image size during SfM, so I don't think it should be a problem). I have also used a chessboard with 9x6 inner corners for the calibration.
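
As a quick sanity check on the resized dimensions, here is a minimal sketch of an aspect-preserving resize calculation; it gives 640x360 for a 1920x1080 input, matching the size noted in the source code comment. It also shows how intrinsics calibrated at full resolution would scale if one calibrated on the originals and rescaled afterwards. The helper names and the sample intrinsics are hypothetical, and the sketch assumes a single uniform scale factor and ignores half-pixel centre conventions.

```python
def resized_shape(width, height, new_width):
    """Return (new_width, new_height) for an aspect-preserving resize."""
    scale = new_width / float(width)
    return new_width, int(round(height * scale))


def scale_intrinsics(fx, fy, cx, cy, scale):
    """Scale focal lengths and principal point by the resize factor.

    The distortion coefficients are left alone because they act on
    normalized (not pixel) coordinates.
    """
    return fx * scale, fy * scale, cx * scale, cy * scale


new_w, new_h = resized_shape(1920, 1080, 640)
print(new_w, new_h)  # 640 360

# Hypothetical full-resolution intrinsics, for illustration only.
fx, fy, cx, cy = scale_intrinsics(1500.0, 1500.0, 960.0, 540.0, new_w / 1920.0)
print(fx, fy, cx, cy)
```

In the script in this question the calibration is run directly on the resized images, so no rescaling of the camera matrix is involved there.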

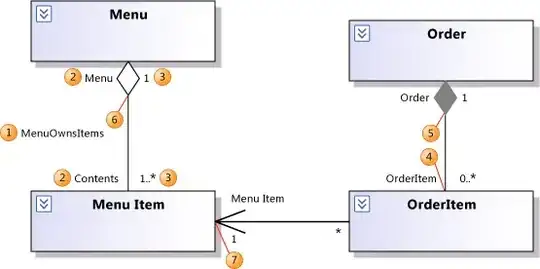

Here the problem arises. After the call to `getOptimalNewCameraMatrix`, the undistorted result comes out totally wrong, and the returned ROI is even [0,0,0,0]. Below is the original image and its undistorted version:

You can see that, in the undistorted version, the image ends up at the bottom left.

But if I don't call `getOptimalNewCameraMatrix` and just undistort the image directly, I get a fairly good image.
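
One detail worth separating out: the script crops with the returned ROI, so an ROI of [0,0,0,0] makes the slice `dst[y:y+h, x:x+w]` empty regardless of what the undistorted image looks like. Below is a minimal sketch of a guard against that. It uses a nested list as a stand-in for the image so that it runs without OpenCV, and `crop_to_roi` is a hypothetical helper, not an OpenCV function.

```python
def crop_to_roi(image, roi):
    """Crop a row-major image (list of rows) to roi = (x, y, w, h).

    Returns the image unchanged when the ROI has zero area, so the
    caller never ends up with an empty result.
    """
    x, y, w, h = roi
    if w <= 0 or h <= 0:
        return image
    return [row[x:x + w] for row in image[y:y + h]]


# Tiny stand-in image with 3 rows and 4 columns.
image = [[0, 1, 2, 3],
         [4, 5, 6, 7],
         [8, 9, 10, 11]]

print(crop_to_roi(image, (1, 1, 2, 2)))          # [[5, 6], [9, 10]]
print(crop_to_roi(image, (0, 0, 0, 0)) is image)  # True
```

With a NumPy image the same width/height check could be made before slicing, which at least lets the uncropped undistorted image be inspected.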

So, I have three questions.

- Why is this? I have tried another dataset taken with the same camera, and also one taken with my iPhone 6 Plus, but the results are the same as above.
- What does `getOptimalNewCameraMatrix` do? I have read the documentation several times but still cannot understand it. From what I have observed, if I don't call `getOptimalNewCameraMatrix`, my image retains its size but is zoomed and blurred. Can anybody explain this function in more detail?
- For SfM, I guess the call to `getOptimalNewCameraMatrix` is important? Without it, the undistorted image would be zoomed and blurred, making keypoint detection harder (in my case, I will be using optical flow).

I have tested the code with the OpenCV sample pictures and the results are just fine.

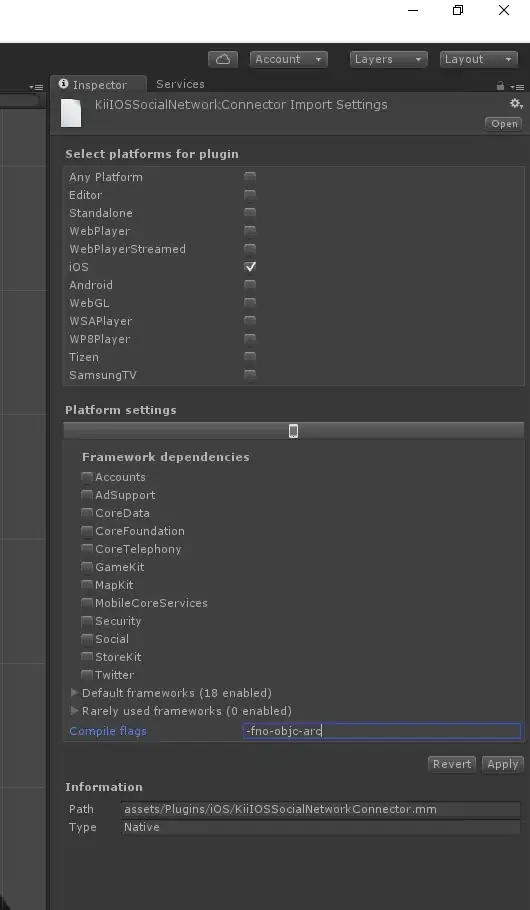

Below is my source code:

    from sys import argv
    import numpy as np
    import imutils  # To use the imutils.resize function.
                    # Resizing while preserving the image's ratio.
                    # In this case, resizing 1920x1080 into 640x360.
    import cv2
    import glob

    # termination criteria
    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)

    # prepare object points, like (0,0,0), (1,0,0), (2,0,0) ....,(8,5,0)
    objp = np.zeros((9*6, 3), np.float32)
    objp[:, :2] = np.mgrid[0:9, 0:6].T.reshape(-1, 2)

    # Arrays to store object points and image points from all the images.
    objpoints = []  # 3d point in real world space
    imgpoints = []  # 2d points in image plane.

    images = glob.glob(argv[1] + '*.jpg')
    width = 640

    for fname in images:
        img = cv2.imread(fname)
        if width:
            img = imutils.resize(img, width=width)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

        # Find the chess board corners
        ret, corners = cv2.findChessboardCorners(gray, (9, 6), None)

        # If found, add object points, image points (after refining them)
        if ret == True:
            objpoints.append(objp)
            corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
            imgpoints.append(corners2)

            # Draw and display the corners
            img = cv2.drawChessboardCorners(img, (9, 6), corners2, ret)
            cv2.imshow('img', img)
            cv2.waitKey(500)

    cv2.destroyAllWindows()

    ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)

    for fname in images:
        img = cv2.imread(fname)
        if width:
            img = imutils.resize(img, width=width)
        h, w = img.shape[:2]
        newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mtx, dist, (w, h), 1, (w, h))

        # undistort
        dst = cv2.undistort(img, mtx, dist, None, newcameramtx)

        # crop the image
        x, y, w, h = roi
        dst = dst[y:y+h, x:x+w]
        cv2.imshow("undistorted", dst)
        cv2.waitKey(500)

    # mean re-projection error over all calibration images
    mean_error = 0
    for i in range(len(objpoints)):
        imgpoints2, _ = cv2.projectPoints(objpoints[i], rvecs[i], tvecs[i], mtx, dist)
        error = cv2.norm(imgpoints[i], imgpoints2, cv2.NORM_L2) / len(imgpoints2)
        mean_error += error

    print("total error: {}".format(mean_error / len(objpoints)))
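
For readers unsure what the "total error" printed at the end of the script measures, here is a minimal pure-Python sketch of the per-image value: the L2 norm over all coordinate differences between detected and re-projected corners, divided by the number of corners. The function name and the sample points are made up for illustration; the real script obtains the projected points from `cv2.projectPoints`.

```python
import math


def reprojection_error(observed, projected):
    """L2 norm of all coordinate differences, divided by the point count."""
    squared = sum((ox - px) ** 2 + (oy - py) ** 2
                  for (ox, oy), (px, py) in zip(observed, projected))
    return math.sqrt(squared) / len(observed)


# Made-up corner positions: only the first point is off, by (3, 4) pixels.
observed = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
projected = [(3.0, 4.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]

print(reprojection_error(observed, projected))  # 1.25
```

The script then averages this per-image value over all images in which the chessboard was found.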

I have already asked on answers.opencv.org, and someone there tried my code with my dataset successfully. I wonder what is actually wrong.