There is actually a perfectly logical reason why one function proves marginally faster than the other, and it has nothing to do with the fact that you removed or added the empty() condition.

These differences are so tiny that they have to be measured in microseconds, but there is in fact a difference. The difference comes from the order in which the functions are called, or more specifically, where they're allocated in the PHP VM's heap.

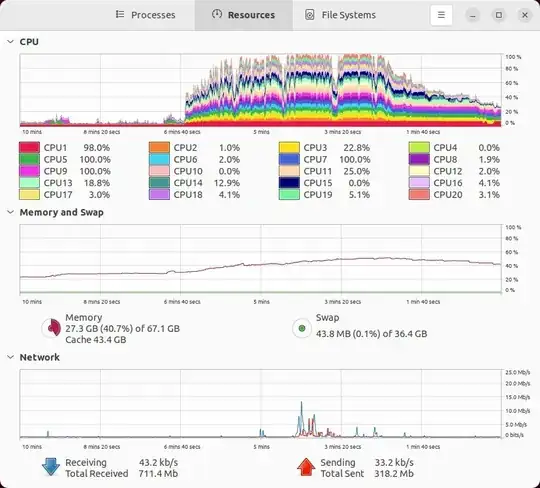

The differences in performance you're experiencing here can vary from system to system, so there should be no expectation of identical results. However, given a fixed set of system specs, some outcomes are predictable. From this we can reproduce consistent results to show why there are just a few microseconds of difference between the two functions.

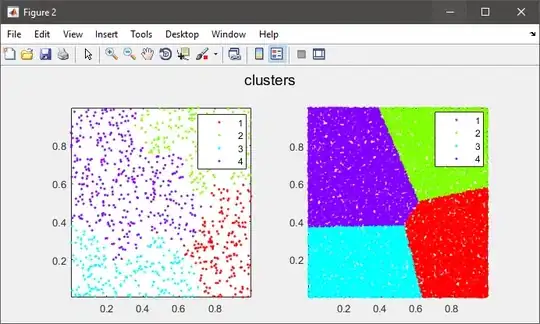

First, take a look at this 3v4l, where we define your function with and without the empty() condition as validateStringByPrefix1() and validateStringByPrefix2(), respectively. Here we call validateStringByPrefix2() first, which results in what appears to be a 40 microsecond execution time. Note that in both cases the function should return false, and empty($prefix) will never be true (just as in your own test). The second call, the one that uses empty($prefix), looks like it actually performed faster, at 11 microseconds.

Second, take a look at this 3v4l, where we define exactly the same functions but call validateStringByPrefix1() first, and get the opposite results. Now it looks like the function without empty($prefix) runs faster, at 12 microseconds, and the other runs slower, at 88 microseconds.
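To try the order swap yourself, here is a minimal sketch of that kind of one-shot test. The function bodies are hypothetical stand-ins (your real implementation isn't reproduced here), and the `$order` array, the sample arguments, and the output format are my own assumptions; only the function names come from the tests above.

```php
<?php
// Hypothetical stand-in: the version WITH the empty() condition.
function validateStringByPrefix1(string $string, string $prefix): bool
{
    if (empty($prefix)) {
        return false;
    }
    return strncmp($string, $prefix, strlen($prefix)) === 0;
}

// Hypothetical stand-in: the version WITHOUT the empty() condition.
function validateStringByPrefix2(string $string, string $prefix): bool
{
    return strncmp($string, $prefix, strlen($prefix)) === 0;
}

// Swap the two names to reproduce the second test.
$order = ['validateStringByPrefix2', 'validateStringByPrefix1'];

$timings = [];
foreach ($order as $fn) {
    $start = microtime(true);
    $fn('some string', 'prefix'); // returns false; empty($prefix) is never true
    $timings[$fn] = (microtime(true) - $start) * 1e6; // seconds to microseconds
}

foreach ($timings as $fn => $microseconds) {
    printf("%s: %.1f microseconds\n", $fn, $microseconds);
}
```

The exact numbers will differ per run and per machine, so compare the two orderings rather than the absolute values.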

Remember that microtime() is not actually an accurate clock. It can fluctuate by a few microseconds, but typically not enough to be an order of magnitude slower or faster on average. So yes, there is a difference, but no, it's not because of the use of empty() or the lack thereof.
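If you want numbers that are less sensitive to clock jitter and call order, one option is to warm each function up and average over many calls. This is only a sketch under assumptions: the function bodies are hypothetical stand-ins for yours, `averageNanoseconds()` is a helper name I made up, and it relies on `hrtime()`, which requires PHP 7.3 or later.

```php
<?php
// Hypothetical stand-in: the version WITH the empty() condition.
function validateStringByPrefix1(string $string, string $prefix): bool
{
    if (empty($prefix)) {
        return false;
    }
    return strncmp($string, $prefix, strlen($prefix)) === 0;
}

// Hypothetical stand-in: the version WITHOUT the empty() condition.
function validateStringByPrefix2(string $string, string $prefix): bool
{
    return strncmp($string, $prefix, strlen($prefix)) === 0;
}

// Hypothetical helper: average cost of one call, in nanoseconds.
function averageNanoseconds(callable $fn, int $iterations = 100000): float
{
    // Warm-up call, so first-call costs are not counted in the average.
    $fn('some string', 'prefix');

    $start = hrtime(true); // monotonic clock, integer nanoseconds
    for ($i = 0; $i < $iterations; $i++) {
        $fn('some string', 'prefix');
    }

    return (hrtime(true) - $start) / $iterations;
}

$withEmpty    = averageNanoseconds('validateStringByPrefix1');
$withoutEmpty = averageNanoseconds('validateStringByPrefix2');

printf("with empty():    %.1f ns per call\n", $withEmpty);
printf("without empty(): %.1f ns per call\n", $withoutEmpty);
```

The average includes loop and call overhead, which is the same for both functions, so it is the gap between the two numbers that matters, and I would expect it to be much smaller than the one-shot results suggest.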

Instead, this issue has a lot more to do with how a typical x86 architecture works and how your CPU and memory deal with cache. Functions defined in your code will typically be stored into memory by PHP in the order that they are first executed (a compilation step occurs here). The first function to get executed is going to be cached first. Concepts such as write-through cache, with both write-allocate and no-write-allocate policies, can affect this. The next function to be executed overwrites that cache, causing a very slight slowdown in memory access, which may or may not be consistent depending on factors I won't get into here.

However, despite all of these minute differences, there really is no faster or slower result from the use or removal of empty() in this code. These differences are just memory access and allocation trade-offs that every program suffers from.

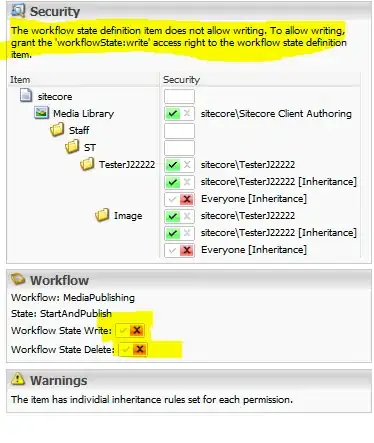

This is why, when you really need to micro-optimize a program's code for the fastest possible execution, you typically go through the painstaking process of profile-guided optimization, or PGO.

Optimization techniques based on analysis of the source code alone are based on general ideas as to possible improvements, often applied without much worry over whether or not the code section was going to be executed frequently though also recognising that code within looping statements is worth extra attention.